Apple introduces “cloudless dictation”, no longer demands your contact list to understand you

Not everyone was happy about Apple’s terms and conditions when it introduced dictation to OS X 10.8 (Mountain Lion).

Speech-to-text was done in the cloud, so Apple got to listen to what you were saying.

In fact, Cupertino didn’t just process your data off-site.

It collected a bunch of other information about you and your contacts, and hung onto this data and your recorded utterances for an unknown amount of time:

When you use the keyboard dictation feature on your computer, the things you dictate will be recorded and sent to Apple to convert what you say into text and your computer will also send Apple other information, such as your name and nickname; and the names, nicknames, and relationship with you (for example, “my dad”) of your address book contacts (collectively, your “User Data”). All of this data is used to help the dictation feature understand you better and recognize what you say. It is not linked to other data that Apple may have from your use of other Apple services.

At the time of Mountain Lion’s release, we acknowledged why this might be useful.

We wrote at the time that “names are notoriously difficult to recognise and spell correctly, since they frequently don’t come from the same linguistic and orthographic history as the language of which they’ve become part. The Australian mainland’s highest point, Mount Kosciuszko, is a lofty example.”

But we also wondered why it was compulsory.

After all, giving Apple’s Dictation app access to the names of my friends wouldn’t help a jot with dictating this article, for example, nor would it help with any of a huge range of other tasks for which I regularly use my computer.

There were also other problems with a pure-play cloud approach, notably due to latency.

No matter how much beefier Apple’s server farms might be than even your quad-core MacBook Pro, you still have to add the round-trip cost of uploading your digitised voice and fetching back the results.
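To put rough numbers on that round trip, here is a back-of-envelope sketch. It is not how Apple’s service actually works: the audio format (16 kHz, 16-bit, uncompressed), the link speeds, the server time and the function name `cloud_wait_seconds` are all hypothetical assumptions chosen purely for illustration, and the model assumes each burst is uploaded only after you stop talking.

```python
# Back-of-envelope model of how long you wait for text when dictation is
# done in the cloud. Every constant and parameter here is a hypothetical
# assumption for illustration, not a figure published by Apple.

SAMPLE_RATE_HZ = 16_000   # assumed speech sample rate
BYTES_PER_SAMPLE = 2      # assumed 16-bit mono PCM, no compression


def cloud_wait_seconds(speech_s: float,
                       uplink_bps: float,
                       downlink_bps: float,
                       server_s: float,
                       rtt_s: float,
                       result_bytes: int = 2_000) -> float:
    """Seconds between finishing a burst of speech and seeing the text.

    Assumes the whole burst is uploaded only after you stop talking,
    then recognised on the server, then sent back as text.
    """
    audio_bits = speech_s * SAMPLE_RATE_HZ * BYTES_PER_SAMPLE * 8
    upload_s = audio_bits / uplink_bps             # push the recording up
    download_s = result_bytes * 8 / downlink_bps   # fetch the text back
    return rtt_s + upload_s + server_s + download_s


if __name__ == "__main__":
    # Hypothetical link: 1 Mbit/s up, 8 Mbit/s down, 100 ms round trip,
    # half a second of server-side recognition.
    for burst in (5, 15, 30):
        wait = cloud_wait_seconds(burst, 1_000_000, 8_000_000, 0.5, 0.1)
        print(f"{burst:>2} s of speech -> {wait:.2f} s wait for text")
```

With these made-up numbers, a full 30-second burst leaves you waiting a little over 8 seconds before any text appears, and the wait grows with the length of each burst. On-device recognition has no upload step at all, which is why it can show words as you speak.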

So cloud-based dictation just didn’t work like it does in the movies, where words pop up, well, word by word as you talk.

Instead, dictation was bursty, and limited to 30 seconds of speech at a time. (That’s only about 70 words, assuming you talk really quickly – and intelligibility drops off with speed.)
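For reference, squeezing about 70 words into the 30-second limit works out to this speaking rate:

$$\frac{70\ \text{words}}{30\ \text{s}} \times 60\ \frac{\text{s}}{\text{min}} = 140\ \text{words per minute}$$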

Recently, of course, yet another reason for not doing your dictation via the cloud has emerged: the perceived extent of governmental snooping of your on-line activity and the data you generate while you do it.

So here’s some good news if you have updated to OS X Mavericks: offline dictation.

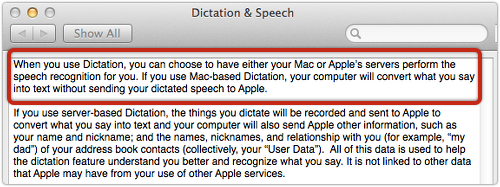

As you can see, the verbiage we quoted above now reads like this:

When you use Dictation, you can choose to have either your Mac or Apple’s servers perform the speech recognition for you. If you use Mac-based Dictation, your computer will convert what you say into text without sending your dictated speech to Apple. If you use server-based Dictation, the things you dictate will be recorded and sent to Apple…

Additionally, it seems that whenever you switch from server-side voice processing to local dictation, Apple throws away anything its dictation engines may have been keeping about you, which is handy to know.

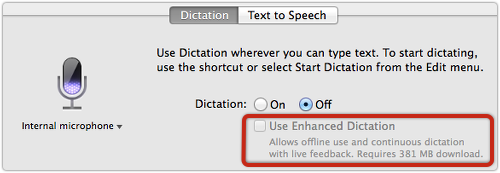

It looks as though this change, introduced in Mavericks, has nothing to do with Edward Snowden and PRISM, and everything to do with quality and usability, since it’s called Enhanced Dictation.

This comes at the cost of a whopping up-front download – 381MB for English (Australia) – that doesn’t seem to have a cancel option if you start it and then change your mind about the bandwidth.

But the new option is not only more private, it also removes the burstiness of cloud-based dictation, giving you “offline use and continuous dictation with live feedback,” which is probably what you expect of a 21st century dictation solution.

And there you have it: there ARE some things that work better without the cloud!

Article source: http://feedproxy.google.com/~r/nakedsecurity/~3/TXs1a2_xoZY/