Anatomy of a "goto fail"

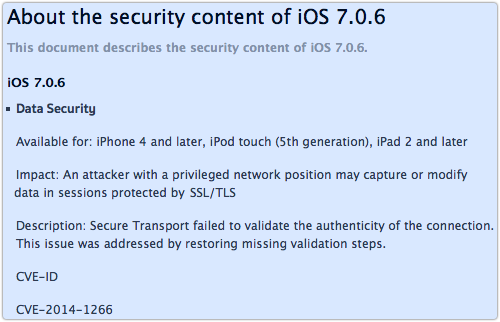

At the end of last week, Apple published iOS 7.0.6, a security update for its mobile devices.

The update was a patch to protect iPhones, iPads and iPods against what Apple described as a “data security” problem:

Impact: An attacker with a privileged network position may capture or modify data in sessions protected by SSL/TLS

Description: Secure Transport failed to validate the authenticity of the connection. This issue was addressed by restoring missing validation steps.

CVE-ID CVE-2014-1266

Apple didn’t say exactly what it meant by “a privileged network position,” or by “the authenticity of the connection,” but the smart money – and my own – was on what’s known as a Man-in-the-Middle attack, or a MitM.

MitM attacks against unencrypted websites are fairly easy.

If you run a dodgy wireless access point, for example, you can trick users who think they are visiting, say, http://example.com/ into visiting a fake site of your choice, because you can redirect their network traffic.

You can then fetch the content they expect from the real site (you are the MitM, after all), feed it back to them with tweaks, modifications and malware, and they may be none the wiser.

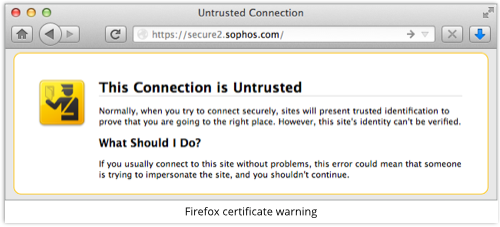

But if they visit https://example.com/ then it’s much harder to trick them, because your MitM site can’t provide the HTTPS certificate that the official site can.

More precisely, a MitM site can send someone else’s certificate, but it almost certainly can’t produce any cryptographic “proof” that it has possession of the private key that the certificate is meant to validate.
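A toy sketch shows why copying the certificate isn't enough. It uses textbook RSA with tiny, wildly insecure numbers (n = 61 × 53 = 3233, e = 17, d = 2753) instead of real TLS signatures, which add large keys, padding and proper hashing; TOY_N, toy_sign() and toy_verify() are hypothetical names for illustration. The certificate publishes n and e, but only the holder of d can produce a signature that checks out.

```c
#include <stdint.h>

/* Toy textbook-RSA key (hypothetical, wildly insecure, illustration only).
   TOY_N = 61 * 53 and TOY_E are public: they are what a certificate carries.
   TOY_D is the private exponent that only the genuine server holds. */
#define TOY_N 3233u
#define TOY_E 17u
#define TOY_D 2753u

/* Square-and-multiply modular exponentiation. With mod = TOY_N, every
   operand stays below 3233, so each product fits easily in a uint64_t. */
static uint64_t modpow(uint64_t base, uint64_t exp, uint64_t mod) {
    uint64_t result = 1;
    base %= mod;
    while (exp > 0) {
        if (exp & 1u)
            result = (result * base) % mod;
        base = (base * base) % mod;
        exp >>= 1;
    }
    return result;
}

/* "Sign" a toy digest with whatever private exponent the signer has. */
static uint64_t toy_sign(uint64_t digest, uint64_t private_exp) {
    return modpow(digest, private_exp, TOY_N);
}

/* Check a signature using only the public values from the certificate:
   returns 1 if sig^e mod n equals the digest, 0 otherwise. */
static int toy_verify(uint64_t digest, uint64_t sig) {
    return modpow(sig, TOY_E, TOY_N) == digest % TOY_N;
}
```

For example, toy_verify(1234, toy_sign(1234, TOY_D)) returns 1, while a MitM that copied the certificate but has to guess the private exponent (1000, say) produces a signature for which toy_verify() returns 0.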

So your visitors end up with a certificate warning that gives away your trickery.

At least, your visitors get a warning if the application they are using actually notices and reports the certificate problem.

→ Recent research suggested that about 40% of mobile banking apps do not check HTTPS certificates properly, or at least do not warn if an invalid certificate is presented. This led us to advise our readers to stick to their desktops or laptops for internet banking. Certificate warnings are important.

What was wrong with Apple’s SSL code?

Apple’s reluctance to give away too much is perhaps understandable in this case, but the result was to send experts scurrying off to fill in the blanks in HT6147, the only official detail about this risky-sounding bug.

The problem soon came to light in a file called sslKeyExchange.c in version 55471 of the source code for SecureTransport, Apple’s official SSL/TLS library.

The buggy codepath into this file comes as a sequence of C function calls, starting off in SecureTransport’s sslHandshake.c.

The bad news is that the bug affects both iOS and OS X, and although it has been patched in iOS, it is not yet fixed in OS X.

If you’d like to follow along, you need to make your way through these function calls:

SSLProcessHandshakeRecord() → SSLProcessHandshakeMessage()

The SSLProcessHandshakeMessage() function deals with a range of different parts of the SSL handshake, such as:

- SSLProcessClientHello()

- SSLProcessServerHello()

- SSLProcessCertificate()

- SSLProcessServerKeyExchange()

This last function is called for certain sorts of TLS connection, notably where forward secrecy is involved.

That’s where the server doesn’t just use its regular public/private keypair to secure the transaction, but also generates a so-called ephemeral, or one-off, keypair that is used as well.

→ The idea of forward secrecy is that if the server throws away the ephemeral keys after each session, then you can’t decrypt sniffed traffic from those sessions in the future, even if you acquire the server’s regular private key by fair means (e.g. a subpoena) or foul (e.g. by bribery or cybertheft).
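A toy finite-field Diffie-Hellman exchange illustrates the ephemeral part. The parameters (p = 23, g = 5) and the secrets used below are the classic textbook values, far too small to be secure, and dh_public() and dh_shared() are hypothetical names; real TLS uses much larger groups or elliptic curves.

```c
#include <stdint.h>

/* Toy Diffie-Hellman public parameters (textbook values, insecure). */
#define DH_P 23u
#define DH_G 5u

/* Square-and-multiply modular exponentiation. */
static uint64_t modpow(uint64_t base, uint64_t exp, uint64_t mod) {
    uint64_t result = 1;
    base %= mod;
    while (exp > 0) {
        if (exp & 1u)
            result = (result * base) % mod;
        base = (base * base) % mod;
        exp >>= 1;
    }
    return result;
}

/* Each side picks a one-off (ephemeral) secret and sends g^secret mod p. */
static uint64_t dh_public(uint64_t ephemeral_secret) {
    return modpow(DH_G, ephemeral_secret, DH_P);
}

/* Each side raises the peer's public value to its own secret; both
   arrive at g^(a*b) mod p without either secret crossing the network. */
static uint64_t dh_shared(uint64_t peer_public, uint64_t ephemeral_secret) {
    return modpow(peer_public, ephemeral_secret, DH_P);
}
```

With a client secret of 6 and a server secret of 15, the public values are 8 and 19, and both sides compute the shared value 2. In an ephemeral TLS key exchange, the server's long-term private key is used to sign its public value (19 here) in the ServerKeyExchange message, and that signature is what SSLVerifySignedServerKeyExchange() is supposed to check. Once 6 and 15 are thrown away, stealing the long-term key later doesn't recover 2 (at real key sizes, anyway; with p = 23 you could simply try every exponent).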

Now the C code proceeds as follows:

SSLProcessServerKeyExchange()

- SSLDecodeSignedServerKeyExchange()

- SSLDecodeXXKeyParams()

- If TLS 1.2: SSLVerifySignedServerKeyExchangeTls12()

- Otherwise: SSLVerifySignedServerKeyExchange()

And the SSLVerifySignedServerKeyExchange function, found in the sslKeyExchange.c file mentioned above, does this:

```c
. . .

hashOut.data = hashes + SSL_MD5_DIGEST_LEN;
hashOut.length = SSL_SHA1_DIGEST_LEN;
if ((err = SSLFreeBuffer(&hashCtx)) != 0)
    goto fail;
if ((err = ReadyHash(&SSLHashSHA1, &hashCtx)) != 0)
    goto fail;
if ((err = SSLHashSHA1.update(&hashCtx, &clientRandom)) != 0)
    goto fail;
if ((err = SSLHashSHA1.update(&hashCtx, &serverRandom)) != 0)
    goto fail;
if ((err = SSLHashSHA1.update(&hashCtx, &signedParams)) != 0)
    goto fail;
    goto fail;  /* MISTAKE! THIS LINE SHOULD NOT BE HERE */
if ((err = SSLHashSHA1.final(&hashCtx, &hashOut)) != 0)
    goto fail;

err = sslRawVerify(...);

. . .
```

You don’t really need any knowledge of C, or even of programming, to understand the error here.

The programmer is supposed to calculate a cryptographic checksum of three data items – the three calls to SSLHashSHA1.update() – and then to call the all-important function sslRawVerify().

If sslRawVerify() succeeds, then err ends up with the value zero, which means “no error”, and that’s what the SSLVerifySignedServerKeyExchange function returns to say, “All good.”

But in the middle of this code fragment, you can see that the programmer has accidentally (no conspiracy theories, please!) repeated the line goto fail;.

The first goto fail happens if the condition in the if statement is true, i.e. if there has been a problem and therefore err is non-zero.

This causes an immediate “bail with error,” and the entire TLS connection fails.

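But because of the peccadilloes of C, the second goto fail, which shouldn’t be there, always happens if the first one doesn’t, i.e. if err is zero and there is actually no error to report. An if without braces controls only the single statement that follows it, so however the second goto fail is indented, it isn’t part of the if at all.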

The result is that the code leaps over the vital call to sslRawVerify(), and exits the function.

This causes an immediate “exit and report success,” because err is still zero, and the TLS connection succeeds, even though the verification process hasn’t actually taken place.
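The control flow can be reproduced in a few lines of standalone C. This is a hypothetical reduction, not Apple’s code: hash_step() stands in for the hashing calls and always succeeds, while raw_verify_rejects() stands in for sslRawVerify() and always rejects, as it would for an impostor server without the right private key.

```c
/* Hypothetical stubs: every hashing step succeeds (0 means "no error"),
   and the stand-in for sslRawVerify() always rejects the signature. */
static int verify_calls = 0;

static int hash_step(void) { return 0; }

static int raw_verify_rejects(void) {
    verify_calls++;   /* count how often the real check actually runs */
    return -1;        /* non-zero: signature does not match */
}

/* Same shape as the buggy code: the second goto is not inside any if. */
static int verify_buggy(void) {
    int err;
    if ((err = hash_step()) != 0)
        goto fail;
        goto fail;                    /* always taken, with err still 0 */
    if ((err = hash_step()) != 0)     /* unreachable from here down... */
        goto fail;
    err = raw_verify_rejects();       /* ...so this check never runs */
fail:
    return err;
}

/* The stray line is gone, and braces keep each goto inside its if:
   even a duplicated goto within the braces would be harmless. */
static int verify_fixed(void) {
    int err;
    if ((err = hash_step()) != 0) {
        goto fail;
    }
    if ((err = hash_step()) != 0) {
        goto fail;
    }
    err = raw_verify_rejects();
fail:
    return err;
}
```

verify_buggy() returns 0 (“all good”) without ever calling the verifier, while verify_fixed() calls it once and passes on its -1. Compiler warnings can help here: GCC’s -Wmisleading-indentation and Clang’s -Wunreachable-code are both designed to flag code shaped like verify_buggy().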

What an attacker can do

An attacker now has a way to trick users of OS X 10.9 into accepting SSL/TLS certificates that ought to be rejected, though admittedly there are several steps, and he needs to:

- Trick you into visiting an imposter HTTPS site, e.g. by using a poisoned public Wi-Fi access point.

- Force your browser (or other software) into using forward secrecy, which is possible because the server decides what encryption algorithms it will support.

- Force your browser (or other software) into using a TLS version earlier than 1.2, such as TLS 1.1, which is possible because the server decides what TLS versions it will allow.

- Supply a legitimate-looking TLS certificate with a mismatched private key.
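The forward-secrecy and TLS-version conditions boil down to a simple test on the negotiated connection. The sketch below is a hypothetical simplification: reaches_buggy_check() and the macro names are invented, the version numbers are the standard on-the-wire values, and “forward secrecy” is treated as shorthand for any key exchange in which the server sends a signed ServerKeyExchange message.

```c
#include <stdbool.h>
#include <stdint.h>

/* On-the-wire protocol version numbers. */
#define TLS1_0 0x0301u
#define TLS1_1 0x0302u
#define TLS1_2 0x0303u

/* Hypothetical summary of the attack conditions: the buggy
   SSLVerifySignedServerKeyExchange() path is only reached when the
   server sends a signed ServerKeyExchange (ephemeral, forward-secret
   key exchange) AND the negotiated version is older than TLS 1.2. */
static bool reaches_buggy_check(bool ephemeral_key_exchange, uint16_t version) {
    if (!ephemeral_key_exchange)
        return false;          /* no ServerKeyExchange signature to check */
    return version < TLS1_2;   /* TLS 1.2 goes to the ...Tls12() variant */
}
```

So reaches_buggy_check(true, TLS1_1) is true, while a TLS 1.2 connection, or one without an ephemeral key exchange, never touches the flawed function.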

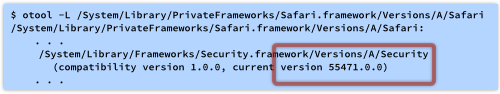

Safari on OS X is definitely affected, because it makes use of the buggy version of SecureTransport.

You can work out if an application is affected by this bug by using Apple’s otool program, which is a handy utility for digging version details out of program files and code libraries.

You use the -L option, which displays the names and version numbers of the shared libraries that a program uses:

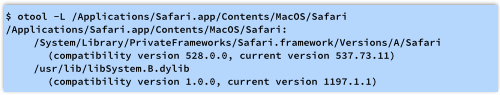

That’s only a start, since the Safari app is just a wrapper for the Safari.framework, which we need to run through otool in its turn:

Other popular apps that link to the buggy library include, in no particular order: Mail, Numbers, Keynote, Pages, Preview and Calendar.

Clearly, not all of these apps put you in quite the same immediate danger as Safari, but the list presents a good reminder of why shared libraries are both a blessing (one patch fixes the lot) and a curse (one bug affects them all).

Popular apps in which encryption is important but that don’t seem to use the buggy library include: Firefox, Chromium (and Chrome), Thunderbird and Skype.

What to do?

The good news is that Apple has broken its usual code of silence, by which it “does not disclose, discuss, or confirm security issues until a full investigation has occurred and any necessary patches or releases are available.”

Reuters reports that an official Apple spokesperson, Trudy Muller, has gone on the record to say, “We are aware of this issue and already have a software fix that will be released very soon.”

Sadly, she didn’t define “very soon,” but you should watch for this patch and apply it as soon as you can.

→ When you update, be sure to follow the advice below about avoiding insecure networks. The Software Update app itself uses the buggy Security framework, which contains SecureTransport!

We suggest that you try any or all of the following:

Avoid insecure networks

Connecting to other people’s Wi-Fi networks, even if they are password protected, can be dangerous.

Even if you trust the person who runs the network – a family member or a friend, perhaps – you also need to trust that they have kept their access point secure from everyone else.

If you are using your computer for business, consider asking your employer to set you up as part of the company’s VPN (virtual private network), if you have one.

This limits your freedom and flexibility very slightly, but makes you a lot more secure.

With a VPN, you use other people’s insecure networks only to create an encrypted data path back into the company network, from where you access the internet as if you were an insider.

Use a web filtering product that can scan HTTPS traffic

Products like the Sophos Web Appliance and Sophos UTM can inspect HTTPS traffic – ironically by decrypting and re-encrypting it, but without any certificate shenanigans like a man-in-the-middle crook might try.

Because the Sophos web filtering products do not use Apple’s libraries, or even Apple’s operating system, they are not vulnerable to the certificate trickery described above, so certificate validation will fail, as it should.

Switch to an alternative browser

Alternative browsers such as Firefox and Chromium (as well as Chrome) use their own SSL/TLS libraries as a way of making the applications easier to support on multiple operating systems.

In this case, that has the effect of immunising them against the bug in Apple’s SecureTransport library.

You can switch back to Safari after Apple’s patch is out.

Try this completely unofficial patch!

(Only kidding. You wouldn’t dream of applying a little-tested hack to an important system library, would you?)

This patch exists only:

- To demonstrate that emergency “fixes” don’t always fix, but often can only work around problems.

- To show what C code looks like when compiled to assembler.

- To give some insight into how unauthorised hacks, for good and bad, can be achieved.

- To introduce the OS X codesign utility and Apple’s code signing protection.

- For fun.

By all means take a look – but for research purposes only, of course.

Article source: http://feedproxy.google.com/~r/nakedsecurity/~3/tiCuEvL9Gw0/