Far from being cyber-spy geniuses with ninja-like black-hat coding skills, the developers of Stuxnet made a number of mistakes that exposed their malware to earlier detection and meant the worm spread more widely than intended.

Stuxnet, the infamous worm that infected SCADA-based computer control systems, is sometimes described as the world’s first cyber-security weapon. It managed to infect facilities tied to Iran’s controversial nuclear programme before re-programming control systems to spin up high-speed centrifuges and slow them down, inducing more failures than normal as a result. The malware used rootkit-style functionality to hide its presence on infected systems. In addition, Stuxnet made use of four zero-day Windows exploits as well as stolen digital certificates.

All this failed to impress security consultant Tom Parker, who told the Black Hat DC conference on Tuesday that the developers of Stuxnet had made several mistakes. For one thing, the command-and-control mechanisms used by the worm were inelegant, not least because they sent commands in the clear. The worm spread widely across the net, something Parker argued was ill-suited for the presumed purpose of the worm as a mechanism for targeted computer sabotage. Lastly, the code-obfuscation techniques were lame.

Parker doesn’t dispute that the worm is as sophisticated as most previous analysis would suggest, or that it took considerable skills and testing to develop. “Whoever did this needed to know WinCC programming, Step 7, they needed platform process knowledge, the ability to reverse engineer a number of file formats, kernel rootkit development and exploit development,” Parker said, Threatpost reports. “That’s a broad set of skills.”

Parker floated the theory that two teams might have been involved in the release of Stuxnet: one a crew of skilled black-hat programmers, who worked on the code and exploits, and the second a far less adept group who weaponised the malware – the point where most of the shortcomings of the code are located. He suggested that a Western state was unlikely to be responsible for developing Stuxnet because its intelligence agencies would have done a better job at packaging the malware payload.

Nate Lawson, an expert on the security of embedded systems, also criticised the cloaking and obfuscation techniques applied by the malware’s creators, arguing that teenage Bulgarian VXers had managed a much better job on those fronts as long ago as the 1990s.

“Rather than being proud of its stealth and targeting, the authors should be embarrassed at their amateur approach to hiding the payload,” Lawson writes. “I really hope it wasn’t written by the USA because I’d like to think our elite cyberweapon developers at least know what Bulgarian teenagers did back in the early 90s.” [1]

He continues: “First, there appears to be no special obfuscation. Sure, there are your standard routines for hiding from AV tools, XOR masking, and installing a rootkit. But Stuxnet does no better at this than any other malware discovered last year. It does not use virtual machine-based obfuscation, novel techniques for anti-debugging, or anything else to make it different from the hundreds of malware samples found every day.

“Second, the Stuxnet developers seem to be unaware of more advanced techniques for hiding their target. They use simple ‘if/then’ range checks to identify Step 7 systems and their peripheral controllers. If this was some high-level government operation, I would hope they would know to use things like hash-and-decrypt or homomorphic encryption to hide the controller configuration the code is targeting and its exact behavior once it did infect those systems,” he adds.
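To make Lawson's contrast concrete, here is a minimal, harmless sketch of the hash-and-decrypt idea as it is generally understood: the hidden data is encrypted under a key derived from a hash of the expected configuration, so the code never contains that configuration in readable form, whereas a plain range check spells it out. Everything here is an assumption for illustration only: the function names, the configuration strings, the salt and the toy SHA-256 keystream are hypothetical, and none of it is taken from Stuxnet or from Lawson's own write-up.

```python
import hashlib
import hmac


def range_check(value: int) -> bool:
    # Plain "if/then" check: the bounds (hypothetical numbers) are
    # readable by anyone who inspects the code.
    return 100 <= value <= 200


def derive_key(config: bytes, salt: bytes) -> bytes:
    # The key exists only where the observed configuration matches.
    return hashlib.sha256(salt + config).digest()


def keystream_xor(data: bytes, key: bytes) -> bytes:
    # Toy stream cipher (SHA-256 over key + block offset), for illustration only.
    out = bytearray()
    for offset in range(0, len(data), 32):
        block = hashlib.sha256(key + offset.to_bytes(8, "big")).digest()
        out.extend(a ^ b for a, b in zip(data[offset:offset + 32], block))
    return bytes(out)


def seal(message: bytes, expected_config: bytes, salt: bytes):
    # Store only a tag derived from the key, plus the ciphertext.
    key = derive_key(expected_config, salt)
    tag = hashlib.sha256(b"tag" + key).digest()
    return tag, keystream_xor(message, key)


def try_open(tag: bytes, blob: bytes, observed_config: bytes, salt: bytes):
    # Re-derive the key from what is observed; reveal nothing on a mismatch.
    key = derive_key(observed_config, salt)
    candidate = hashlib.sha256(b"tag" + key).digest()
    if not hmac.compare_digest(candidate, tag):
        return None
    return keystream_xor(blob, key)


tag, blob = seal(b"hidden note", b"model=A;count=3", b"demo-salt")
print(try_open(tag, blob, b"model=A;count=3", b"demo-salt"))
print(try_open(tag, blob, b"model=B;count=3", b"demo-salt"))
```

An analyst reading `range_check` learns the bounds immediately; one reading the sealed version sees only a tag and ciphertext, and would have to guess the configuration to recover anything.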

Several theories about the development of Stuxnet exist, the most credible of which suggests it was developed by US and Israeli intelligence agencies as a means of sabotaging Iran’s nuclear facilities without resorting to direct military action. A report by the New York Times earlier this week suggested Stuxnet was a joint US-Israeli operation that was tested by Israel on industrial control systems at the Dimona nuclear complex during 2008 prior to its release a year later, around June 2009. The worm wasn’t detected by anyone until a year later, suggesting that for all its possible shortcomings the worm was effective at escaping detection on compromised systems. ®

[1] This is a reference to the then-revolutionary virus mutation (polymorphic) technique popularised by a VXer called Dark Avenger, from Bulgaria, back in 1991. The true identity of Dark Avenger has never been established, though there is no shortage of conspiracy theories floating around the net.

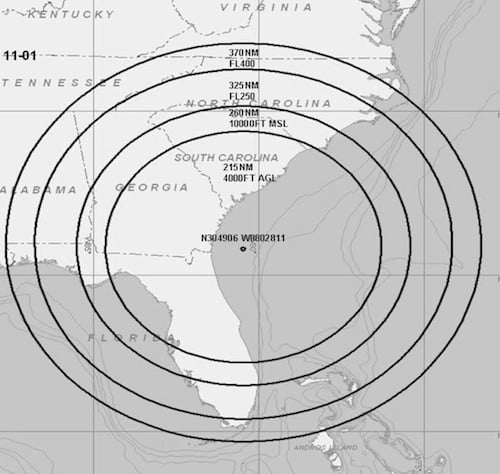

Source: FAA
