Power to the people! Google backtracks (a bit) on forced Chrome logins

Crowdpower!

Even the mighty, all-seeing EoG (eye of Google) can’t always predict how its users are going to feel about new features that are so “obviously” cool that they get turned on by default.

Here at Naked Security, we’ve always favoured opt in, where new features really are so nice to have that users can’t wait to enable them, rather than opt out, where users get the choice made for them and can’t wait to find out how to undo it.

So we weren’t surprised that there was quite a backlash from Google Chrome users when the latest update to the world’s most widely used browser changed the way that logging in worked.

As we reported earlier this week:

Users were complaining this week after discovering they’d been logged in to Google’s Chrome browser automatically after logging into a Google website.

In the past, by default, logging into Gmail and Chrome were two separate actions – if you fired up Chrome to read your Gmail, you wouldn’t end up logged in to Chrome as well.

You could choose to enable what’s often referred to as single sign-on, but it wasn’t out-of-the-box behaviour.

But Google – surprise, surprise – figured that what it calls “sign-in consistency” would be such a great help (to Google, if not necessarily to you) that it started doing a sort of single sign-on by default, instead of treating your various Google accounts separately.

As you can imagine, or have probably experienced for yourself if you are a Chrome user, that’s a rather serious sort of change to make without asking.

It’s a bit like a home automation system deciding that after the next firmware update it will start unlocking all your doors at the same time whenever you open your garage, even though that’s not how it worked before.

You can just imagine the marketing focus group arguing for this feature – it means you can park and then go straight on into your house with your arms full of groceries without fumbling for your keyfob a second time, and why did we never think of that before?

“Hey,” the focus group choruses, “A vocal minority has been shouting for this feature so we absolutely must have it, and everyone should get it right away just to prove that we’re clever!”

And you can imagine the techies arguing just as strongly against it – you could easily end up leaving your whole house unlocked unintentionally, and why did we ever think that was a good idea?

“Back off,” retort the techies, “You can’t loosen up security settings and then wait for users to realise by accident, so no one should get it without being asked first.”

Of course, you can also imagine the marketing team winning the battle: because frictionlessness; because cool; because shiny new feature; because progress; because, well, because “duh”! (Because lead generation opportunities, too, but let’s just think that instead of saying it out loud.)

Well, Google has capitulated, sort of.

The company still thinks you ought to appreciate the new feature of autologin, and it pretty much implies that you’re wrong if you feel otherwise, but it has had the good grace to pay attention nevertheless:

We’ve heard — and appreciate — your feedback. We’re going to make a few updates in the next release of Chrome (Version 70, released mid-October) to better communicate our changes and offer more control over the experience.

What Google doesn’t seem to have done is revert the unpopular change to the status quo ante – by default, as far as we can see, logging in to any Google service in Chrome will still log you into Chrome itself.

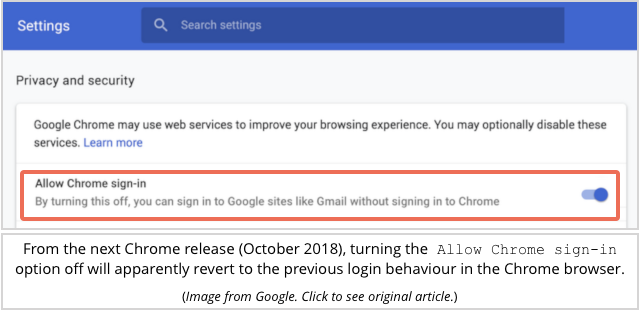

There will now be a switch you can toggle to turn autologin off, but you’ll need to go there yourself and flip said switch.

Our preference is still for opt in by default, so we’d be happier to see Google add the toggle and leave it off until you turn it on.

But we’re willing to be thankful for small mercies, and to applaud Google nevertheless for listening at least in part, and reacting quickly.
