There’s a useful sense of privacy from sitting in such a way that other people can’t see your laptop from behind.

When you’re working on your laptop facing other people, it follows that they’re looking at the back of your screen, so they can’t see exactly what you’re up to.

Whether you’re in a cafe, the library or a meeting room at work, why make it easy for everyone else to figure out your digital lifestyle?

Simply put, “Not their business.”

But what if your screen were giving away telltale signs of what you were up to anyway?

A foursome of cybersecurity researchers decided to take a look, and recently published a fascinating paper describing what they found out, and how – Synesthesia: Detecting Screen Content via Remote Acoustic Side Channels.

In fact, they didn’t so much take a look as have a listen.

Stray emissions

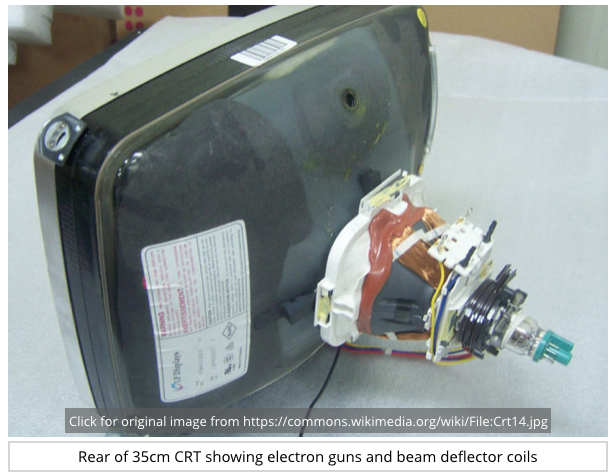

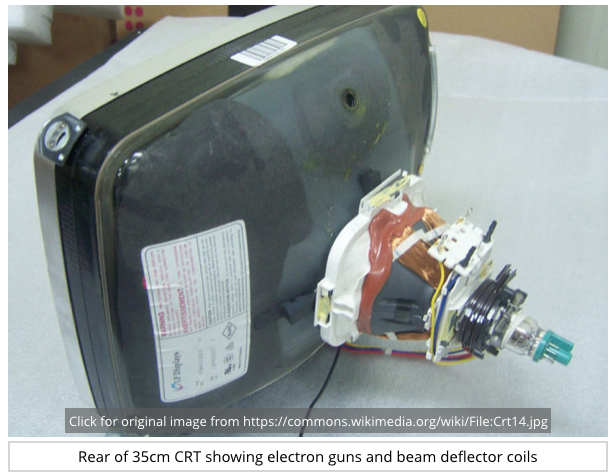

Stray electromagnetic emissions from electrical and electronic equipment have been an eavesdropper’s friend for years, especially when display screens were made using so-called CRTs, short for cathode ray tubes.

CRTs were quite literally glass “tubes” (though they were more spherical than cylindrical) covered inside with a phosphor coating that would light up briefly when struck by a beam of electrons generated by a high-voltage electrical “gun” and aimed by means of magnets.

The tube itself was sucked empty of air – as far as possible – during manufacture in order to let the electrons fly unimpeded to the screen.

That’s why the American slang word for a TV set is “the tube”; it’s where the word Tube in YouTube comes from; and it’s why old-school TVs were so jolly heavy – all that reinforced glass!

As you can imagine, firing a steady beam of electrons at a phosphor-coated glass surface and sweeping the beam left-to-right, top-to-bottom 50 or 60 times a second, produced a cocoon of ever-changing stray electromagnetic radiation that could be detected from a distance.

Back in the 1980s, a Dutch engineer called Wim van Eck showed that this stray radiation could be detected, received and decoded using inexpensive hardware, producing an eerie but legible echo of what was on display on the other side of the room, or even on the other side of a wall.

Suddenly, thanks to what became known as the van Eck effect, covert video eavesdropping wasn’t just the preserve of well-heeled nation-state adversaries with giant-sized detector vans.

Enter the LCD

Fortunately for our collective concerns about covert CRT surveillance, tube displays started to die out, replaced by screens using LCDs (liquid crystal displays), and latterly LEDs (light-emitting diodes), technologies that are especially handy for laptops.

Modern screens are flat, so they’re much more compact; don’t require high-voltage electrical coils, so they use much less power; don’t require a vacuum-proof reinforced glass tube and a bunch of permanent magnets to operate, so they’re much lighter…

…and they don’t work by flinging electrons around in ever-varying magnetic fields.

As a result, there’s a lot less stray radiation for crooks in your vicinity to collect.

The van Eck effect doesn’t work with today’s screens – or, if it does, there’s so little to go on that you can’t do the detection and decoding of stray emissions with commodity equipment that would fit in a handbag or a jacket pocket.

What about other emissions?

So, in this story, our intrepid researchers – Daniel Genkin, Mihir Pattani, Roei Schuster and Eran Tromer – decided to try sniffing out video signals in a different way: using sound.

Recent research has shown that modern microphones, even the ones in mobile phones, can pick up sounds outside the range of human hearing.

What if modern screens produce inaudibly high-pitched sound waves as they refresh the pixels on the screen?

After all, the researchers reasoned, today’s screens are still refreshed a line-at-a-time, like old CRTs, and even though they use a tiny fraction of the electron-flinging power of their tube-based counterparts, the amount of electrical energy they consume still varies depending on what’s displayed on each line.

What if those nanoscopic power fluctuations cause micrometric fluctuations in the electronic components providing the power?

And what if those tiny, rapid fluctuations produce minuscule vibrations sufficient to generate faint pressure waves – sound! – that humans can’t perceive, because it’s too high-pitched to hear, and too low-powered to register anyway?

Reading by listening

Could the researchers “read” your screen just by listening to it?

Yes! (Sort of.)

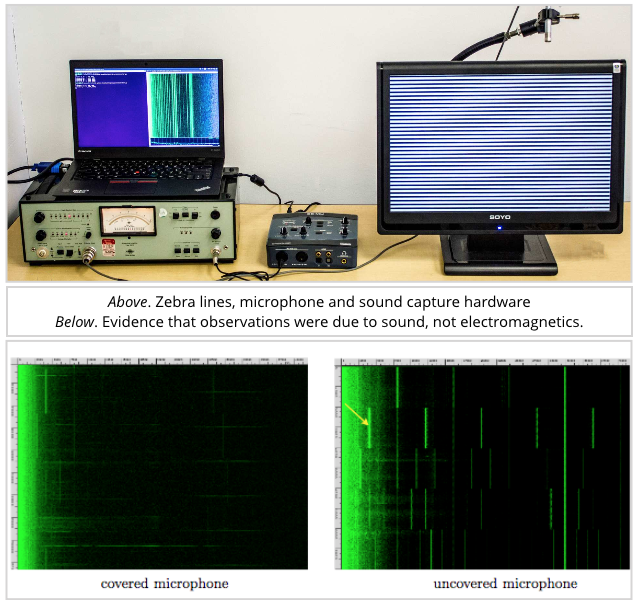

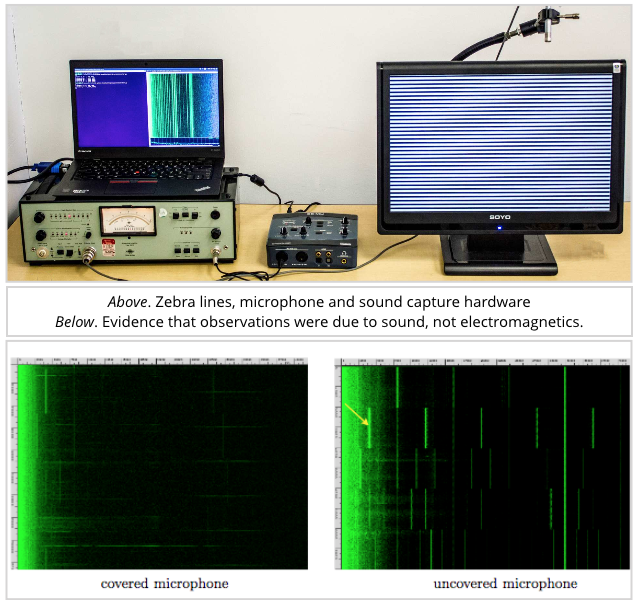

The researchers started out with images they called “zebras”, consisting of giant-sized white-and-black stripes on the screen, chosen to give them the best chance of spotting something and convincing themselves it was worth going further.
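
To get a feel for why stripes make a good test pattern, here is a minimal sketch in Python. It is not the researchers' method: it simply assumes that whatever the screen emits rises and falls with the brightness of the line currently being refreshed, ignores blanking intervals, and uses made-up numbers (240 lines per frame, a 60 Hz refresh rate, stripes 12 lines high). The function names are hypothetical. Under those assumptions, a horizontal zebra turns into a steady tone whose pitch depends on the stripe spacing.

```python
import cmath


def zebra_line_signal(lines_per_frame, stripe_height, frames):
    """Per-line brightness (0 = black, 1 = white) for horizontal stripes,
    repeated for several screen refreshes."""
    one_frame = [(row // stripe_height) % 2 for row in range(lines_per_frame)]
    return one_frame * frames


def dominant_frequency(samples, sample_rate_hz):
    """Return the strongest non-DC frequency using a plain (slow) DFT."""
    n = len(samples)
    mean = sum(samples) / n
    centered = [s - mean for s in samples]  # remove the constant offset
    best_bin, best_mag = 0, 0.0
    for k in range(1, n // 2):
        acc = sum(x * cmath.exp(-2j * cmath.pi * k * i / n)
                  for i, x in enumerate(centered))
        if abs(acc) > best_mag:
            best_bin, best_mag = k, abs(acc)
    return best_bin * sample_rate_hz / n


# Illustrative assumptions only: 240 lines per frame, refreshed 60 times a second.
LINES_PER_FRAME = 240
REFRESH_HZ = 60
line_rate_hz = LINES_PER_FRAME * REFRESH_HZ  # lines drawn per second

signal = zebra_line_signal(LINES_PER_FRAME, stripe_height=12, frames=4)
print(dominant_frequency(signal, line_rate_hz))  # 600.0
```

Each black-plus-white pair covers 24 lines, so there are 10 pairs per frame, and 10 pairs at 60 frames a second gives the 600 Hz peak. Real panels have many more lines, which pushes the equivalent tone far higher in pitch.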

Those results were promising, so they got a bit bolder: could someone across the table from you, for example, use a mobile phone to “record” your password off the screen as you typed it?

(Let’s assume that you’ve clicked the icon that reveals the actual password, not merely a string of **** characters – an option you might indeed choose if everyone else in the room can only see the back of your screen.)

Could our researchers sniff out the patterns on your screen using only audio emissions?

In two words, “Definitely maybe!”

What to do?

At the moment, this is an academic attack with little immediate practical value, so there’s not really anything you can or need to do.

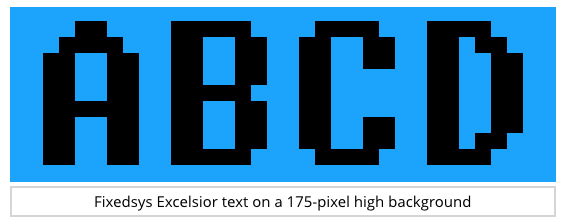

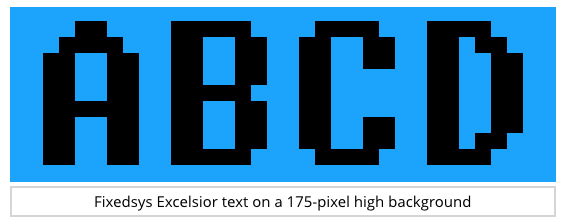

The researchers tried “reading” individual words, consisting of no more than six letters at a time on the screen, rendered in a plain, fixed-width typeface with characters 175 pixels high – not the typical font, size or layout you’d experience when reading a document or looking at a website.

Even then, their letter-by-letter success rates were as low as 75%.

But their hit rate was way better than random, so this is still worthwhile research – and it’s a fun paper to read with some cool images.

It’s also an excellent reminder about a truism in cybersecurity: attacks only ever get better.

And that’s why cybersecurity is a journey, not a destination.

Article source: http://feedproxy.google.com/~r/nakedsecurity/~3/cn9IQyHbefE/
