Why your website is officially ‘not secure’ from today

In 2017, Google’s Chrome browser started marking transactional HTTP pages, meaning those that collected passwords or credit card details, as “not secure”.

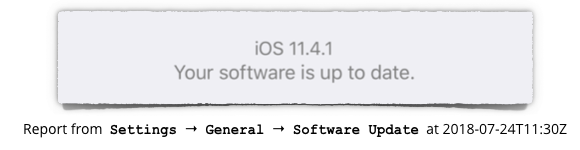

Starting 24 July – think of it as the Google Chrompocalypse – that “transactional” vs. “everything else” difference comes to a decisive end. As of Tuesday, all HTTP pages will be slapped with the “not secure” label, regardless of whether they’re transactional or not.

How important is it? In some ways, not very. The world won’t end. A lot of alarm-fatigued people will probably learn to ignore the little “not secure” message, if they ever bothered to check the address bar for it in the first place.

On the other hand, it does matter, for a few reasons. First off, it finally ushers in the much-heralded reversal of what’s considered “exceptional”: secure connections become the expected default, and insecure ones become the exception that gets flagged.

For more than a decade, the browser address bar has been the place where we all (hopefully!) looked to see whether the site we were visiting had the reassuring “Secure” padlock, letting us know that the pages we were about to view were coming to us over an encrypted connection. That padlock told us that nobody sitting between us and the site could read or tamper with the information we exchanged with it.

And, of course, the address bar also showed us the “not secure” red warning triangle. Really, with all these icons, it was getting a bit crowded up there, as we noted recently.

Chrome version 68, the stable version of which is due on Tuesday, is Google’s latest move in its ongoing effort to make encrypted (i.e., HTTPS) web connections the norm rather than the exception, and it’s one we can all welcome.

With Chrome 68, Google takes one more step toward streamlining that address bar, moving toward the point where it only informs users when a site is insecure. It gets better from here: starting with Chrome version 69, due 4 Sept., the “Secure” label will disappear from HTTPS sites, and the green padlock will turn grey.

At some point after that, the padlock will go “Poof!”, completely disappearing from the address bar, leaving it empty save for the URL. No more telling us when something is good (HTTPS). We’ll just be told when it’s bad (HTTP).

Google has been twisting arms for a while to get us here.

In 2014, the company declared that it would be giving preferential treatment to pages that use HTTPS, proclaiming that “HTTPS everywhere” would be the security priority for all web traffic. From there, Google went on to add a dash of pain to the security push by downranking plain HTTP URLs in search results in favor of ones using HTTPS wherever available.

Google announced in 2016 that it would start labelling sites offering logins or collecting credit cards without HTTPS as “not secure”, and it began doing so in January 2017.

Now, we’re moving toward a place where HTTPS is a given. But will it solve all security threats?

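Of course not. HTTPS only tells you that the connection between you and a site is encrypted; it says nothing about whether the site itself is trustworthy. There’s nothing stopping crooks from using HTTPS on scam sites or phishing sites, after all.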

We still have to be careful. We’re not putting our tinfoil hats away in the closet just yet. But we’re waving a hearty hello to Chrome 68 just the same: it’s one important stepping stone on the road to a more secure web.

Follow @LisaVaas

Follow @NakedSecurity

Article source: http://feedproxy.google.com/~r/nakedsecurity/~3/36u-1ikEmiI/