Bughunter cracks "absolute privacy" Blackphone

Serial Aussie bugfinder Mark Dowd has been at it again.

He loves to look for security flaws in interesting and important places.

This time, he turned his attention to a device that most users acquired precisely because of its security pedigree, namely the Blackphone.

Indeed, the Blackphone company isn’t shy in coming forward with its privacy pedigree, telling you proudly that:

To build a truly private product, you have to build a truly private company. This is why we established Blackphone in Switzerland, home to the world’s strictest privacy laws. And this is why we founded it with the best minds in mobile technology, security and encryption.

Their flagship product is described as “more than just smart,” because:

With the seemingly endless headlines about data loss and thefts, maintaining your privacy can feel overwhelming. That’s why we created Blackphone to be a secure starting point for your personal and proprietary communications. It combines a fortified Android operating system with a suite of apps designed to provide you with absolute privacy.

A big claim, although computer scientists are probably best advised to be careful of words like “all,” “forever,” “unbreakable” and “absolute,” unless there are mathematically sound ways to establish the precision of such statements.

The vulnerability

What Dowd found is that text messages received by a Blackphone are processed by the messaging software in an insecure way that could lead to remote code execution.

Simply put, the sender of a message can format it so that instead of being decoded and displayed safely as text, the message tricks the phone into processing and executing it as if it were a miniature program.

Dowd’s paper is a great read if you’re a programmer, because it explains the precise details of how the exploit works, which just happens to make it pretty obvious what the programmers did wrong.

That means his article can help you avoid this sort of error in your own code.

→ The details provided by Dowd amount to full disclosure, although he hasn’t included a proof-of-concept that would allow you to start exploiting the hole at will. He made the disclosure only after Blackphone had published a patch. Indeed, he publicly praised Blackphone on Twitter for the way it dealt with his bug report.

What went wrong

If you (and Mark Dowd) will excuse me, I’ll try an analogy to describe the sort of bug that he found in a way that non-programmers can understand.

Imagine that you go into your bank to change your address.

This is an important transaction, because it has a knock-on effect, namely that future statements and notifications will go somewhere else, and a crook who controls your address effectively controls your account.

Imagine that the bank official takes a generic customer transaction form from a pigeon hole of blank forms, ticks a box at the top to denote the transaction type (change of address), and writes your address into a series of boxes.

He then formally verifies your address, for example by checking it against a recent municipal account and a library membership card, and stamps the form to make it official.

That form is later used to commit the verified-and-approved change into the bank’s database.

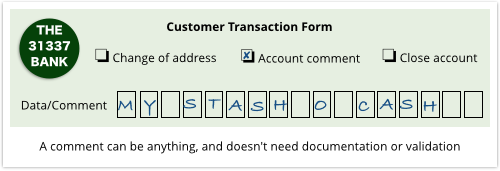

Now imagine that you come back and say that you want to add a comment to your account – my bank lets you do this, so that on internet statements the account shows up with a personalised moniker such as MY STASH O CASH instead of the generic words SAVINGS ACCOUNT.

To save time and paper, the official takes the previous form, rubs out the tick-box saying “change of address” and ticks “account comment” instead.

Then he rubs out your address, and writes in the comment, say, MY STASH O CASH, in its place.

Comments like this don’t need any special verification, so he accepts whatever you tell him, and the data is then committed into the database.

What happens next

You can guess where this is going.

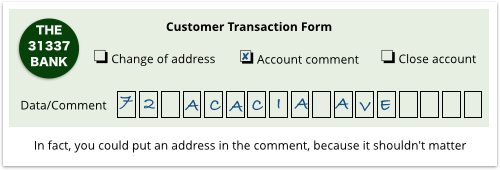

Instead of adding the comment MY STASH O CASH, you tell the bank you want a comment field that just happens to be 72 ACACIA AVE.

(That’s the crooked address you really wanted to use in the first place, but couldn’t get the bank to accept because you don’t have any documentation for it.)

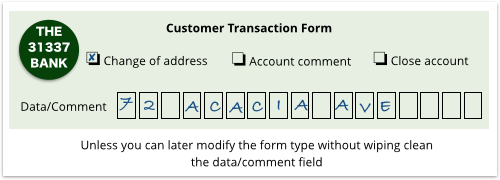

That sets the scene for your third transaction, another change of address.

The official takes the very same form, changes the tick-box from “account comment” to “change of address”…

…and at that point, you walk out of the bank.

So, by subterfuge, you have caused the bank to generate a change of address form that contains an unverified address, because it’s actually just incomplete data left over from the previous transaction.

What to do?

Clearly, the right procedure in the bank is for forms to be destroyed after use, or at the very least for forms to be completely erased before they are recycled.

That would prevent the tick-box that denotes how the data is to be treated being changed independently of the data to which it refers.

Do the same thing when you are programming:

- Allocate memory.

- Use it.

- Erase it.

- Free it.
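
As a rough illustration, the four steps above might look like this in C. This is only a sketch: `handle_message` and `wipe` are hypothetical names invented for the example, not anything from the Blackphone code, and the wipe goes through a `volatile` pointer on the assumption that a plain `memset` just before `free` could be optimised away by the compiler.

```c
#include <stdlib.h>
#include <string.h>

/* Overwrite a buffer through a volatile pointer so the compiler
   cannot drop the wipe as a "dead store". (Hypothetical helper.) */
static void wipe(void *buf, size_t len)
{
    volatile unsigned char *p = buf;
    while (len--) {
        *p++ = 0;
    }
}

/* Hypothetical handler: copies a message into a private buffer,
   works on it, then erases and frees the buffer.
   Returns the number of characters handled, or -1 on failure. */
long handle_message(const char *msg)
{
    size_t len = strlen(msg) + 1;

    char *buf = malloc(len);            /* 1. allocate */
    if (buf == NULL) {
        return -1;
    }

    memcpy(buf, msg, len);              /* 2. use */
    long handled = (long)strlen(buf);

    wipe(buf, len);                     /* 3. erase */
    free(buf);                          /* 4. free */
    return handled;
}
```

Each call gets its own fresh buffer, so nothing left over from one message can be mistaken for part of the next.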

Continually allocating and freeing up memory blocks does make a program less efficient, especially if there are lots of small chunks of memory you need only briefly.

So it’s much more tempting to allocate a one-size-fits-all memory block at the start of a chunk of code, to keep re-using it as needed while your code is running, and then to free it up (i.e. hand it back to the operating system) only at the very end.

But the extra cost of allocating and freeing memory every time you need it is balanced by the fact that:

- The operating system can overwrite each block of memory before and after use, which stops data from one part of your program leaking into another. (Your “account comment” transaction type can’t accidentally be confused with a “change of address” type.)

- The operating system can add randomness into the allocation strategy, making it much harder for an attacker to work out what data can be found where, and thus generally making exploits harder to pull off.

- You only ever allocate and use as much memory as you need at any moment. So, despite all the extra memory management going on, your program may end up more efficient anyway.

Coding endnotes

The offending data structure in the Silent Circle code is this:

    typedef struct SCimpMsg {
        SCimpMsgPtr     next;
        SCimpMsg_Type   msgType;
        void*           userRef;

        union {
            SCimpMsg_Commit    commit;
            SCimpMsg_DH1       dh1;
            SCimpMsg_DH2       dh2;
            SCimpMsg_Confirm   confirm;
            SCimpMsg_Data      data;
            SCimpMsg_ClearTxt  clearTxt;
        };
    } SCimpMsg;

If you know C, you’ll know that a union is a data structure that can be interpreted as any of the items inside it, but only as one of them at a time.

That’s because the compiler doesn’t allocate space for one of each of the objects (a commit, a dh1, a dh2 and so on), but only for the largest of them.
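
To see the shared storage for yourself, here is a tiny sketch using a made-up union called `Payload` (not one of the Silent Circle types). Both members start at offset zero, and the union is at least as big as its largest member.

```c
#include <stddef.h>

/* Hypothetical union for illustration: two members, one storage area. */
typedef union {
    int  number;        /* small member */
    char text[64];      /* largest member sets the union's size */
} Payload;

/* Size of the whole union, as chosen by the compiler. */
size_t payload_size(void)
{
    return sizeof(Payload);
}

/* Offsets of the two members; both are expected to be zero. */
size_t number_offset(void) { return offsetof(Payload, number); }
size_t text_offset(void)   { return offsetof(Payload, text); }
```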

You use a variable outside the union, known as a type tag, to remember which sort of data you’re storing in there at any time.

In this case, the tag is msgType.
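
Here is a deliberately simplified sketch of how a tag and its data can get out of step, using the bank form from the analogy rather than the real SCimp structures. The names `Form`, `set_comment` and `retag_as_address_unsafely` are invented for the example, and the bug is there on purpose.

```c
#include <string.h>

typedef enum { MSG_COMMENT, MSG_ADDRESS } MsgType;

/* Hypothetical recycled "form": one tag, one shared storage area. */
typedef struct {
    MsgType type;               /* the type tag */
    union {
        char comment[32];       /* unverified free text */
        char address[32];       /* meant to hold verified data only */
    } body;
} Form;

/* Store an unverified comment in the form. */
void set_comment(Form *f, const char *text)
{
    f->type = MSG_COMMENT;
    strncpy(f->body.comment, text, sizeof f->body.comment - 1);
    f->body.comment[sizeof f->body.comment - 1] = '\0';
}

/* BUG (deliberate): flips the tag but leaves the old bytes in place,
   so the unverified comment is now treated as an address. */
void retag_as_address_unsafely(Form *f)
{
    f->type = MSG_ADDRESS;
}
```

After `set_comment(&f, "72 ACACIA AVE")` followed by `retag_as_address_unsafely(&f)`, any code that trusts the tag will read the crook's text out of `f.body.address`.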

Never change the msgType without also overwriting the data that it describes.

That way, you can never get a type confusion error.

Or, at least, (because I pointed out at the start that you should never say never) you are very much less likely ever to get a type confusion error.
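
One way to make that rule hard to break is to funnel every tag change through a single function that wipes the union first. The sketch below assumes a cut-down, hypothetical `Record` type and a helper named `record_set_type`; it is not the fix that Blackphone shipped, just an illustration of the idea.

```c
#include <string.h>

typedef enum { REC_NONE, REC_COMMENT, REC_ADDRESS } RecType;

/* Hypothetical tagged union, much smaller than the real SCimpMsg. */
typedef struct {
    RecType type;               /* the type tag */
    union {
        char comment[32];
        char address[32];
    } body;
} Record;

/* The only function allowed to change the tag.
   It erases the old payload before the new tag takes effect,
   so stale data can never be read under the wrong type. */
void record_set_type(Record *r, RecType new_type)
{
    memset(&r->body, 0, sizeof r->body);
    r->type = new_type;
}
```

With this in place, switching a record from `REC_COMMENT` to `REC_ADDRESS` leaves an empty address rather than the leftover comment.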

Article source: http://feedproxy.google.com/~r/nakedsecurity/~3/2mFVv8lg0DM/