A brand-thieving email scam that first showed up in January 2019 has resurfaced…

…this time on Facebook.

We received this one from Naked Security reader Rajan Sanhotra, who urged us to warn other people, given the high-profile names and brands that were fraudulently exploited in the scam.

The stolen images and logos used in the attack make what a marketing expert would call an enticing “call to action”, with no SHOUTING CAPITAL LETTERS, no obvious misspellings (other than the word “I” written in lower case), no grammatical errors, and no REPEATED EXCLAMATION POINTS!!!

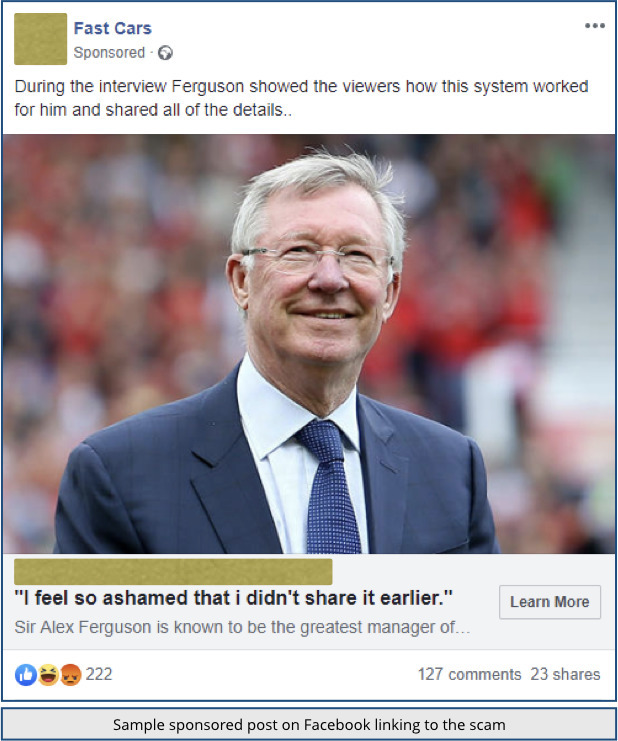

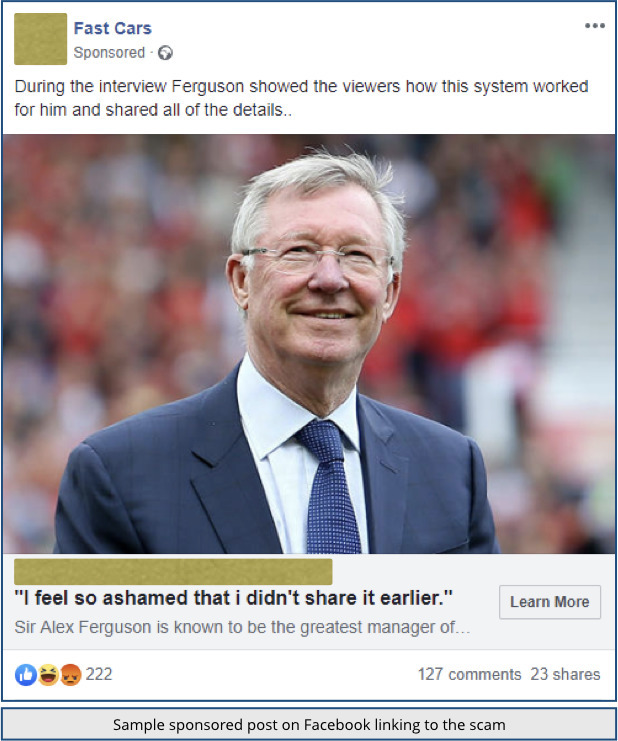

Instead of the old-school giveaways, you’ll see an unexceptionable-looking sponsored post on your Facebook timeline, like this:

Even if you’re not from Europe, or not interested in sport, the article looks both harmless and at least vaguely interesting, featuring as it does the world-famous football manager Sir Alex Ferguson.

Sir Alex is arguably the best sports team manager ever: the winner of the most football trophies, a Knight Bachelor of the United Kingdom, and still well-known and globally recognisable several years into retirement. Clicking through to see what he is up to at the moment seems innocent and harmless enough.

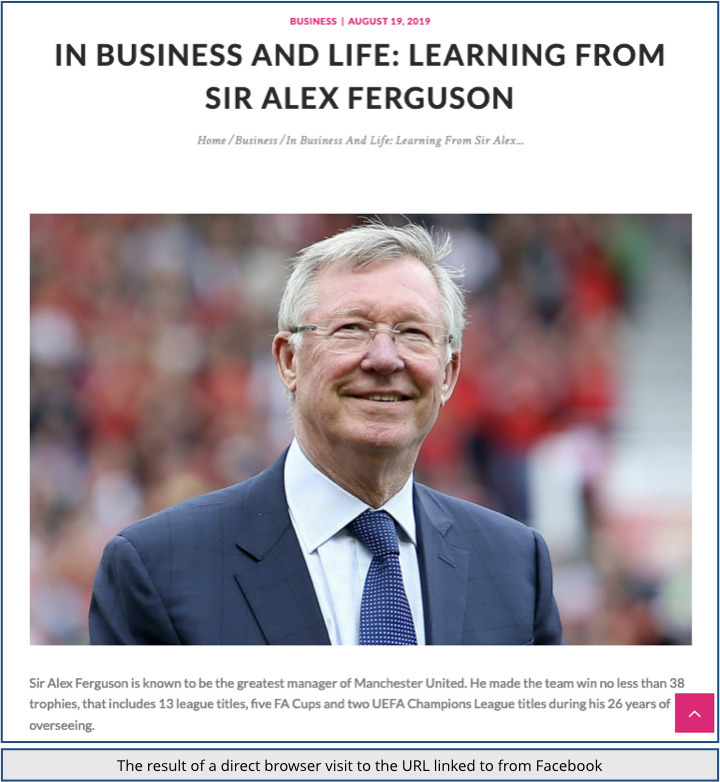

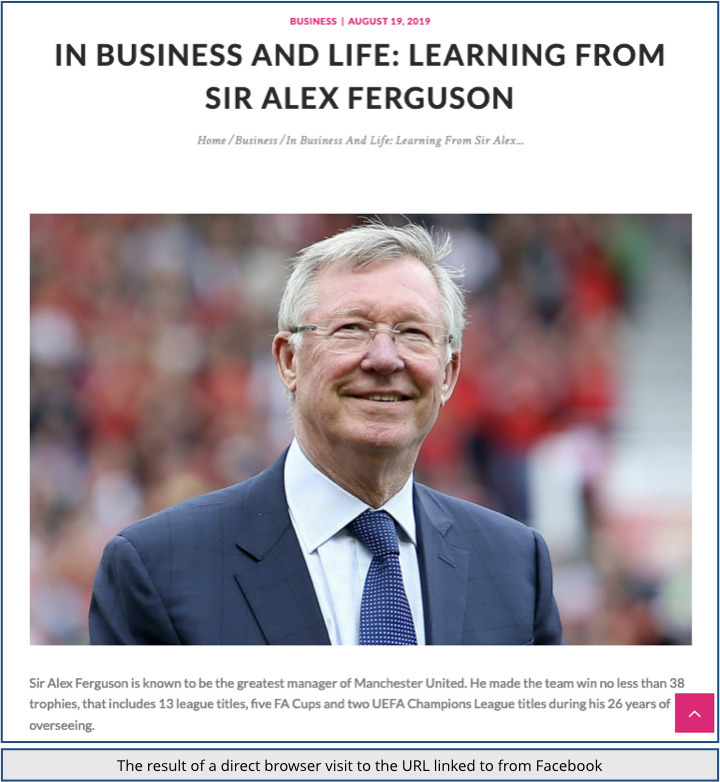

And harmless it was, when we visited the link given in the Facebook post by copying it directly into the address bar of our browser, rather than clicking through from Facebook.

Disappointing, perhaps; dull, yes; but directly harmful or obviously scammy?

No.

The site we visited claimed to be a blog offering free tips to enhance your relationship, wasn’t directly selling anything, had a moderately professional look, had an HTTPS certificate, as you would expect, and had subject matter that matched the ad that brought us to it.

All in all, the site gave off an unexceptionable and ‘mostly harmless’ feel.

In truth, the site quickly revealed itself to be both narrow (only seven articles on the entire site) and shallow (the articles were all short and uninformatively basic, and none of the contact details gave anything away)…

…but even though you’d be very unlikely to recommend the website to anyone else, there was nevertheless nothing that immediately screamed, “Beware! Report this page to a scamwatch site! Warn your friends about this page! Get out of here now and run a virus check for safety’s sake!”

Likewise, a search engine visiting the site would see nothing obviously bad: no malware; no aggressive ads; no popover password dialogs; no autoplaying videos; no wild inaccuracies or falsehoods.

You could be forgiven for letting this one go by, even if you were carefully scanning the internet looking for cybercriminality.

Simply put, it looked like a small-time, average-quality, say-no-more website that was effectively hiding in plain sight – in one word, “Meh.”

But when our unlucky reader clicked the same link from the Sponsored post in Facebook, they got a very different result:

This time, the page was a fairly convincing rip-off of the BBC website, with an article featuring a picture of Sir Alex, apparently taken at an event or a post-match wrap-up, but with the background doctored to make it look as if he were addressing a Bitcoin conference.

The page claims to describe an episode of the venerable BBC TV programme Panorama entitled “Who wants to be a Bitcoin millionaire?”, offering a sidebar where the “live” episode can be viewed.

Ironically, Panorama did produce an investigative documentary with that very title back in 2018, but the BBC didn’t promote its programme with the tagline “this is one train not to be missed,” as the imposter site does.

Our reader was, of course, offered an easy way to join the Bitcoin revolution – below the illegally modified photo of Sir Alex Ferguson, below the stolen BBC identity, and below the bogus claims that Sir Alex “revealed today” that he’s amassed a Bitcoin fortune of his own, was a signup box.

By investing just $193 to open an account with a cryptocoin business, our reader would be joining a money-making rollercoaster that only ever went up.

(We invented that non-downhill rollercoaster metaphor ourselves, but you get the idea: pay in a modest amount of money now, before it’s too late, and you too will be wealthy, just like Sir Alex Ferguson – though without needing to put in any of the time and effort that he did, or to possess any of the unparalleled skill and ability that made him famous and successful.)

What to do?

You’d probably back yourself to spot this sort of scam any day of the week if it arrived in an old-fashioned phishing email.

Most of us have experienced so much spam over the years that we’re well-tuned to detect emails that don’t belong.

And that’s one reason that the crooks love social media – by using a sponsored post, or by posting from a compromised account of someone we know, they can bypass our “spamtennae” and suck us into scams that we’d otherwise avoid with ease.

- Think before you click. The crooks don’t always get their ducks in a row. Is it really likely that an account devoted to Fast Cars would sponsor a post linking to a story about a famous footballer?

- Don’t be tricked by logos and images. Creating a website that looks like an official BBC page isn’t technically difficult, because all the needed logos and web content can be stolen from the real site and republished easily. Fraudulently altering the background of a picture convincingly enough for a website image can be done with free tools.

- No one can guarantee you financial returns on cryptocurrency. If you really want to buy into the Bitcoin scene, do your homework, and pick a cryptocurrency exchange based on your own research. Take your time – investment opportunities that put you under time pressure are aiming to get you to make a hasty decision.

- If it sounds too good to be true, it is. Enough said.

While we’re here, please be a responsible social networker, too: don’t forward things to your friends if you aren’t sure of them yourself, even if it feels like a fun thing to do – that’s how fake news and internet hoaxes get a foothold.

Article source: http://feedproxy.google.com/~r/nakedsecurity/~3/WGY1bNlnPAA/