What Has Cybersecurity Pros So Stressed — And Why It’s Everyone’s Problem

I often find myself at industry events and meetings with colleagues engaging in casual chitchat about the current work environment and the challenges we as information-security professionals are currently facing. We typically share sighs of empathy as we relate common stories of how our weekends were nonexistent due to responding to a Priority 1 event. To make matters worse, we have continuing professional education credits that are due by the end of the month for one of our many expensive certifications, and we also need to walk our dogs and find time to cut the grass.

I often ask, why do we do this to ourselves? The hours are often brutal, the service is often thankless, the cyberattacks never seem to stop, and the strategies seem to be dated — all leading to a physically and mentally taxing game of whack-a-mole. As cyberattacks intensify and the skills gap broadens, it’s hard not to wonder how much more infosec pros can take before throwing in the towel. Indeed, many of my colleagues are beginning to question whether the time and energy they are investing in developing their professional skill sets is netting them a positive ROI in the department of personal well-being.

It comes down to this: A mass exodus of overworked infosec professionals is a very real threat if we, as a community, don’t take a closer look at the multitiered problems that are creating an environment ripe for job turnover and employee dissatisfaction.

What’s Going On?

According to a 2018 study published by ISC(2), more than 84% of cybersecurity professionals said they were either open to new job opportunities or already planned on pursuing a new opportunity that year. Close to half (49%) said salary was not the main reason for their sentiment. Rather, 63% of respondents said they wanted to work at an organization where their opinions on the existing security posture were taken seriously.

The fact that more than three-quarters of the industry is willing to jump ship at any given time, or at least has given thought to the idea, should be setting off alarm bells, especially given the number of job vacancies marketwide. That shortage does not seem to be getting better anytime soon: In a recent study conducted by ESG, 53% of companies reported a problematic shortage in cybersecurity skills.

I wonder, though: Is there truly a skills gap, or are other factors at play that give cybersecurity professionals their pick of the 2.93 million security jobs ISC(2) calculates are open (or at least needed) across the globe?

As a cybersecurity architect who has consulted for a number of different businesses across multiple sectors, I have noticed many common refrains among security and IT operations teams: “Our network security specialist just left for company B last week,” “I just took over the security program a month ago,” “We had a security director who was working on a maturity plan, but she was offered a position for $50K more than what she makes now,” and the infamous, “We hired a new security engineer, but he didn’t show up on the first day because he ended up taking another offer.” Given the time it takes to recruit, interview, screen, onboard, and train a new employee, one can see how this can be problematic for any business. But for a hyperfocused, specialized industry such as cybersecurity that is already experiencing a labor shortage, it could be detrimental.

What’s happening? There’s no single answer. It seems to be a perfect storm of a competitive job landscape, the cost and scarcity of continuing education programs aimed at cybersecurity, and employee dissatisfaction with their companies’ stance on information security, all leading to resentment and, in extreme cases, job burnout.

Frustration Factors

Compensation in an extremely competitive market can be a driver of turnover in itself. According to recent data from tech staffing agency Mondo, salaries ranged from $120,000 to $185,000 for an information security manager and from $175,000 to $275,000 for a chief information security officer.

Yet, while these numbers sound great, salary is far from the sole indicator of job satisfaction. Feelings of dissatisfaction can arise when job expectations become unclear and an employee who may have been hired as a response analyst, for example, now finds himself wearing the hat of an integrations engineer, threat researcher, and fire watch captain.

Exacerbating the situation, many companies lack the personnel to fill critical security roles, which places a heavier demand on existing staff, often resulting in frustration, burnout, and overall job dissatisfaction. With close to 3 million well-paying infosec positions open and more vacancies expected as demand grows, it is extremely easy for jaded employees to begin looking elsewhere, particularly when they have a highly in-demand skill set.

Speaking of skills, the demand for professionals to stay up to date with their training and education is driving many of them to look for higher-paying positions, given the increasingly hefty price tags that accompany higher education and professional certifications. Often the onus of obtaining the certifications needed for specific positions falls at the feet of employees, which is another reason for their frustration, because we all know the cost of education continues to escalate.

In an industry where retraining and constant learning are at the core, it is easy to see how this can be a major stressor on the average infosec professional. Also of note, many universities are struggling to keep up with a curriculum that may change within a matter of months and often are lacking the resources to hire the proper personnel to educate future students.

Findings pertaining to the mental and physical health of cybersecurity professionals are also alarming. According to research conducted by Nominet, 25% of CISOs in the US and UK suffer from mental and/or physical stress, with 20% turning to alcohol or drugs as a coping mechanism. Stressors included fear of compromise, insufficient budget to protect company assets, and concerns about visibility and proactively spotting new threats within their organizations.

This fatigue is not being felt only in the executive suite. In a worldwide study of 267 cybersecurity professionals conducted by ISSA and ESG, 40% reported their No. 1 stressor was keeping up with the needs of new IT initiatives. Coming in at a close second, cited by 39%, was finding out about IT initiatives that were started by other teams within their organizations with no security oversight.

Call to Arms

I am not the biggest fan of clichés, but as the saying goes, “This is an everyone problem.” Retaining and developing talent go hand in hand with creating a mature, robust security posture. Thwarting employee frustration and turnover starts with properly equipping security personnel to do their jobs, whether through financial support for continuing education, a culture of security awareness and criticality in the senior executive suite, investment in more personnel, a more defined onboarding process, clearer career paths, or mental health services for individuals who may need them. For many infosec professionals, work becomes a personal mission, often a very thankless one that is invisible to the companies they serve.

Now it is up to organizations to adopt a motto that we as cybersecurity professionals live by: “The goal is simple: Protect the human and their well-being at all costs.”

Note: These are the personal views of Kevin Coston and not necessarily those of his employer.

(Image: pathdoc via Adobe Stock)


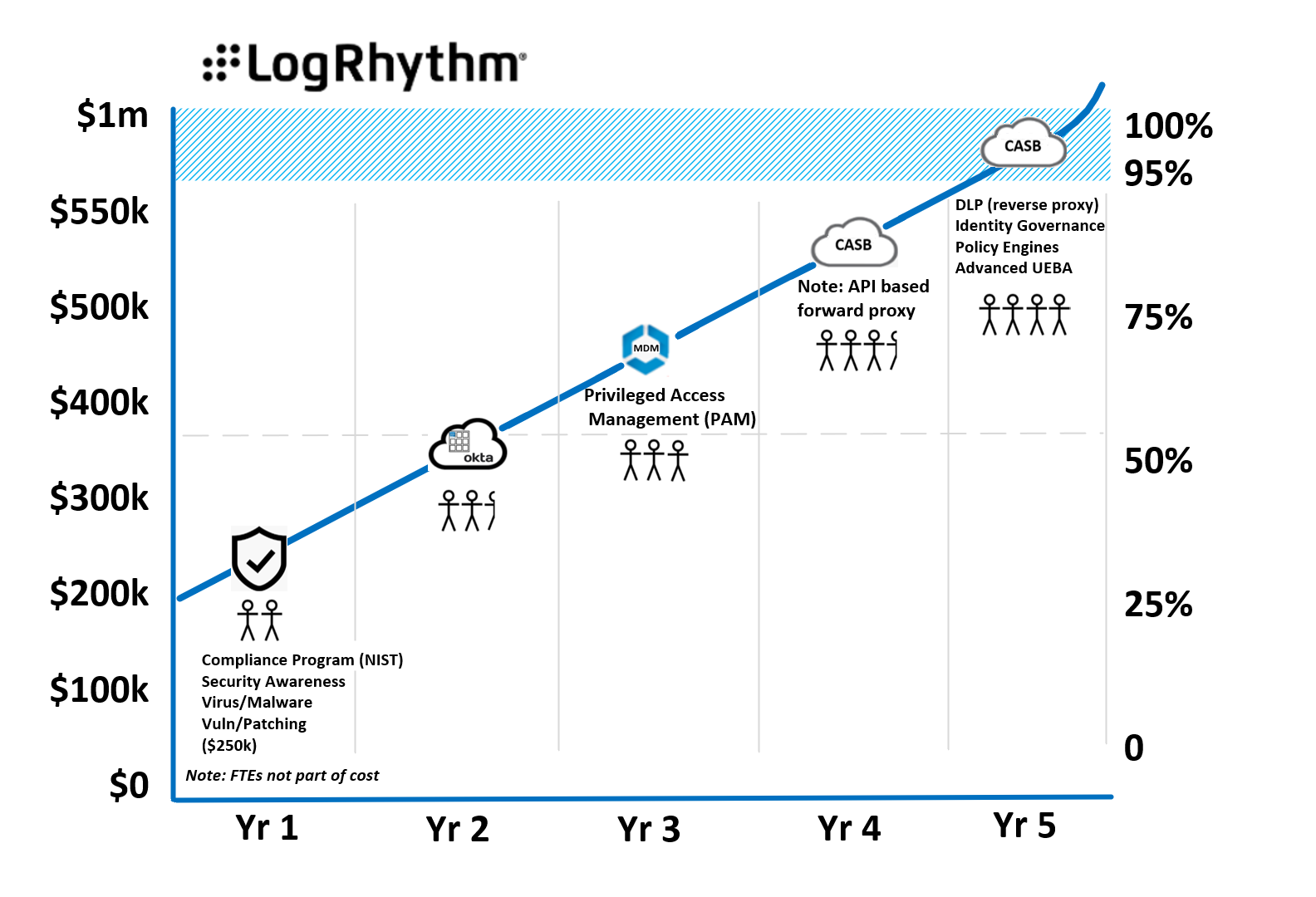

This free, all-day online conference offers a look at the latest tools, strategies, and best practices for protecting your organization’s most sensitive data. Click for more information and, to register, here.

Kevin Coston is a cloud security architect at Akamai Technologies. He currently resides in Denver, Colorado, with his fiancé and three dogs. While not conducting security research or consulting with some of the world’s largest corporations, Coston enjoys spending his …
