With New SOL4CE Lab, Purdue U. and DoE Set Sights on National Security

The field of cybersecurity has evolved far past its original roots as a discipline focused exclusively on protecting computer systems. As just about anyone who works in the industry knows, a career in cybersecurity today requires knowledge about physical systems, human behavior, and business strategy, as well as many other elements beyond simply bits and bytes.

The work at Purdue University’s Center for Education and Research in Information Assurance and Security (CERIAS) is a true example of the scale and breadth of security research in 2020, says managing director Joel Rasmus.

“CERIAS is a collection of 135 faculty at Purdue, and they come from 18 academic departments across eight colleges. It’s across multiple departments that are both deeply technical as well as behavioral,” Rasmus explains. “We don’t just say we are interdisciplinary; we have embraced it. On some projects, for example, we have had psychologists involved to identify insider threats and social engineering.”

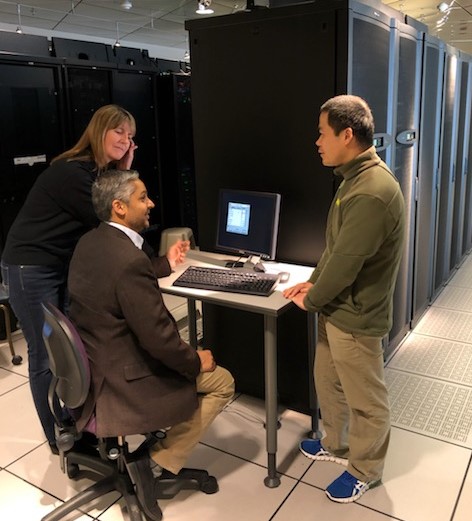

CERIAS now hopes to take those efforts to educate students on security in a new direction that could impact national security efforts. The new Scalable Open Laboratory for Cyber Experimentation (SOL4CE), launched last month, is a collaboration between CERIAS and the US Department of Energy’s Sandia National Laboratories. While the two have had a relationship for a decade, the lab now gives them the opportunity to increase both the speed and impact of their research efforts.

“It comes down to a foundation for new innovation,” says Kamlesh Patel, manager of Purdue partnerships at Sandia National Laboratories, “beginning with getting the professors who are on the leading edge of these areas and have them focus on problems and cutting-edge ways to solve them.”

Building a Pipeline of Talent

SOL4CE will also be a resource to faculty and students for quick modeling and simulation of cyber and cyber-physical systems — a critical component as the security industry struggles to fill open positions, Rasmus notes.

“There is a shortage of good cybersecurity talent out there, to the extent that by the time our students are putting together resumes, they already have job offers,” he says. “Sandia wanted to become involved in what we are doing on campus.”

To that end, Sandia sponsors cybersecurity competitions to raise awareness of the work involved in the field, and it facilitates internships and externships.

“Much of what we are working on here are critical skills areas in security. Computer science, data science, AI – these are all emerging fields that are cross-cutting to national security problems,” Patel says. “But there is a lack of talent, and we need to be thinking about our future workforce.”

As part of the efforts to develop a pipeline of talent from the collaboration, Patel has taken residence at Purdue. He says he’s “on loan,” so to speak, from Sandia in order to absorb the academic culture.

“It builds bridges and cultures in a meaningful way,” he says.

More Than Just a Sandbox

Rasmus says Patel’s presence is also key for one of the other goals of the lab: to speed up impactful research on national security. Because Sandia is always engaged in work on security tools for national security, the collaborative lab means taking some of those ideas into the public domain so students and faculty get exposure to them.

“It’s a wide-open program that Sandia has put here that allows us to say, ‘Let us take this tool and see what else it can solve and how we can help make the nation safer,’” Rasmus says.

Some of the lab’s initial research includes looking at airline system vulnerabilities due to their level of interconnection. The lab is also working on research into the potential for various attacks at US nuclear power plants. They’ve coined the term “emulytics,” which is a combination of the words “analytics” and “emulating,” to describe the lab’s capabilities. The lab, Rasmus says, runs real scenarios and aims to extract data that can be used to improve and protect real-world systems.

“It’s not just, ‘Let’s build a virtual machine and see what happens,’” he says. “It’s a much greater tool than just a playground.”


Joan Goodchild is a veteran journalist, editor, and writer who has been covering security for more than a decade. She has written for several publications and previously served as editor-in-chief for CSO Online.
