5 Measures to Harden Election Technology

Part 2 of a two-part series.

The Iowa caucus isn’t the first time that election technology failed spectacularly. As the New York Times reported, a November 2019 election in Northampton County, Pennsylvania, made history by being so lopsided that nobody believed the results. The actual winner (after a count of the paper ballots) was initially credited with just 164 out of 55,000-odd votes in the electronic tally. It’s still unclear whether the cause was a defect in voting hardware or software, or the result of a hack.

In Part 1 of this series, we looked at common vulnerabilities of voting machines, scanners, and the overall voting system. In Part 2, we examine five concrete measures to make our election technology a harder target.

Measure 1: Use single-purpose systems. Less complexity means better security. Voting machines should be purpose-built, capable of filling out ballots, but nothing else. They should support two key functions: voting, and secure device management. They should employ a secure boot process, either loading an OS and voting application or loading an environment that allows secure, verified updates. All commercial off-the-shelf operating systems and software should be locked down, to prevent access to physical interfaces (e.g., USB), network connections, and other interfaces.

Measure 2: Build in defense-in-depth. Manufacturers — of all endpoints, not just voting devices — now recognize that redundancy and multiple layers of security are needed. So-called defense in depth helps make security infrastructure much more difficult to attack because it removes single points of failure.

Measure 3: Limit privileges. Minimizing privileges is a critical, often overlooked security tool. The principle applies to everyone who touches the system: system users, software developers, and hardware vendors. Election officials should be able to verify the entire system and ensure that no vendors, employees, or contractors can subvert elections.

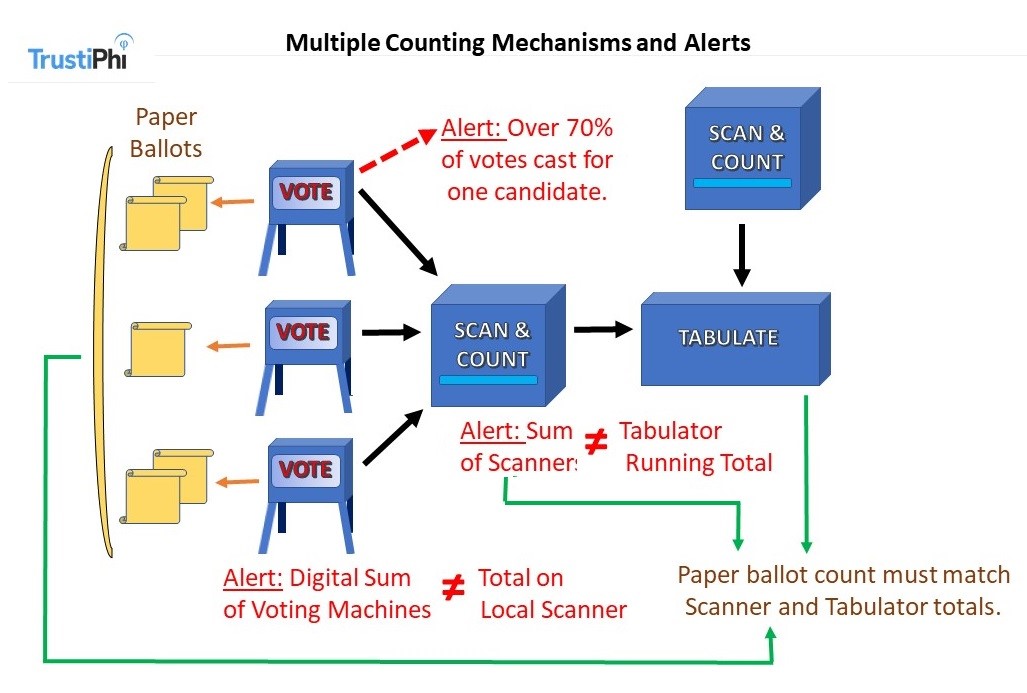

Measure 4: Use multiple counting systems and cross-checks. Election officials and voters need multiple ways to verify the election. Election equipment should provide both digital audit trails and a physical, human-verifiable paper ballot. Suppose, for instance, that each voting machine reports its own vote total, each voter is given a paper ballot to check before submitting it to the tallying system, and the tallying system reports the totals from each voting machine. The election administrator could then compare three independent pieces of data (from the physical ballots, the voting machine, and the tally system). Having the voter double-check the physical ballot helps to ensure the votes are counted as the voters intended.
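The three-way comparison can be sketched in a few lines of Python. This is a minimal illustration, not any vendor's audit procedure: the function name `cross_check`, the candidate labels, and the vote counts are all hypothetical.

```python
from collections import Counter


def cross_check(paper_counts, machine_counts, tally_counts):
    """Return candidates whose totals disagree across the three sources.

    Each argument maps a candidate name to a vote count. The result maps
    every disputed candidate to the totals each source reported.
    """
    sources = {
        "paper": Counter(paper_counts),
        "machine": Counter(machine_counts),
        "tally": Counter(tally_counts),
    }
    # Consider every candidate named by at least one source.
    candidates = set().union(*sources.values())
    mismatches = {}
    for candidate in sorted(candidates):
        # A Counter returns 0 for a candidate it has never seen.
        totals = {name: counts[candidate] for name, counts in sources.items()}
        if len(set(totals.values())) > 1:
            mismatches[candidate] = totals
    return mismatches


# Hypothetical precinct data: the tally system disagrees on Candidate A.
paper = {"Candidate A": 120, "Candidate B": 95}
machine = {"Candidate A": 120, "Candidate B": 95}
tally = {"Candidate A": 3, "Candidate B": 95}
print(cross_check(paper, machine, tally))
```

Any non-empty result would be a signal to fall back on the paper ballots, which remain the human-verifiable record.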

Measure 5: Layer security measures for election devices. To achieve secure voting, layer in security against tampering, rogue software, and devices that could insert fake voting results. Require clearly printed paper ballots and ask every voter to check their ballot carefully before scanning its code. These measures are not foolproof, but they’re difficult to hack through.

Election Hardware Security Basics

Strong hardware-based security in election machines, built on four foundational capabilities, should underpin the measures described above.

- Authentication

- Authorization

- Attestation

- Resiliency

The good news: These requirements for secure interdevice communication apply generally to all connected devices — and technologies exist now to provide these capabilities.

Authentication: Are you the device you claim to be?

Each election device should provide strong (cryptographic) evidence to confirm its identity as the correct source of its data. Any machine providing critical data such as ballot designs, completed ballots, or tabulated results should be authenticated to verify it is not an imposter.
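As a rough sketch of what cryptographic evidence can look like, the Python below tags each message with a keyed hash (HMAC) that the receiver recomputes. This is an assumption made for a self-contained example: a real deployment would more likely use asymmetric signatures with private keys held in secure hardware. The device ID, the key, and the names `DEVICE_KEYS`, `sign`, and `is_authentic` are hypothetical.

```python
import hashlib
import hmac

# Hypothetical key table. In practice each key would be provisioned into
# tamper-resistant hardware, never stored in source code.
DEVICE_KEYS = {"scanner-07": b"demo-key-not-for-production"}


def sign(device_id, payload, key):
    """Compute an HMAC-SHA256 tag binding the payload to the device ID."""
    message = device_id.encode() + b"|" + payload
    return hmac.new(key, message, hashlib.sha256).digest()


def is_authentic(device_id, payload, tag):
    """Accept the payload only if a known device's key produced the tag."""
    key = DEVICE_KEYS.get(device_id)
    if key is None:
        return False  # unknown device: treat as an imposter
    expected = sign(device_id, payload, key)
    # Constant-time comparison avoids leaking how many bytes matched.
    return hmac.compare_digest(expected, tag)


results = b"precinct 12 tabulated results"
tag = sign("scanner-07", results, DEVICE_KEYS["scanner-07"])
print(is_authentic("scanner-07", results, tag))            # genuine message
print(is_authentic("scanner-07", results + b" (edited)", tag))  # altered message
```

The same check rejects both an unknown sender and a known sender whose data was altered in transit.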

Authorization: Does your device have privileges to talk to me?

Only authorized users should be permitted to manage election equipment — that’s a given. In addition, each device should have a defined role in the overall system. A currently authorized voting machine generally is allowed to provide data to a scanner, but only at the same physical polling place. The central tabulator should accept data from scanners but not directly from voting machines.
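The role rules in this paragraph can be written down as an explicit policy check. The sketch below is one possible encoding in Python, not a description of any real election system; the `Device` fields, the role names, and the `may_send` function are assumptions introduced for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Device:
    device_id: str
    role: str           # "voting_machine", "scanner", or "tabulator"
    polling_place: str  # hypothetical location label
    authorized: bool = True


# Permitted (sender role, receiver role) pairs. Voting machines may not
# send directly to the tabulator, so that pair is deliberately absent.
ALLOWED_FLOWS = {("voting_machine", "scanner"), ("scanner", "tabulator")}


def may_send(sender, receiver):
    """Decide whether the sender may provide data to the receiver."""
    if not (sender.authorized and receiver.authorized):
        return False
    if (sender.role, receiver.role) not in ALLOWED_FLOWS:
        return False
    # A voting machine may only feed a scanner at the same polling place.
    if receiver.role == "scanner":
        return sender.polling_place == receiver.polling_place
    return True


machine = Device("vm-1", "voting_machine", "precinct-12")
scanner = Device("sc-1", "scanner", "precinct-12")
tabulator = Device("tab-1", "tabulator", "central")
print(may_send(machine, scanner))    # same polling place
print(may_send(machine, tabulator))  # skips the scanner
```

Listing the allowed flows, and denying everything else by default, keeps the policy short enough for election officials to review.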

Attestation: How do I know you are not compromised?

Attestation of device integrity is a verification that the sending device has not been compromised. If an election machine has been hacked at the hardware level, or targeted with malware, it should “turn itself in” — or be unable to attest that it is still safe to use.
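One common way to approach attestation is to hash each software component as it loads and compare the combined measurement with a known-good value. The Python below is a simplified sketch loosely modeled on that idea. It omits the hardware root of trust and the signed, fresh quote that a real scheme would need, and the component contents and function names are hypothetical.

```python
import hashlib
import hmac


def measure(components):
    """Fold a sequence of component images into one hex measurement.

    Each step hashes the running value together with the hash of the next
    component, so changing, reordering, or adding a component changes the
    final result.
    """
    register = bytes(32)  # starts as 32 zero bytes
    for blob in components:
        component_hash = hashlib.sha256(blob).digest()
        register = hashlib.sha256(register + component_hash).digest()
    return register.hex()


def attest(reported, expected):
    """Return True only if the reported measurement matches the reference."""
    return hmac.compare_digest(reported, expected)


# Hypothetical known-good software stack and its reference measurement.
known_good = [b"bootloader v1", b"operating system v1", b"voting app v1"]
reference = measure(known_good)

tampered = [b"bootloader v1", b"operating system v1", b"voting app v1 + malware"]
print(attest(measure(known_good), reference))  # unmodified device
print(attest(measure(tampered), reference))    # modified device
```

A device that cannot reproduce the reference measurement has, in effect, turned itself in.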

Hardware resiliency: How quickly can a device recover from attacks?

Resiliency is an important, newer approach to tackling security issues for election equipment and across the Internet of Things. Devices must be able to recover quickly from attacks. If an infiltrator compromises a device, such as a voting machine or scanner, the machine must rebound quickly or continue to operate in a “safe mode” despite the breach.

An election outcome could be changed merely by knocking a scanner or a few voting machines out of service on the big day. When voters are kept waiting, they might just give up and go home. The election device must return to its functional state quickly. There is no perfect security, so resilience is essential.
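A simple form of this recovery is to keep a verified fallback image on the device. The Python sketch below picks the first image whose hash matches a trusted value, so a corrupted primary image leads to a known-good recovery image rather than a dead machine. The image names, contents, and `select_boot_image` function are hypothetical, and a real device would anchor the trusted hashes in hardware.

```python
import hashlib


def select_boot_image(images, trusted_hashes):
    """Return the name of the first image that verifies, or None.

    images is an ordered list of (name, image_bytes) pairs, preferred
    image first. trusted_hashes maps each name to its expected SHA-256
    hex digest.
    """
    for name, blob in images:
        if hashlib.sha256(blob).hexdigest() == trusted_hashes.get(name):
            return name
    return None  # nothing verifies: refuse to boot rather than run bad code


# Hypothetical images and their trusted digests.
primary = b"voting application v2"
recovery = b"minimal safe-mode image"
trusted = {
    "primary": hashlib.sha256(primary).hexdigest(),
    "recovery": hashlib.sha256(recovery).hexdigest(),
}

# An attacker has altered the primary image; the device falls back.
corrupted = primary + b" (modified)"
print(select_boot_image([("primary", corrupted), ("recovery", recovery)], trusted))
```

The fallback keeps the device in service in a reduced mode, which matters when voters are waiting in line.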

Where Hostile Nations Would Attack

Election administrators need to take these threats seriously. An attack against election hardware such as voter registration systems, or anywhere along the vote and tally chain, could upend an election. Professor Steve Bellovin of Columbia University, an authority on election security, has emphasized the threat of supply chain attacks, noting that “nation-state attackers have the resources to infiltrate manufacturers of election technology and compromise the tabulating machines. Such attacks would scale the best.”

Bellovin is specifically concerned about critical vote-tallying software, which transmits results from each precinct to the county’s election board, and may have links to the news media. “This software is networked and hence subject to attack,” he says. He also worries about the ballot design software, which “sits on the election supervisors’ PCs.” Counterfeit software can create ballots that favor one candidate, confuse voters, and make the printed ballot difficult to read and verify.

Voting machinery needs hardware-level security. The stakes could not be higher, and the attackers are among the world’s most capable. Authorization, authentication, and attestation at the hardware level, along with built-in cyber resilience, will make most attacks too difficult to pull off successfully. Independent cross-checks, solid procedures, and third-party verification of software and ballots enable even higher confidence, and that confidence is urgently needed. The Pennsylvania election and Iowa caucus showed the need to mitigate election technology shortcomings before a catastrophic compromise occurs.

Read Part 1: “How Can We Make Election Technology Secure?”

Check out The Edge, Dark Reading’s new section for features, threat data, and in-depth perspectives. Today’s top story: “C-Level Studying for the CISSP.”

Ari Singer, CTO at TrustiPhi and long-time security architect with over 20 years in the trusted computing space, is former chair of the IEEE P1363 working group and acted as security editor/author of IEEE 802.15.3, IEEE 802.15.4, and EESS #1. He chaired the Trusted Computing …

Article source: https://www.darkreading.com/risk/5-measures-to-harden-election-technology-/a/d-id/1336978?_mc=rss_x_drr_edt_aud_dr_x_x-rss-simple