We just did an informal survey around the office – we asked 10 people in various departments, technical and non-technical, to say the first thing that came into their head when we said, “Browser tracking.”

(No one heard anyone else’s answer, in case you’re wondering how independent each reply might have been.)

All 10 said, “Cookies.”

That’s not surprising, because many websites these days pop up a warning to say they make use of cookies for tracking you across visits – the theory seems to be that you can’t then later complain you didn’t know.

Cookies, therefore, are a well-documented part of online tracking, and the phrase “web cookie” can be considered everyday terminology now, rather than jargon – we encounter it all the time and have become used to it.

Indeed, some sites openly and visibly allow you to choose to accept or reject their cookies…

…although there’s an amusing irony that the most reliable way for a website to remember that you don’t want cookies set is to set a cookie to tell it not to set any more cookies.

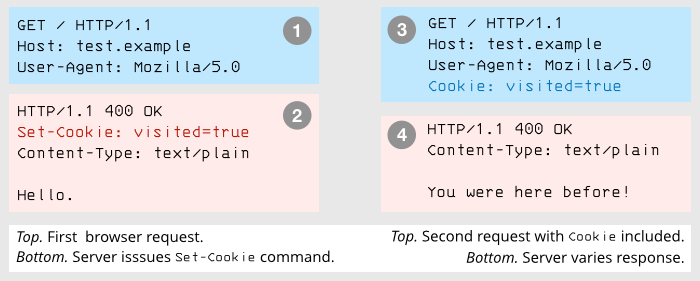

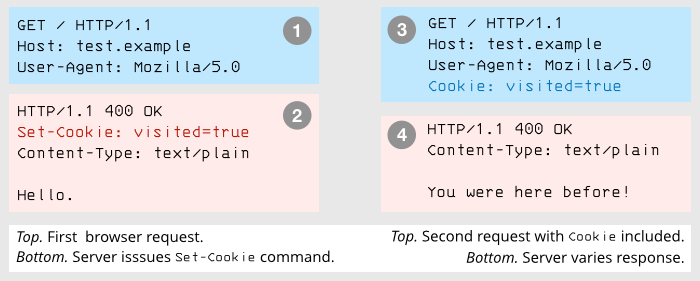

Cookies are browser database entries unique to a website. Your browser sends back a site’s existing cookie entries with every future request to that site. In fact, cookies were specifically designed to track you between visits; without them, you wouldn’t be able to set preferences such as currency and language. For example, this site sets a cookie called nakedsecurity-hide-newsletter after you sign up, so we can tell that there’s no point in showing you the signup box next time. But cookies are also easily misused – in programming jargon, cookies allow ‘stateful behaviour’, which is shorthand for a website keeping track of whether you’re paying a second, or third, or fourth visit, and therefore tying together what you do this time with how you behaved before.
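To make that round trip concrete, here is a minimal Python sketch of what a server might do with the nakedsecurity-hide-newsletter cookie mentioned above. Only the cookie name comes from this article; the value, the lifetime, the path and the function names are assumptions made for illustration, not a description of how this site actually does it.

```python
from http.cookies import SimpleCookie

COOKIE_NAME = "nakedsecurity-hide-newsletter"  # name taken from the article


def build_set_cookie_header(value: str = "1", max_age_days: int = 365) -> str:
    """Build a Set-Cookie header value a server could send after signup.

    The value, lifetime and path are hypothetical choices for this sketch.
    """
    cookie = SimpleCookie()
    cookie[COOKIE_NAME] = value
    cookie[COOKIE_NAME]["max-age"] = max_age_days * 24 * 60 * 60
    cookie[COOKIE_NAME]["path"] = "/"
    return cookie[COOKIE_NAME].OutputString()


def should_show_signup_box(cookie_header: str) -> bool:
    """Inspect the Cookie request header the browser sends back on later visits."""
    cookie = SimpleCookie()
    cookie.load(cookie_header)
    # No cookie means we have no record of a signup, so show the box.
    return COOKIE_NAME not in cookie
```

On the first visit the browser sends no such cookie, so the box appears. Once the header from build_set_cookie_header() has been stored, every later request carries the cookie and the box stays hidden, which is exactly the “stateful behaviour” described above.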

Because cookies are so closely connected with tracking, an increasing number of us are using privacy tools that limit the cookies our browser will remember, or that flush all our cookies periodically, or that warn us about the sort of cookies that are commonly associated with online tracking and targeted marketing.

Cookie usage is also carefully regulated by your browser, to stop privacy and security violations.

For example, while you are browsing on nakedsecurity.sophos.com, your browser will prevent our website from seeing any cookies set by other sites, and vice versa, so one site can’t read out any secrets set by another – this is known, for obvious reasons, as the same origin policy.
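As a rough illustration, an origin is usually taken to be the combination of scheme, hostname and port, and two URLs share an origin only if all three match. The sketch below shows that comparison in Python. The function names are our own, and the rules real browsers apply to cookies (which also involve domain and path attributes) are more nuanced than this simple check.

```python
from typing import Optional, Tuple
from urllib.parse import urlsplit

# Default ports, so that https://example.com and https://example.com:443 match.
DEFAULT_PORTS = {"http": 80, "https": 443}


def origin(url: str) -> Tuple[str, str, Optional[int]]:
    """Reduce a URL to its (scheme, host, port) triple."""
    parts = urlsplit(url)
    scheme = parts.scheme.lower()
    host = (parts.hostname or "").lower()
    port = parts.port if parts.port is not None else DEFAULT_PORTS.get(scheme)
    return (scheme, host, port)


def same_origin(url_a: str, url_b: str) -> bool:
    """True only if scheme, host and port all agree."""
    return origin(url_a) == origin(url_b)
```

With this check, two pages on nakedsecurity.sophos.com served over HTTPS count as the same origin, while a page on any other hostname, or the same hostname over plain HTTP, does not.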

Sadly, web marketing companies have pretty much based their business model on keeping track of you for as long as possible, in as much detail as possible.

For them, the same origin policy gets in the way of tracking you between sites, and regular cookie purges prevent them tracking you on the same site for years or months rather than just days or hours.

It’s you again!

So, a minority of web marketers spend time hunting for brand new ways to detect that it’s you again, even if you tell your browser to dump all officially stored data that’s there to track you, and even if it’s pretty obvious that you don’t want to be tracked.

You’ll hear these tricks called by many different names, such as “supercookies”, “cookie respawning”, “evercookies”, “undeletable cookies” and “browser fingerprinting”, and they often rely on collecting a whole raft of apparently incidental details about your browser – data points that give away very little on their own, but that, when combined, may end up identifying you with surprising accuracy.

For example, websites often include JavaScript code to check the size of your browser window, so they can decide which visual style to use – something you can see on this site by dragging the window narrower and narrower. (Note how the visual layout changes subtly when it gets down to 768 pixels wide.)

But what if a website records your current window size for nefarious purposes, such as tracking you?

If you just happen to resize your browser window to unusual dimensions such as 1306×637 pixels, you’ll present that very same weird screen size again when you refresh the page, even if you clear your cookies in between.

The website operator won’t be sure it’s still you – but they can make a pretty good guess.

Worse still, they may be able to combine that apparently innocent detail with a bunch of other circumstantial evidence to lump you in with an ever-decreasing number of “viable suspects.”

Other browser characteristics that fingerprinting tricksters have abused include details such as: whether you have an external monitor plugged in; which fonts you have installed; how much battery power you have left; which operating system and browser you’re using; what timezone you’re in; the exact pixel layout your browser chooses when rendering characters; and more.
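To see why a handful of incidental details can work like a cookie you can’t delete, consider this minimal Python sketch. It simply hashes a set of collected attributes into one identifier. The attribute names and values are invented for illustration, and real fingerprinting scripts run in the browser and are far more elaborate, but the principle is the same: nothing is stored on your computer, yet the same inputs produce the same identifier every time.

```python
import hashlib
import json


def fingerprint(attributes: dict) -> str:
    """Collapse a set of browser attributes into one stable identifier."""
    # Sort the keys so the same attributes always serialise identically.
    canonical = json.dumps(attributes, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


# Hypothetical values a tracking script might have collected.
first_visit = {
    "window": "1306x637",
    "timezone": "Europe/London",
    "os": "Linux",
    "fonts": ["DejaVu Sans", "Liberation Serif"],
}
# Same browser after clearing cookies: the details have not changed.
second_visit = dict(first_visit)

print(fingerprint(first_visit) == fingerprint(second_visit))  # prints True
```

Change any one attribute, such as the window size, and the identifier changes completely, which is why the countermeasures discussed later focus on making those attributes look the same for everyone.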

Fingerprinting tricks might even include minutiae such as the precision of the timer functions inside your browser and the accuracy of the mathematical formulae used in your browser’s JavaScript engine.

With enough apparently harmless discriminators, an unscrupulous web tracking company may be able to put you into a bucket of 1,000,000 possible users – wait, 10,000 – wait, 1,000 – wait, 63 – wait, 7 – wait, only ONE POSSIBLE USER MEETS ALL THE CRITERIA COLLECTED!

In other words, browser data points that would be individually unimportant may combine to give you a browser fingerprint that is unique, or perhaps puts you into a very small bucket of possible users.
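The shrinking-bucket effect can be sketched in a few lines of Python. The visitor records below are entirely made up, and a real tracker would be working with millions of visitors and many more attributes, but the narrowing process is the same: each extra detail filters the list of candidates a little further.

```python
def narrow_candidates(visitors, observed):
    """Return the bucket size after each observed attribute is applied in turn."""
    candidates = list(visitors)
    sizes = [len(candidates)]
    for key, value in observed.items():
        # Keep only the visitors who match this attribute too.
        candidates = [v for v in candidates if v.get(key) == value]
        sizes.append(len(candidates))
    return sizes


# A tiny, hypothetical population of previously seen visitors.
visitors = [
    {"timezone": "UTC", "os": "Linux", "window": "1306x637"},
    {"timezone": "UTC", "os": "Linux", "window": "1200x600"},
    {"timezone": "UTC", "os": "Windows", "window": "1200x600"},
    {"timezone": "PST", "os": "Linux", "window": "1306x637"},
]
observed = {"timezone": "UTC", "os": "Linux", "window": "1306x637"}

print(narrow_candidates(visitors, observed))  # prints [4, 3, 2, 1]
```

No single attribute identifies the visitor here, but three of them together leave just one candidate.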

A cat-and-mouse game

The result, as with so many aspects of cybersecurity, has been a cat-and-mouse game between the browser makers and the browser fingerprinters.

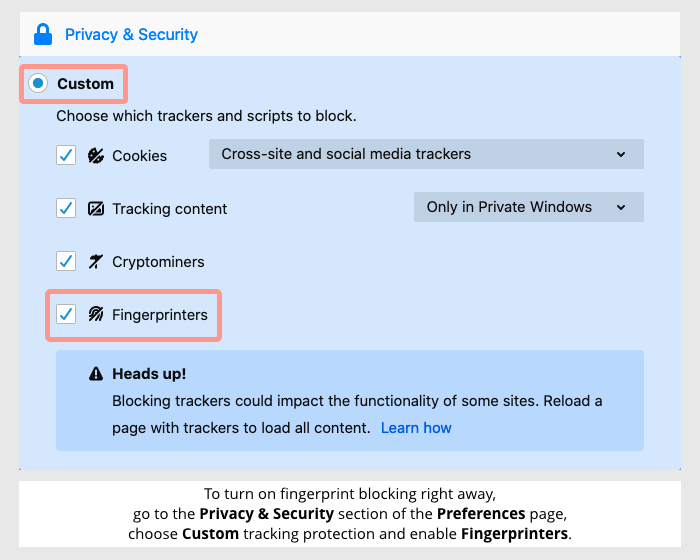

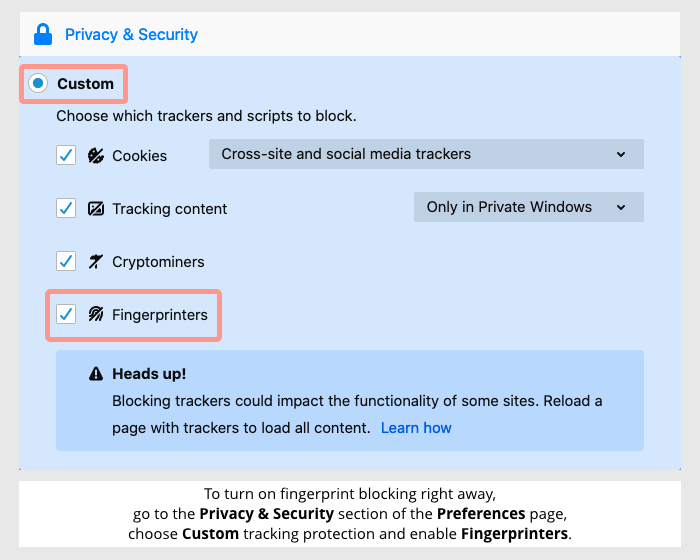

Firefox, in particular, has been vocal about the anti-fingerprinting code it’s been building into its browser in recent years.

Some of these countermeasures have involved throwing out features that, no matter how useful, were primarily being used for evil, not for good – such as getting rid of the navigator.getBattery() function that allowed rogue websites to track the precise battery state of your computer, a data value that tends to change predictably over time.

Other countermeasures include deliberately reducing the precision of system data, for instance by adding random inaccuracies to it, or replacing it with a one-size-fits-all value.

Examples from Mozilla’s own list include:

- Canvas image extraction is blocked.

- Absolute screen coordinates are obscured.

- Window dimensions are rounded to a multiple of 200×100.

- Only specific system fonts are allowed.

- Time precision is reduced to 100ms, with up to 100ms of jitter.

- The keyboard layout is spoofed.

- The locale is spoofed to ‘en-US’.

- The date input field and date picker panel are spoofed to ‘en-US’.

- Timezone is spoofed to ‘UTC’.

- All device sensors are disabled.
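A few of the items above are easy to sketch in Python to show the general idea. This is not Mozilla’s code: the function names are ours, we have assumed that window dimensions are rounded down, and we have left out the random jitter on timer values to keep the example deterministic.

```python
def round_window(width, height, step_w=200, step_h=100):
    """Round window dimensions down to a multiple of 200x100 (rounding down is an assumption)."""
    return (width // step_w * step_w, height // step_h * step_h)


def clamp_time_ms(t_ms, precision_ms=100):
    """Reduce a timestamp to the given precision; jitter is omitted in this sketch."""
    return int(t_ms // precision_ms) * precision_ms


# One-size-fits-all values, as listed above for locale and timezone.
SPOOFED_VALUES = {"locale": "en-US", "timezone": "UTC"}


def spoof(real_attributes):
    """Replace revealing attributes with the same values for every user."""
    return {**real_attributes, **SPOOFED_VALUES}


print(round_window(1306, 637))  # prints (1200, 600)
print(round_window(1250, 610))  # prints (1200, 600)
```

The unusual 1306×637 window from earlier now reports the same size as every other window in the same range, so that detail no longer helps to single anyone out.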

The downside of all this, of course, is that any websites that make legitimate and positive use of these details – for example to improve the accessibility of the site or boost the performance and playability of online games – are out of luck.

The upside is that every browser detail that gets “de-precisioned” is a setback for the Bad Guys, and thus a privacy win for the rest of us.

For those reasons, Firefox’s latest tranche of fingerprinter blocking tools is easy to turn on…

…but they’re not yet on by default, just in case hitting back at the crooks has an annoyingly negative effect on the rest of us.

Coming soon

However, alert observers have spotted that Mozilla is planning to change that soon:

We are enabling fingerprinting blocking in the Standard mode of 72. We will revisit this decision based on the results of [our ongoing monitoring program], and may revert the change during the beta cycle for 72.

Simply put, by making us all look a bit less individual online, browsers can help to frustrate web tracking companies that are determined to keep tabs on us even when we clearly want to stay private.

Sometimes, it pays to lose your individuality and just be one of the crowd!

Article source: http://feedproxy.google.com/~r/nakedsecurity/~3/0A78KcSO4d8/
