Moore has built a network asset discovery tool that wasn’t intended to be a pure security tool, but it addresses a glaring security problem.

HD Moore, famed developer of the wildly popular Metasploit penetration testing tool, is about to go commercial with a new project he originally envisioned would give him a nice break from security.

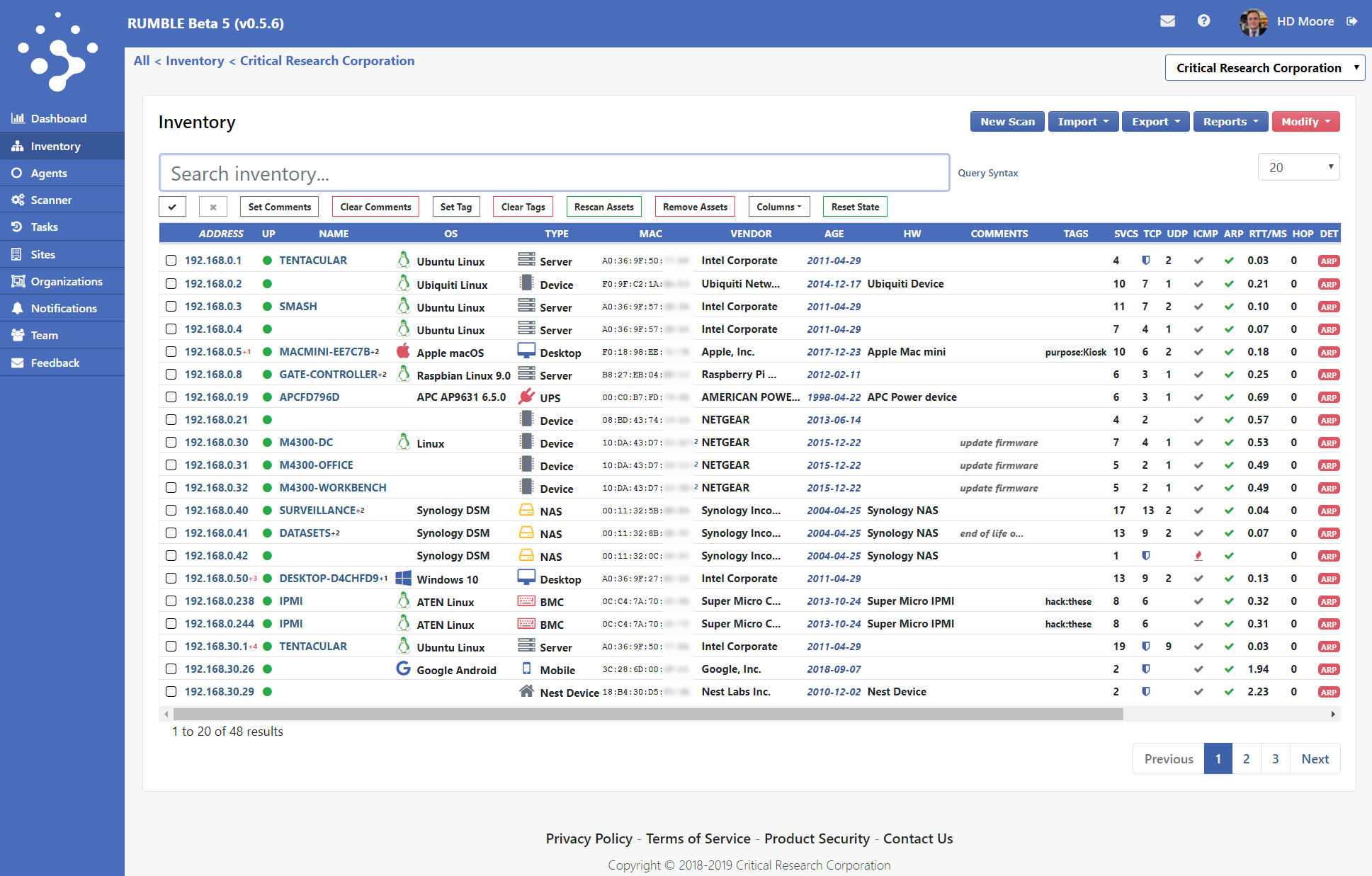

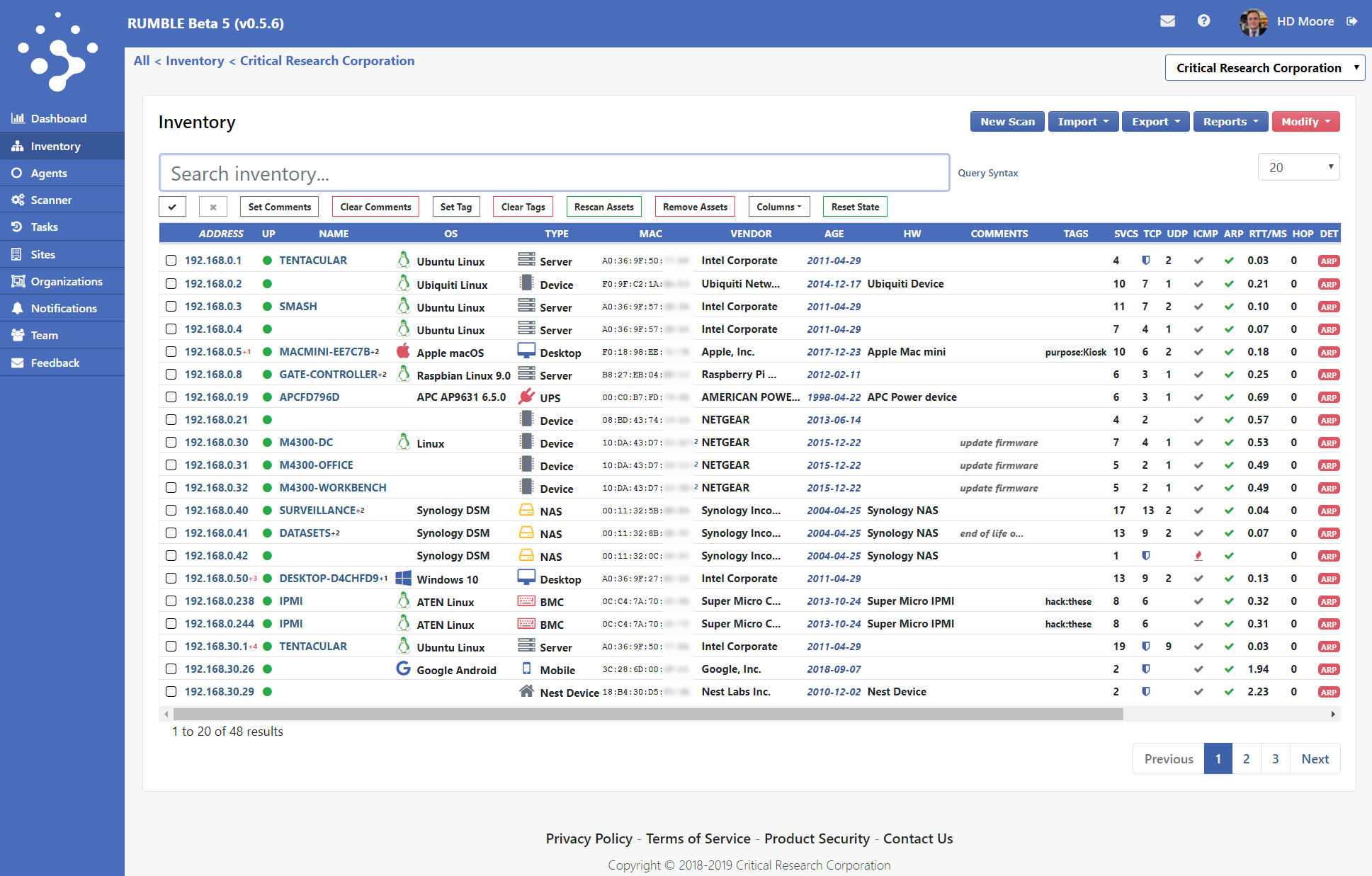

Moore’s IT asset discovery tool, Rumble Network Discovery, aims to solve one of the most basic yet confounding problems organizations face, and have faced for years: getting a true inventory of all of the devices and services running on their increasingly diverse and growing networks. Misconfigured systems, misconfigured network settings, and unknown, unpatched devices sitting on the network are among the most common weak links that expose enterprises to attacks and data breaches today. The problem is being exacerbated by the arrival, official and sometimes unofficial, of Internet of Things (IoT) devices on networks.

The renowned security expert, who over the years has also conducted eye-opening research on millions of exposed corporate devices sitting vulnerable on the public Internet, had been bumping up against the network discovery process when building his security tools.

“Every time I’ve written a security product … Metasploit Pro or working on the [Rapid7] Nexpose product, or my prior vulnerability management [tools] I worked on at DDI [Digital Defense Inc.] and BreakingPoint, the very first step of running these things is building a great discovery engine. I never felt like we had enough time,” says Moore, founder and CEO of Critical Research Corp., and vice president of research and development at Atredis Partners.

HD Moore

Photo courtesy of HD Moore

As a penetration tester, he found internal discovery and monitoring lacking at many organizations. “The challenge I ran into is that most customers that I had done security work for didn’t have the ability to centrally monitor all of their traffic in the first place,” he says. Those customers, for example, had “huge distributed sites all over the place with strange firewalls and strange rules,” and no way to properly find those devices and configurations.

It’s not that there aren’t any IT asset discovery and security tools available today. There’s the popular open source Nmap program, as well as commercial offerings from Armis, Claroty, Cynerio, Forescout, and others, he says. Some of these focus on passive network discovery in sensitive environments, such as industrial control systems, and study traffic patterns.

But Moore says the underlying challenge remains: most of today’s discovery tools require administrative access to the network devices as well as visibility into network traffic. “If you have that stuff, their products work great,” he says.

However, healthcare networks, large retailers, and universities struggle here because of the fluidity and mobility of user devices connecting to their networks. “Higher education, in particular, struggles quite a bit, with tens of thousands of machines [for example] that are student machines and they don’t directly manage,” he says.

They can adopt central monitoring, but those products often are too pricey for the typical state university. “That’s what kind of rankled me: No one had really spent a lot of time doing active discovery right. Current solutions require having credentials and network-traffic monitoring,” he says.

The goal of Rumble, he says, is to provide a discovery tool that doesn’t require credentials to inventory the devices or monitor the ports. “You can just drop it into a network and find everything,” Moore says.

It sounds so simple and obvious — a true mapping and inventory of devices and their status on the network — but it’s one of the biggest security holes for many organizations.

And it turns out, of course, that Moore hasn’t actually been able to take a sabbatical from security with Rumble. Rumble is already resonating with security managers and researchers who have been beta-testing it over the past six months. To date, it tracks more than 1.8 million network assets and runs 1,500 scans per day, with some 2,000 users.

Moore had wanted to build the tool for IT people who may or may not have security experience or responsibilities, but most of his beta users have been IT people with security roles, security researchers, or IT managers from universities, healthcare organizations, service providers — and even bug bounty organizations.

“Starting out I wanted to avoid security entirely; I didn’t want to sell another security product. I wanted to sell something that was directly useful and didn’t rely on like, five other things, to be useful,” says Moore.

In Moore’s hometown of Austin, University of Texas network security analyst Christian Gugas and his team have been testing Rumble for the past couple of months to check for vulnerabilities in university-issued faculty user systems, mainly for the BlueKeep vulnerability, which exposes Windows machines via the Remote Desktop Protocol (RDP).

“What we would try to do is organize those searches and afterward see what machines had that [RDP] port open. We would try proof-of-concept code to see if we could do anything with those machines,” Gugas says of the tests.

Gugas says he was impressed with the speed of Rumble — it was faster for his team than Nmap — and the level of detail it provided on the devices the team scanned. There were a couple of false positives, he says, but the results overall were “pretty damn good,” and exporting the data into JSON files let his team’s scripts grab it and catalog it.
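To illustrate the kind of script Gugas describes, here is a minimal Go sketch that reads a JSON export and lists hosts with the default RDP port (3389) open. The article does not describe Rumble's export schema, so the `Asset` type and its `address`, `mac`, and `ports` fields are hypothetical assumptions, not the product's real format.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// Asset is a hypothetical shape for one exported record. The article does not
// describe Rumble's export schema, so these field names are assumptions.
type Asset struct {
	Address string `json:"address"`
	MAC     string `json:"mac"`
	Ports   []int  `json:"ports"` // open TCP ports observed on the asset
}

// hostsWithPort decodes a JSON array of assets and returns the addresses of
// those that list the given port as open.
func hostsWithPort(data []byte, port int) ([]string, error) {
	var assets []Asset
	if err := json.Unmarshal(data, &assets); err != nil {
		return nil, err
	}
	var matches []string
	for _, a := range assets {
		for _, p := range a.Ports {
			if p == port {
				matches = append(matches, a.Address)
				break
			}
		}
	}
	return matches, nil
}

func main() {
	export := []byte(`[
		{"address": "10.0.0.5", "mac": "00:11:22:33:44:55", "ports": [22, 3389]},
		{"address": "10.0.0.9", "mac": "66:77:88:99:aa:bb", "ports": [80, 443]}
	]`)
	rdpHosts, err := hostsWithPort(export, 3389) // 3389 is the default RDP port
	if err != nil {
		panic(err)
	}
	fmt.Println(rdpHosts) // [10.0.0.5]
}
```

A real pipeline would map these fields to whatever the actual export contains; the filtering step stays the same.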

Security expert James Boyd, a member of the San Antonio Hackers Association, tested Rumble on his home lab network, on a Linux-based virtual machine. Rumble right away spotted and fingerprinted some rogue machines and wireless access points he had planted in the lab network.

“Everyone wants to do red team [and now blue team],” he says. “No one wants to track what’s on your network; that’s considered boring.” But it’s probably one of the most overlooked parts of security, he adds.

How Rumble Works

Rumble, which officially moves from beta to production at the end of this month, comes as a command-line scanner that can be downloaded and run offline, or as agents that run on almost any platform and report to a cloud-based console. The Go-based platform scans at Layers 2 and 3 and “fingerprints” applications, Moore explains. Unlike most scanners, it uses only a small number of probes, designed to extract as much detail as possible about each device on the network.

The “secret sauce” is mostly how Rumble can use a device’s MAC address to “leak” other information about it, such as its network interfaces. “For a lot of IT folks, the MAC address is the absolute source of truth for how they manage inventory,” he says. MACs uniquely identify devices, while IP addresses, which some discovery tools rely on, change, he notes.
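One well-known piece of information a MAC address carries on its own is its vendor prefix. The Go sketch below extracts the OUI (the first three octets) and checks the locally administered bit, which is set on randomized or software-assigned addresses. This is a generic illustration, not Rumble's technique, and the two-entry `ouiVendors` table is a hypothetical stand-in for the full IEEE OUI registry.

```go
package main

import (
	"fmt"
	"net"
)

// ouiVendors is a tiny illustrative excerpt of the IEEE OUI registry; a real
// inventory tool would load the full registry rather than hard-code entries.
var ouiVendors = map[string]string{
	"00:50:56": "VMware",
	"B8:27:EB": "Raspberry Pi Foundation",
}

// describeMAC returns the address's OUI (first three octets), a vendor guess
// from ouiVendors, and whether the locally administered bit is set. Locally
// administered addresses (for example, randomized ones) carry no vendor OUI.
func describeMAC(s string) (oui, vendor string, local bool, err error) {
	hw, err := net.ParseMAC(s)
	if err != nil {
		return "", "", false, err
	}
	oui = fmt.Sprintf("%02X:%02X:%02X", hw[0], hw[1], hw[2])
	local = hw[0]&0x02 != 0 // U/L bit: 1 means locally administered
	vendor = ouiVendors[oui]
	if vendor == "" {
		vendor = "unknown"
	}
	return oui, vendor, local, nil
}

func main() {
	oui, vendor, local, err := describeMAC("b8:27:eb:12:34:56")
	if err != nil {
		panic(err)
	}
	fmt.Println(oui, vendor, local) // B8:27:EB Raspberry Pi Foundation false
}
```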

Rumble determines the specifics on the device without needing to authenticate to it. “When we do a scan, we can use the MAC to leak out hostnames, stack fingerprints … and merge it all together to see what device is on the network,” he says.

That would help to determine, for instance, whether a device was communicating across an unauthorized network segment, such as a payment card network that wasn’t supposed to be connected to a retail operations network.

A look at Rumble Network Discovery’s user interface.

Source: Critical Research Corp.

But Rumble does not identify malware in the network. That type of detection typically entails traffic monitoring, Moore says, which isn’t the goal of Rumble. “We are looking for how does it respond to the network, what services are exposed,” for example, or which Windows machines are part of the Active Directory domain, he says.

Rumble employs a homegrown “Splunk-style” search engine, he says. The scan and search data can be exported to JSON, CSV, or other file formats, and regular scans can be scheduled to run in the background.

Shades of previous HD Moore projects are in Rumble, too. Probes from his 2013 research at Rapid7 into UDP information leaks, which found nearly 50 million networked devices exposed on the Internet via flaws in the Universal Plug and Play (UPnP) protocol, are woven into Rumble, along with other probes he developed and the fruits of other vulnerability research he has conducted over the years.

What’s Really On the Network

Moore says Rumble has rooted out some shocking residents on corporate networks, such as PlayStation 4s and Amazon Echoes. “Consumer-level technology has made its way into every corporate network we’ve ever scanned,” he says. “You see a lot of smart TVs and Apple TV kits … definitely not your typical enterprise equipment.”

He expects managed service providers to be Rumble’s main sweet-spot customer, but he has seen mainly healthcare, state government, and higher education organizations testing it to date. The hope is to keep Rumble affordable for those budget-strapped organizations. “We are looking at being one-fourth or one-third the cost of any comparable security tool,” he notes.

Moore is offering a 50% discount for beta users who graduate to commercial status by October 15. For organizations scanning up to 256 devices, it’s $495 per year; pricing scales with the size of the organization, at $49,995 per year for scanning up to 100,000 devices, for example.
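The two quoted tiers imply very different per-device prices. This Go sketch works through that arithmetic; it assumes the 50% beta discount applies to the list price, which the article does not spell out, and the helper names are hypothetical.

```go
package main

import "fmt"

// perDeviceUSD divides a tier's annual price by the number of devices it covers.
func perDeviceUSD(annualUSD float64, devices int) float64 {
	return annualUSD / float64(devices)
}

// discounted applies a fractional discount (0.5 for 50%) to a price.
func discounted(priceUSD, fraction float64) float64 {
	return priceUSD * (1 - fraction)
}

func main() {
	fmt.Printf("%.2f\n", perDeviceUSD(495, 256))      // 1.93 per device per year
	fmt.Printf("%.2f\n", perDeviceUSD(49995, 100000)) // 0.50 per device per year
	fmt.Printf("%.2f\n", discounted(495, 0.5))        // 247.50, if the 50% beta discount applies
}
```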

Rapid7 chief scientist Bob Rudis has been testing Rumble both in Rapid7’s labs and on his home network. As a security expert diligent about keeping his home network locked down, he thought it was pretty solid: no Wi-Fi for his IoT devices, for example, and mostly Zigbee for communications. But when he ran Rumble on the network, the tool discovered that his weather station had updated its software and opened an unauthenticated telnet service on his LAN. “I missed something, and this tool caught it,” he says.

Meanwhile, Moore says the initial commercial release is just the beginning. Network discovery is an ongoing challenge, and he expects to be updating Rumble for a long time to come.

“It’s really boiled down to we want to do an awesome job at discovery and just discovery, and keep our focus on identifying products and identifying services [in the network],” Moore says. “It’s not the most interesting or sexy-sounding technology, but it’s where people actually need help.”

Check out The Edge, Dark Reading’s new section for features, threat data, and in-depth perspectives. Today’s top story: “The 20 Worst Metrics in Cybersecurity.”

Kelly Jackson Higgins is Executive Editor at DarkReading.com. She is an award-winning veteran technology and business journalist with more than two decades of experience in reporting and editing for various publications, including Network Computing, Secure Enterprise …

Article source: https://www.darkreading.com/analytics/metasploit-creator-hd-moores-latest-hack-it-assets-/d/d-id/1335860?_mc=rss_x_drr_edt_aud_dr_x_x-rss-simple
