Code-busters lift RSA keys simply by listening to the noises a computer makes


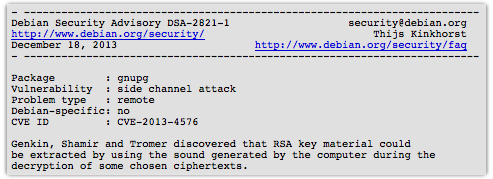

Computer scientists have shown how it might be possible to capture RSA decryption keys using the sounds emitted by a computer while it runs decryption routines.

The clever acoustic cryptanalysis attack was developed by Adi Shamir (the “S” in RSA) of the Weizmann Institute of Science, together with research colleagues Daniel Genkin and Eran Tromer, and represents the practical fulfillment of an idea first hatched nearly 10 years ago. Back in 2004, Shamir and his colleagues realised that the high-pitched noises emitted by computers could leak sensitive information about security-related computations.

At the time they established that different RSA keys induce different sound patterns, but they weren’t able to turn this into anything practical. Fast forward 10 years and the researchers have come up with a practical attack that uses everyday electronics, such as mobile phones, to carry out the necessary eavesdropping. The attack relies on the sounds a computer generates while decrypting ciphertexts selected by an attacker, as the paper “RSA Key Extraction via Low-Bandwidth Acoustic Cryptanalysis” explains:

> We describe a new acoustic cryptanalysis key extraction attack, applicable to GnuPG’s current implementation of RSA. The attack can extract full 4096-bit RSA decryption keys from laptop computers (of various models), within an hour, using the sound generated by the computer during the decryption of some chosen ciphertexts. We experimentally demonstrate that such attacks can be carried out, using either a plain mobile phone placed next to the computer, or a more sensitive microphone placed four meters away.

Put simply, the attack uses a mobile phone or other microphone to recover RSA private keys bit by bit. The process involves bombarding a particular email client with thousands of carefully crafted encrypted messages, on a system configured to open these messages automatically. The private key under attack can’t be password-protected, because then a human would need to intervene to open every message.

There are other limitations too: the target must be using the GnuPG 1.4.x software for RSA decryption. And because the whole process is an adaptive chosen-ciphertext attack, a potential attacker needs a live listening device that provides continuous acoustic feedback in order to work out what the next encrypted message needs to be. The attack requires an evolving conversation of sorts rather than the delivery of a fixed (albeit complex) script.
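To make the “bit by bit” and “adaptive” parts concrete, here is a toy Python model. It is a simplified illustration, not the researchers’ method: the acoustic side channel is replaced by a hypothetical oracle that simply reports whether reducing a chosen value c modulo a secret prime q leaves c unchanged (that is, whether c < q). All names here (`make_oracle`, `recover_prime`, `leaks_unreduced`) are illustrative. The point it shows is that each chosen ciphertext depends on the bits recovered so far, which is why the attacker needs live feedback rather than a fixed script.

```python
# Toy model of an adaptive chosen-ciphertext attack that recovers a secret
# value q one bit at a time, most significant bit first.
# ASSUMPTION: the oracle below is a HYPOTHETICAL stand-in for the acoustic
# side channel. Real acoustic leakage is far noisier and more indirect.


def make_oracle(q: int):
    """Return a hypothetical leak oracle for the secret q (not a real API)."""
    def leaks_unreduced(c: int) -> bool:
        # True iff reducing c modulo q leaves it unchanged, i.e. c < q.
        return c % q == c
    return leaks_unreduced


def recover_prime(oracle, bits: int) -> int:
    """Recover a `bits`-bit secret using one adaptive query per bit."""
    known = 1 << (bits - 1)  # assume the top bit of q is set
    for i in range(bits - 2, -1, -1):
        # Chosen ciphertext: the bits recovered so far, then bit i = 0,
        # then all lower bits = 1.
        c = known | ((1 << i) - 1)
        # If bit i of q is 1, q > c; if it is 0, q <= c. So the oracle's
        # answer reveals bit i, and the next query depends on it.
        if oracle(c):
            known |= 1 << i
    return known


if __name__ == "__main__":
    secret_q = 65521  # small prime standing in for a real RSA factor
    recovered = recover_prime(make_oracle(secret_q), secret_q.bit_length())
    print(recovered == secret_q)
```

A 4096-bit key has two secret primes of roughly 2048 bits each, so even in this idealised model recovering one of them takes on the order of a couple of thousand adaptive queries.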

Mitigating the attack simply requires using the more modern GnuPG 2.x instead of the vulnerable GnuPG 1.4.x branch, which ought to plug the hole at least until more powerful attacks come along.

“The Version 2 branch of GnuPG has already been made resilient against forced-decryption attacks by what is known as RSA blinding,” explains security industry veteran Paul Ducklin in a post on Sophos’ Naked Security blog.
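RSA blinding works by randomising the value that actually goes into the private-key exponentiation, so the ciphertexts an attacker carefully chooses no longer control what the hardware computes. Below is a minimal sketch of the general idea in Python, assuming textbook RSA with no padding and a tiny toy key; it is not GnuPG’s code, and the function name `decrypt_blinded` is illustrative.

```python
import math
import secrets


def decrypt_blinded(c: int, d: int, e: int, n: int) -> int:
    """Textbook RSA decryption with multiplicative blinding (toy sketch)."""
    # Pick a fresh random r in [2, n-1] that is invertible modulo n.
    while True:
        r = secrets.randbelow(n - 2) + 2
        if math.gcd(r, n) == 1:
            break
    # Blind: c' = c * r^e mod n = (m * r)^e mod n, a value the attacker
    # did not choose and cannot predict.
    c_blind = (c * pow(r, e, n)) % n
    # The private-key operation runs on the blinded value: m' = m * r mod n.
    m_blind = pow(c_blind, d, n)
    # Unblind by multiplying by r^-1 mod n (pow(r, -1, n) needs Python 3.8+).
    return (m_blind * pow(r, -1, n)) % n


# Toy key: n = 61 * 53 = 3233, e = 17, d = 2753. Ciphertext 2790 encrypts 65.
print(decrypt_blinded(2790, 2753, 17, 3233))
```

Because r is drawn afresh for every decryption, sending the same chosen ciphertext twice feeds two unrelated values into the exponentiation, which is what denies an attacker the consistent feedback the adaptive attack depends on.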

Even aside from this, all sorts of things are likely to go wrong with the potential attack, including background noise and the possibility that the intended target keeps his or her mobile phone in a pocket or bag while reading encrypted emails on a nearby system.

Key recovery might also be possible through other types of side-channel attack, the crypto boffins go on to explain. For example, changes in the electrical potential of the laptop’s chassis can provide a source for analysis at least as reliable as emitted sounds. This potential can be measured at a distance if any shielded cable (e.g. USB, VGA, HDMI) is plugged in, because the cable’s shield is connected to the chassis. ®


Article source: http://go.theregister.com/feed/www.theregister.co.uk/2013/12/19/acoustic_cryptanalysis/


![Click on the image to read the original paper [PDF]...it's well worth it!](https://stewilliams.com/wp-content/plugins/rss-poster/cache/8cbbd_gpg-loops-500.png)

![Click on the image to read the original paper [PDF]...it's well worth it!](https://stewilliams.com/wp-content/plugins/rss-poster/cache/24e06_gpg-differents-500.png)

![Click on the image to read the original paper [PDF]...it's well worth it!](https://stewilliams.com/wp-content/plugins/rss-poster/cache/24e06_gpg-decoy-spikes-500.png)