Let’s say that, mid-oversharing, I thought better about writing a Facebook post about how the rash has now spread to my … (cue the backspacing, the select all/delete, hitting cancel or whatever it takes to avoid telling the world about that itch).

If that text were a Facebook status update (or a Twitter tweet, a Yahoo email, a comment on a blog or any other typing on a web page), cancelling it doesn’t, theoretically, really matter: what I wrote could still have been recorded, even if I decided not to post it.

That’s a point brought up on Friday by Jennifer Golbeck, director of the Human-Computer Interaction Lab and an associate professor at the University of Maryland.

Slate published an article Golbeck wrote up about a paper, titled Self-Censorship on Facebook (PDF), that describes a study conducted by two Facebook researchers: Sauvik Das, a PhD student at Carnegie Mellon and summer software engineer intern at Facebook, and Adam Kramer, a Facebook data scientist.

Over the course of 17 days in July 2012, the two researchers collected self-censorship data from a random sample of about 5 million English-speaking Facebook users in the US or UK.

How did they know when one of the Facebook users under their microscope had decided to back out of a post?

That’s simple as pie, really: they used code they had embedded in the web pages to determine if anything had been typed into the forms in which we compose status updates or comment on people’s posts.

To protect users’ privacy, the researchers decided to record “only the presence or absence of text entered, not the keystrokes or content”. That quote is a helpful reminder that they could have tracked your keystrokes if they had wanted to.

(Note: logging keystrokes is no super secret, privacy-sucking vampire sauce. It’s plain old Web 1.0. This is not news, but it’s certainly worth repeating: anybody with a website can capture what you type, as you type it, if they want to.)

The researchers counted a user as having started writing only if he or she typed at least five characters into a compose or comment box. If that content wasn’t shared within 10 minutes, it was marked as self-censored.
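To make that rule concrete, here is a minimal TypeScript sketch of the classification as the article describes it. It is not the researchers' code: the names (`ComposeSession`, `classifySession` and so on) are hypothetical, and it assumes the 10-minute window starts when typing begins and that sharing at exactly 10 minutes still counts as shared.

```typescript
// Thresholds as reported in the article; the constant names are illustrative.
const MIN_CHARS = 5;
const WINDOW_MS = 10 * 60 * 1000; // 10 minutes in milliseconds

// Hypothetical record of one visit to a compose or comment box.
interface ComposeSession {
  maxCharsTyped: number;   // most characters ever present in the box
  startedAt: number;       // ms timestamp when typing began (assumed window start)
  sharedAt: number | null; // ms timestamp of posting, or null if never posted
}

type Outcome = "not-started" | "shared" | "self-censored";

function classifySession(s: ComposeSession): Outcome {
  // Fewer than five characters: the user never counted as having started.
  if (s.maxCharsTyped < MIN_CHARS) return "not-started";
  // Posted inside the window: ordinary sharing.
  if (s.sharedAt !== null && s.sharedAt - s.startedAt <= WINDOW_MS) {
    return "shared";
  }
  // Started writing but did not post in time.
  return "self-censored";
}
```

Note that nothing in this rule needs the text itself: a character count and two timestamps are enough.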

Why in the world would Facebook, Twitter, or similar care so much about my rash and subsequent decision not to tell the world about it?

While second thoughts come in handy to stop people who might otherwise post truly embarrassing Facebook or other social media content, as far as the social networks themselves are concerned, self-censoring users just starve sites of the content they otherwise feed upon.

From the paper:

Users and their audience could fail to achieve potential social value from not sharing certain content, and the [social network] loses value from the lack of content generation…

… Understanding the conditions under which censorship occurs presents an opportunity to gain further insight into both how users use social media and how to improve [social networks] to better minimize use-cases where present solutions might unknowingly promote value diminishing self-censorship

In her Slate article, Golbeck interprets Facebook’s 17-day collection of self-censorship data for this research to be an invasion of privacy in that, as she writes, “the things you explicitly choose not to share aren’t entirely private.”

The problem with this thinking is that it conflates two things: 1) Facebook’s ability to capture data about users who started typing something but then didn’t publish it, and 2) the incorrect notion that Facebook tracked the content of what users typed.

Could Facebook have captured my need for salve? Absolutely. As I said above, anybody with a website can capture what we type into their website as we type it. It’s the nature of the web.

But the researchers took pains to state that while they did track the presence or absence of text entered, they explicitly did not listen in on the abandoned content; indeed, they tracked neither the keystrokes nor the content entered.

From the paper:

All instrumentation was done on the client side. In other words, the content of self-censored posts and comments was not sent back to Facebook’s servers. Only a binary value that content was entered at all.
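A rough sketch of what "only a binary value" can mean in practice: the page sees the text, but what it sends home is reduced to a yes/no flag before it leaves the browser. The report shape, the `boxId` field and the function name are assumptions made for illustration, not details from the paper.

```typescript
// Hypothetical report format: a flag, never the text.
interface PresenceReport {
  boxId: string;        // which form the flag refers to (illustrative)
  textEntered: boolean; // true if enough text was typed
}

// Reduce the typed text to a boolean on the client side.
// minChars defaults to the five-character threshold the article mentions.
function buildPresenceReport(
  boxId: string,
  typedText: string,
  minChars: number = 5
): PresenceReport {
  return { boxId, textEntered: typedText.length >= minChars };
}

// What would go over the wire: the draft's content is absent.
const wirePayload = JSON.stringify(
  buildPresenceReport("status", "the rash has now spread to my")
);
```

The design choice that matters is where the reduction happens. Because the text is discarded in the browser, the server never has the abandoned draft to store.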

That said, Facebook was still looking over its users’ shoulders in a fashion that would likely come as an unpleasant surprise to many of them.

Golbeck’s conflation isn’t surprising. Particularly given NSA-gate and the heightened awareness about pervasive surveillance it’s bestowed upon us, we’re ready to see eavesdropping governments and their corporate lackeys lurking in every corner of the internet.

But there’s a yawning gap between what people think can and cannot be monitored and what is actually possible.

The reality is that JavaScript, the language that makes this kind of monitoring possible, is both powerful and ubiquitous.

It’s a fully featured programming language that can be embedded in web pages and all browsers support it. It’s been around almost since the beginning of the web, and the web would be hurting without it, given the things it makes happen.

Among the many features of the language are the abilities to track the position of your cursor, track your keystrokes and call ‘home’ without refreshing the page or making any kind of visual display.
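To show how little code is involved, here is a small TypeScript sketch of the mechanism: listen for input events on a text box and hand each snapshot to a callback. It is the same pattern that powers autosave and auto-suggest. `InputLike`, `watchInput` and `FakeBox` are hypothetical names; the interface is modelled loosely on the DOM's `addEventListener` so the sketch runs outside a browser, and the `send` callback stands in for whatever background request a real page would make.

```typescript
// Minimal stand-in for a text box, modelled on the DOM's event API.
interface InputLike {
  value: string;
  addEventListener(type: "input", handler: () => void): void;
}

// Forward the box's current contents every time they change.
function watchInput(box: InputLike, send: (snapshot: string) => void): void {
  box.addEventListener("input", () => send(box.value));
}

// Fake text box so the sketch can run without a browser.
class FakeBox implements InputLike {
  value = "";
  private handlers: Array<() => void> = [];

  addEventListener(_type: "input", handler: () => void): void {
    this.handlers.push(handler);
  }

  // Simulate the user changing the contents of the box.
  type(text: string): void {
    this.value = text;
    this.handlers.forEach((h) => h());
  }
}
```

In this sketch, a draft that is typed and then deleted has already been passed to `send` before the box is emptied, which is why hitting cancel offers no guarantee.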

Those aren’t intrinsically bad things. In fact, they’re enormously useful. Without those sorts of capabilities, sites like Facebook and Gmail would be almost unusable, searches wouldn’t auto-suggest and Google Docs wouldn’t save our bacon in the background.

There are countless examples of useful, harmless things this (very old) functionality enables.

But yes, it also provides the foundation for any sufficiently motivated website owner to track more or less everything that happens on their web pages.

This is the same old web we’ve been using since forever, but a lot of people don’t realize it. When they find out, they’re often horrified.

This was illustrated by a recent news piece about Facebook mulling the tracking of cursor movements (actually, technically, it would be tracking the movement of users’ pointers on the screen) to see which ads we like best.

The comments on that story make clear that many people are utterly creeped out by the idea that websites can track their pointers. One commenter likened pointer tracking to keylogging.

But as Naked Security’s Mark Stockley pointed out in a subsequent comment on that article, none of this is new, and the capability is certainly not confined to Facebook:

If Facebook [wants] to do key logging then [it] can – so long as you’re browsing one of their pages they can capture everywhere your cursor goes and everything you type. I’m not saying they do, I’ve no idea, I’m just saying it’s possible – any website can do it and it’s very easy.

In fact, as Mark noted in his comment on the pointer-tracking story, if he had decided to ditch the comment he was writing halfway through, the Naked Security site could still have captured everything he typed, even if he’d never hit submit (it didn’t by the way, we don’t do that).

In sum: Facebook spent 17 days tracking abandoned posts in a manner that some might find discomforting, and readers are reminded that the internet allows website owners to be far, far more invasive.

If you want to be sure that nobody is tracking your mouse pointer or what you type then you’ll have to turn off JavaScript or use a browser plugin like NoScript that will allow you to choose which scripts you run or which websites you trust.

Image of backspace key courtesy of Shutterstock.

Article source: http://feedproxy.google.com/~r/nakedsecurity/~3/I4xemn4JiPE/

Hey, nice, you got a text message from your mate.

Hey, nice, you got a text message from your mate.  Let’s say that, mid-oversharing, I thought better about writing a Facebook post about how the rash has now spread to my … (cue the backspacing, the select all/delete, hitting cancel or whatever it takes to avoid telling the world about that itch).

Let’s say that, mid-oversharing, I thought better about writing a Facebook post about how the rash has now spread to my … (cue the backspacing, the select all/delete, hitting cancel or whatever it takes to avoid telling the world about that itch). Facebook, Apple, Wal-Mart and other companies that plan to use facial-recognition scans for security will be helping to write the rules for how images and online profiles can be used.

Facebook, Apple, Wal-Mart and other companies that plan to use facial-recognition scans for security will be helping to write the rules for how images and online profiles can be used. Android 4.3, released in July, had a feature that enabled users to install apps but also keep them from collecting sensitive data such as a user’s location or address book.

Android 4.3, released in July, had a feature that enabled users to install apps but also keep them from collecting sensitive data such as a user’s location or address book. Apple just announced the first

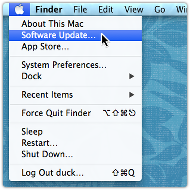

Apple just announced the first  The Mavericks update can be fetched, as usual, by using the Software Update… option in the Apple menu, or by fetching a standalone installer in the form of a DMG (Apple disk image) file.

The Mavericks update can be fetched, as usual, by using the Software Update… option in the Apple menu, or by fetching a standalone installer in the form of a DMG (Apple disk image) file.