5 Steps To Managing Mobile Vulnerabilities

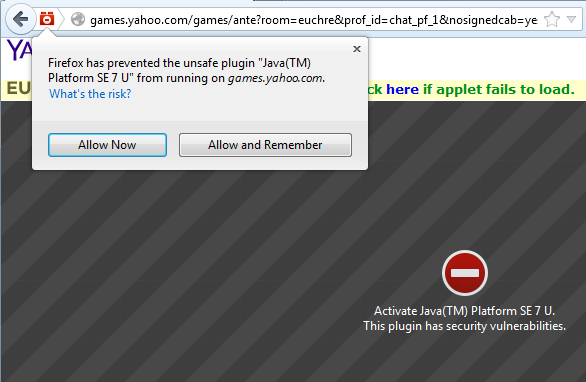

On the second Tuesday of every month, information technology and security groups rush to fix vulnerabilities in their desktop systems, reacting to the regularly scheduled Patch Tuesday implemented by Microsoft and Adobe.

Yet, in most cases, the plethora of smartphones and tablets carried by employees and the hundreds of applications on those devices are not managed, and fixing vulnerabilities on those systems is left up to the user. While the software ecosystem surrounding mobile devices typically means that mobile applications are regularly updated, the risk posed by those programs is typically unknown to most companies.

Businesses need to start paying attention to the mobile software coming in the front door to make sure their data is not headed out that same portal, says Chris Wysopal, chief technology officer for application-security firm Veracode.

“Mobile application management is becoming as important as mobile device management,” he says. “The app layer is where all the risky behavior is happening.”

While mobile applications are relatively new vectors of attack, security researchers and application developers have shown that vulnerabilities do exist. The Master Key and SIM card vulnerabilities demonstrated at the Black Hat security conference show that platform issues can lead to exploitable vulnerabilities. Yet more common are rogue applications that are legitimate but use aggressive advertising frameworks or tactics to collect a disproportionate amount of information on the user.

[At Black Hat USA, a team of mobile-security researchers show off ways to circumvent the security of encrypted containers meant to protect data on mobile devices. See Researchers To Highlight Weaknesses In Secure Mobile Data Stores.]

Currently, Veracode and other companies are seeing interest in managing mobile vulnerabilities and risk from the largest enterprises, those with the most at risk. Yet, with the proliferation of mobile devices, more companies will have to worry about vulnerable and risky apps, Bala Venkat, chief marketing officer at application-security firm Cenzic, said in an e-mail interview.

“The explosion of mobile devices, growing number of new applications on devices and the access of data anywhere from any device or platform poses a very challenging security environment for organizations.”

For companies that want to tame the risk from their mobile applications, Venkat and other security experts recommend the following five steps.

1. Focus on the apps, not the device

While many companies have mobile-device management (MDM) systems to help them deal with their fleet of devices, the bring-your-own-device (BYOD) movement has left a gap in their coverage. The devices are no longer owned by the businesses, so managing them can be a policy problem. In addition, the threat is less about the device and more about the applications, says Domingo Guerra, founder and president of Appthority.

Because businesses have thousands of employees and hundreds of applications on their devices, managing the applications should be the focus for most companies, he says.

“There are a lot of different points of possible data breaches,” Guerra says.

2. Catch vulnerabilities at development

While the vulnerabilities in mobile applications are not handled in the same way as with desktop systems, one area of commonality exists. Companies that develop their own in-house applications need to adopt a secure development lifecycle to catch and root out vulnerabilities.

“It is important for companies to ensure its application developers and administrators have a thorough knowledge of the common application attacks, the tools available for detecting vulnerabilities and the procedures for fixing them,” says Cenzic’s Venkat.

Vetting third-party code used in the development process is also important. The advertising frameworks used by many mobile developers typically take actions of which the developer may not be aware. Other frameworks should be checked out as well, says Appthority’s Guerra.

“Because it is not all internal code, companies have to be wary,” he says.
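One simple way to start vetting is to compare the frameworks bundled into an app against a list the security team has already reviewed. The Python sketch below illustrates the idea; the framework names and the reviewed list are hypothetical, and the function is an illustration rather than any vendor's method.

```python
def unreviewed_frameworks(bundled, reviewed):
    """Return bundled third-party frameworks that are not on the reviewed list.

    Names are compared case-insensitively; both inputs are hypothetical
    inventories that a company would have to maintain itself.
    """
    reviewed_lower = {name.lower() for name in reviewed}
    return sorted(
        name for name in set(bundled) if name.lower() not in reviewed_lower
    )


# Hypothetical frameworks found in an in-house app build.
bundled = ["ExampleAds", "ExampleCrashReporter", "ExampleAnalytics"]
# Hypothetical list of frameworks that have passed a security review.
reviewed = ["examplecrashreporter"]

needs_review = unreviewed_frameworks(bundled, reviewed)
print(needs_review)
```

Anything the function returns would still need a human or tool-based review; the check only shows which code has not yet been looked at.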

3. Measure app reputation

Another way to assess the risk of third-party applications is to use one of the application reputation services. These services, such as Appthority and Veracode’s Mobile Application Reputation Service (MARS), evaluate mobile applications using runtime and static analysis and create a risk profile for each.

“It is the applications that are purported to be legitimate, but are being monetized through information harvesting that are the bigger risks,” says Veracode’s Wysopal.

In many cases, companies can apply their own policies to the assessment results and generate whitelists and blacklists that determine which mobile applications may access business data or be installed on devices managed by MDM solutions.
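As a rough illustration of how such a policy might be applied, the Python sketch below sorts apps into a whitelist and a blacklist based on their risk profiles. The `AppProfile` fields, the 0-to-10 score scale, and the threshold are all hypothetical and do not reflect the output format of any actual reputation service.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AppProfile:
    """Hypothetical risk profile; real services define their own fields."""
    name: str
    risk_score: float        # assumed scale: 0 (benign) to 10 (high risk)
    harvests_contacts: bool  # assumed flag for information harvesting


def build_lists(profiles, max_score=5.0, allow_contact_harvesting=False):
    """Split apps into (whitelist, blacklist) according to company policy."""
    whitelist, blacklist = [], []
    for app in profiles:
        too_risky = app.risk_score > max_score
        harvesting = app.harvests_contacts and not allow_contact_harvesting
        target = blacklist if (too_risky or harvesting) else whitelist
        target.append(app.name)
    return sorted(whitelist), sorted(blacklist)


# Hypothetical assessment results for three apps.
profiles = [
    AppProfile("notes-app", 2.1, False),
    AppProfile("free-flashlight", 3.0, True),
    AppProfile("file-sync", 6.4, False),
]
allowed, blocked = build_lists(profiles)
print("allowed:", allowed)
print("blocked:", blocked)
```

Keeping the policy in function parameters rather than in the profile data means the same assessment results can be re-evaluated whenever the company changes its risk tolerance.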

4. Encrypt data on the device and in transit

A key consideration for many companies is whether the mobile devices employees use for work encrypt the data stored on them. Mobile containerization technology can wrap applications in code that enforces encryption while letting the company manage the keys.

Companies should also worry about unencrypted communications to cloud services, says Cenzic’s Venkat.

“Storing unencrypted sensitive data on often-lost mobile devices is a significant cause for concern, but the often unsecured Web services commonly associated with mobile applications can pose an even bigger risk,” he says.
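A first-pass check for unencrypted communications is to flag any service endpoint that does not use HTTPS. The Python sketch below shows that check under simple assumptions; the endpoint URLs are hypothetical placeholders, and how a company collects an app's endpoints is left open.

```python
from urllib.parse import urlparse


def unencrypted_endpoints(urls):
    """Return the URLs whose scheme is anything other than HTTPS."""
    flagged = []
    for url in urls:
        scheme = urlparse(url).scheme.lower()
        if scheme != "https":
            flagged.append(url)
    return flagged


# Hypothetical endpoints, e.g. gathered from an app's configuration.
endpoints = [
    "https://api.example.com/v1/sync",
    "http://ads.example.net/track",
    "https://files.example.org/upload",
]
flagged = unencrypted_endpoints(endpoints)
print(flagged)
```

A scheme check only catches plain HTTP. It says nothing about whether the app validates certificates or whether the Web service itself is secure, so it complements rather than replaces a fuller assessment.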

5. Make security easy to use

Finally, employees will get around security measures unless they are easy to use. To retain productivity gains, businesses should support the way that employees work, says Veracode’s Wysopal.

“People want to be able to grab a file off of Dropbox,” he says. “If people cannot interact between a corporate environment and the personal environment, then users will complain and reject the monolithic corporate apps and security.”

Article source: http://www.darkreading.com/vulnerability/5-steps-to-managing-mobile-vulnerabiliti/240164636
