For pity’s sake, groans Mimecast, teach your workforce not to open obviously dodgy emails

A JavaScript-based phishing campaign mainly targeting British finance and accounting workers has been uncovered by Mimecast.

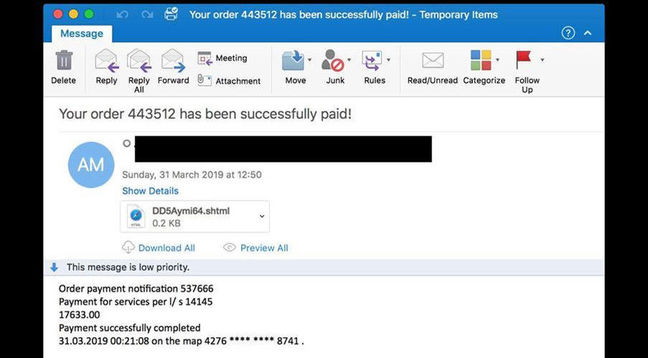

The attack, details of which the security company published on its blog, “was unique in that it utilized SHTML file attachments, which are typically used on web servers”.

When the mark opened the attachment to a phishing email, the embedded JavaScript would immediately punt them off to a malicious site where they were invited to enter sensitive login credentials.
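For the defensively minded, the mechanism described above can be roughly sketched as a mail-filter heuristic: look for attachments with an SHTML extension whose contents contain a script-driven or meta-refresh redirect. This is a minimal illustration in Python using only the standard library, not Mimecast's detection logic. The function names, the extension list and the regex patterns are all assumptions made for the example, and a real filter would need far more than three regexes.

```python
import re
from email import message_from_bytes, policy
from email.message import EmailMessage

# Assumed heuristics: common ways an HTML page redirects the browser.
REDIRECT_PATTERNS = [
    re.compile(r"(?:window\.|document\.)?location(?:\.href)?\s*=", re.I),
    re.compile(r"location\.(?:replace|assign)\s*\(", re.I),
    re.compile(r"<meta[^>]+http-equiv\s*=\s*[\"']?refresh", re.I),
]
# Assumed list of server-side-include extensions worth a second look.
SUSPECT_EXTENSIONS = (".shtml", ".shtm")


def suspicious_attachments(raw_message: bytes) -> list[str]:
    """Return lowercased filenames of SHTML attachments containing a redirect."""
    msg = message_from_bytes(raw_message, policy=policy.default)
    flagged = []
    for part in msg.iter_attachments():
        name = (part.get_filename() or "").lower()
        if not name.endswith(SUSPECT_EXTENSIONS):
            continue
        # decode=True undoes base64/quoted-printable transfer encoding.
        payload = part.get_payload(decode=True) or b""
        text = payload.decode("utf-8", errors="replace")
        if any(pattern.search(text) for pattern in REDIRECT_PATTERNS):
            flagged.append(name)
    return flagged


def build_demo_message() -> bytes:
    """Build a harmless, made-up message that mimics the shape of the attack."""
    msg = EmailMessage()
    msg["Subject"] = "Payment advice"
    msg["From"] = "sender@example.com"
    msg["To"] = "accounts@example.com"
    msg.set_content("Please see the attached remittance.")
    html = '<script>window.location.href = "https://example.invalid/login";</script>'
    msg.add_attachment(
        html.encode("utf-8"),
        maintype="application",
        subtype="octet-stream",
        filename="Remittance.shtml",
    )
    return msg.as_bytes()


if __name__ == "__main__":
    print(suspicious_attachments(build_demo_message()))
```

A check like this would only ever be one signal among many: obfuscated JavaScript sails straight past simple pattern matching, which is rather the point of the "simple still works" warning.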

Tomasz Kojm, senior engineering manager at Mimecast, said: “This seemingly innocent attachment redirecting unsuspecting users to a malicious site might not be a particularly sophisticated technique, but it does present businesses with a big lesson. Simple still works. That’s a huge challenge for organisations trying their best to keep their systems secure.”

Targeted attack email caught by Mimecast

British financial and accounting firms have taken the brunt of the attack, receiving 55 per cent of the emails so far detected, with the same sector being targeted in South Africa with 11 per cent of phishing attempts. Around a third of emails were sent to Australian targets, typically in the higher education sector.

Mimecast, which among other things makes email security software, said it had blocked the attack from reaching 100,000 subscribers, and, among the usual thinly disguised sales pitches, offered some rather good advice:

“Train every employee so they can spot a malicious email the second it arrives in their inbox. This can’t be an annual box-ticking quiz, it needs to be regular and engaging. Phishing is not going away any time soon, so you need to ensure your employees can act as a final line of defence against these threats… If in doubt, follow the basic rule to ignore, delete and report.”

As threat intelligence, security and antivirus companies all go down the route of building ever more sophisticated (and expensive) “endpoint solutions,” firewalls and the like, it is important to remember that the simplest attack vectors are the ones enjoying ever more success.

UK spy agency GCHQ’s public-facing offshoot, the National Cyber Security Centre, warned in its annual report that it had halted 140,000 phishing attacks and nixed 190,000 fraudulent websites – though of the top 10 phishing targets, a significant number were identified as government or quasi-governmental agencies (including HMRC, the BBC and the Student Loans Company), which were at risk of reputational harm.

UK.gov reckons its brave cyber defenders are having an impact. The Department for Digital, Culture, Media and Sport reckoned there was about a 10 per cent decline in UK businesses reporting cyber attacks and breaches over the past year. ®


Article source: http://go.theregister.com/feed/www.theregister.co.uk/2019/07/17/finance_phishing_javascript/