Google launches new Chrome protection from bad URLs

Google on Tuesday launched two new security features to protect Chrome users from deceptive sites: an extension that offers an easy way to report suspicious sites, and a new warning to flag sites with deceptive URLs.

Emily Schechter, Chrome Product Manager, said in a post on Google’s security blog that Google Safe Browsing has been protecting Chrome users from phishing attacks for over 10 years. It now helps protect more than four billion devices across multiple browsers and apps, warning people before they visit dangerous sites or download dangerous files.

The new extension, called the Suspicious Site Reporter, is going to help even more, she said, as it gives users an easy way to report suspicious sites to Google Safe Browsing.

Safe Browsing works by automatically analyzing sites that Google knows about through Google Search’s web crawlers, and the more dangerous or deceptive sites it knows about, the more users it can protect, Schechter said.

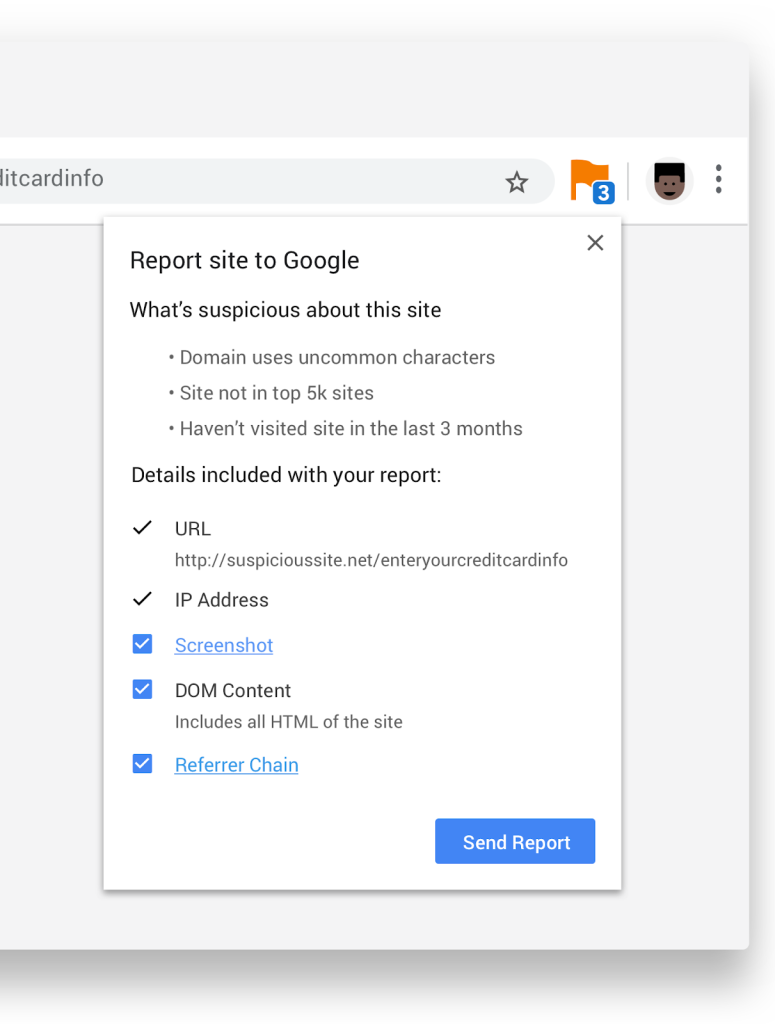

Users who install the extension, which is available now on the Chrome Web Store, will see an icon when they’re on a potentially dangerous site. The extension lists the reasons the site is considered suspicious. In the example Google provided, the reasons are that the domain uses uncommon characters (which could be a sign of typosquatting), that the site isn’t listed in the top 5,000 sites, and that the user hasn’t visited the site in the past three months.
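Google hasn’t said how the extension weighs these signals. Purely as an illustration, the three example checks could be expressed roughly as below. `TOP_SITES`, `RECENT_WINDOW` and `suspicion_reasons` are hypothetical names, the tiny hard-coded site list stands in for a real top-5,000 ranking, and "three months" is approximated as 90 days.

```python
from datetime import datetime, timedelta

# Hypothetical stand-ins: a real tool would load a popularity ranking
# (the extension mentions a top-5,000 list) and the user's browsing history.
TOP_SITES = {"google.com", "facebook.com", "amazon.com"}
RECENT_WINDOW = timedelta(days=90)  # "past three months", approximated as 90 days


def suspicion_reasons(domain, last_visit, now):
    """Return human-readable reasons a domain looks suspicious (sketch only)."""
    reasons = []
    # Flag anything other than ASCII letters, digits, hyphens and dots;
    # lookalike Unicode characters are a common trick.
    if any(not (c.isascii() and (c.isalnum() or c in "-.")) for c in domain):
        reasons.append("domain uses uncommon characters")
    if domain.lower() not in TOP_SITES:
        reasons.append("site is not in the top sites list")
    # No recorded visit, or the last one is older than the window.
    if last_visit is None or now - last_visit > RECENT_WINDOW:
        reasons.append("you haven't visited this site in the past three months")
    return reasons
```

For instance, a never-visited domain that spells "google" with Cyrillic "о" characters in place of Latin "o" would trip all three checks, while a recently visited google.com would trip none.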

Clicking on “Send Report” allows users to report unsafe sites to Safe Browsing for further evaluation.

Do it for team “Everybody,” Schechter said:

If the site is added to Safe Browsing’s lists, you’ll not only protect Chrome users, but users of other browsers and across the entire web.

Protection from fumble fingers

The second new security feature for Chrome that Google announced is a new warning to protect users from sites with deceptive URLs. Heaven knows we fat-fingered typists need help on this front: It’s all too easy to quickly type a URL you use every day, whether it’s Google or Facebook or Amazon, and in your haste, you accidentally swap, add, or delete a single letter and hit enter.

Maybe you’ll wind up getting a 404 message… if you’re lucky. Otherwise, you could wind up on a spoofed copy of the page you were trying to reach.

Registering common misspellings of popular websites to catch unwary users is known as typosquatting, and it’s exactly what it sounds like: cybercrooks scoop up these frequently misspelled domain names, knowing that sooner or later, some innocent users will get stuck in their flytrap.
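To show how cheaply those misspellings can be enumerated, here is a minimal sketch covering the single-letter slips described above: a deleted letter, a doubled letter, or two adjacent letters swapped. The function name `typo_variants` is hypothetical, and this is not the method any particular typosquatter (or researcher) uses; real tools add more slip types, such as hitting a neighbouring key.

```python
def typo_variants(name):
    """Generate single-slip misspellings of a domain label (sketch only)."""
    variants = set()
    for i in range(len(name)):
        variants.add(name[:i] + name[i + 1:])        # one letter deleted
        variants.add(name[:i] + name[i] + name[i:])  # one letter doubled
        if i + 1 < len(name):
            # two adjacent letters swapped
            variants.add(name[:i] + name[i + 1] + name[i] + name[i + 2:])
    variants.discard(name)  # swapping two identical letters gives back the original
    return sorted(variants)
```

Even this short list yields 14 distinct variants of "google", such as "gogle", "gooogle" and "goolge", each a candidate domain a squatter could register.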

A while back, Naked Security’s Paul Ducklin misspelled Apple, Facebook, Google, Microsoft, Twitter, and Sophos in 2,249 ways to see what would happen; in effect, he had a computer mistype URLs across the web and recorded what turned up. He found everything from outright fake pages to adult content and contests designed to capture personal information.

But however carefully we try to type, misspellings and typos are bound to happen. That’s why it’s nice to hear that Google is helping out with this new security feature, which will warn users away from sites whose URLs look an awful lot like legitimate domains but whose operators have something else entirely in mind.

That something else might be throwing pop-ups and ads into unsuspecting users’ faces; trying to sell them IT and hosting services, interesting domain names, or fake tech support; or tricking them into giving away personal or financial information, say, by offering a free product for just the cost of shipping and thereby capturing their payment card data.

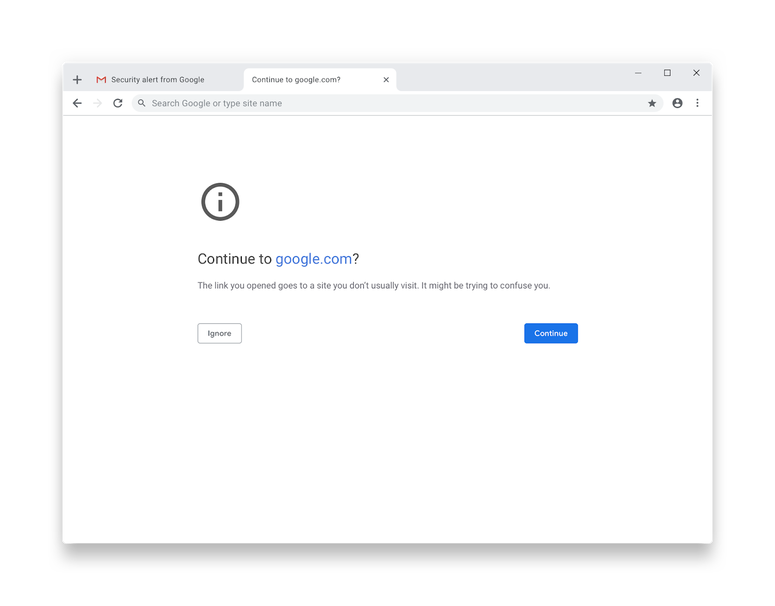

This is what the new warning looks like. Clicking “Continue” will whisk you back to safety.

Schechter:

This new warning works by comparing the URL of the page you’re currently on to URLs of pages you’ve recently visited. If the URL looks similar, and might cause you to be confused or deceived, we’ll show a warning that helps you get back to safety.
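Schechter doesn’t say how Chrome decides that two URLs “look similar.” One plausible approach, offered here purely as an assumption, is to compare hostnames by edit distance (the number of single-character insertions, deletions or substitutions needed to turn one into the other). The names `edit_distance`, `lookalike_of` and `max_distance` are hypothetical.

```python
from urllib.parse import urlsplit


def edit_distance(a, b):
    """Levenshtein distance between two strings, computed row by row."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        cur = [i]
        for j, cb in enumerate(b, start=1):
            cur.append(min(prev[j] + 1,               # delete a character of a
                           cur[j - 1] + 1,            # insert a character into a
                           prev[j - 1] + (ca != cb))) # substitute (free if equal)
        prev = cur
    return prev[-1]


def lookalike_of(current_url, recent_urls, max_distance=1):
    """Return a recently visited host the current host closely resembles, or None."""
    host = urlsplit(current_url).hostname or ""
    for url in recent_urls:
        known = urlsplit(url).hostname or ""
        # An exact match is the same site, not a lookalike.
        if known and known != host and edit_distance(host, known) <= max_distance:
            return known
    return None
```

With this sketch, visiting gooogle.com after google.com would return "google.com", the cue to show a warning. Note that plain Levenshtein distance counts swapped letters ("goolge") as two edits, so a threshold of 1 would miss them; the Damerau–Levenshtein variant counts a swap as a single edit.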

This one feels obvious, doesn’t it? It’s hard to believe that we’ve spent years telling people to be careful as they type, instead of having some way to automagically check whether a URL is almost-but-not-quite a popular, safe domain rather than some stagnant sinkhole lying nearby.

Nicely done, Chrome. Readers, if you use the new tools, feel free to tell us how you like them in the comments section below.

Article source: http://feedproxy.google.com/~r/nakedsecurity/~3/BqmWOsnr0Cs/