Huawei bungled router security, leaving kit open to botnets, despite alert from ISP years prior

Exclusive: Huawei bungled its response to warnings from an ISP’s code review team about a security vulnerability common across its home routers – patching only a subset of the devices rather than all of its products that used the flawed firmware.

Years later, those unpatched Huawei gateways, still vulnerable and still in use by broadband subscribers around the world, were caught up in a Mirai-variant botnet that exploited the very same hole flagged up earlier by the ISP’s review team.

The Register has seen the ISP’s vulnerability assessment given to Huawei in 2013 that explained how a programming blunder in the firmware of its HG523a and HG533 broadband gateways could be exploited by hackers to hijack the devices, and recommended the remote-command execution hole be closed.

Our sources have requested anonymity.

After receiving the security assessment, which was commissioned by a well-known ISP, Huawei told the broadband provider it had fixed the vulnerability, and had rolled out a patch to HG523a and HG533 devices in 2014, our sources said. However, other Huawei gateways in the HG series, used by other internet providers, suffered from the same flaw because they used the same internal software, and remained vulnerable and at risk of attack for years because Huawei did not patch them.

One source described the bug as a “trivially exploitable remote code execution issue in the router.”

The vulnerability, located in the firmware’s UPnP handling code, was uncovered by other researchers in more Huawei routers years later, and patched by the manufacturer, suggesting the Chinese giant was tackling the security hole whack-a-mole-style, rolling out fixes only when someone new discovered and reported the bug.

One at a time, please – don’t all rush in

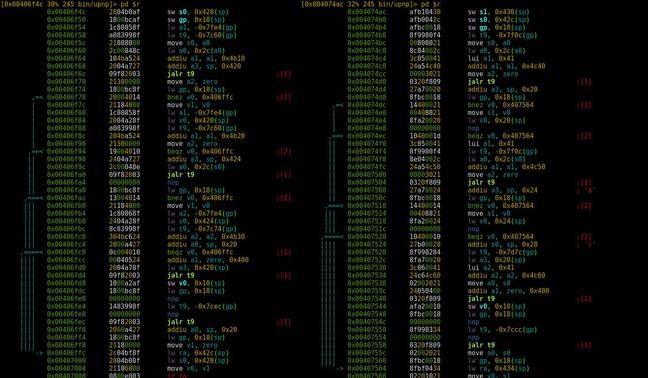

El Reg has studied Huawei’s home gateway firmware, and found blocks of code, particularly in the UPnP component, reused across multiple device models, as you’d expect. Unfortunately, Huawei has chosen to patch the models one by one as the UPnP bug is found and reported again and again, rather than issuing a comprehensive fix to seal the hole for good.

And found they were: “Some time between 2013 and 2017 this issue was then also rediscovered by some nefarious types who used it as part of the exploitation pack to [hijack] consumer home routers as part of the Mirai botnet,” a source told us.

Four years after the ISP’s review team privately disclosed the UPnP command-injection vulnerability to Huawei in 2013, and a year after the infamous Mirai botnet takedown of Dyn DNS in 2016, infosec firm Check Point independently found that the same vuln quietly patched in the HG523a and HG533 series was still lurking in another of the Chinese goliath’s home routers: the HG532.

The Israeli outfit told us it went public with its discovery of the bug, CVE-2017-17215, on December 21, 2017, only after Huawei “had notified customers and developed a patch”, adding that it first “spotted malicious activity on 23rd November 2017”.

Huawei publicly acknowledged the security hole in the HG532 on November 30, 2017, suggesting that “customers take temporary fixes to circumvent or prevent vulnerability exploit or replace old Huawei routers with higher versions”.

Last summer, a security researcher discovered that HG532 routers, the same model in which Check Point had found the UPnP vuln, were being hijacked to form an 18,000-strong botnet built using a variant of the Mirai malware. A botnet that could have been avoided if Huawei had patched the broadband boxes when it quietly updated the related HG523a and HG533 devices in 2014.

Under British government policy, Huawei network equipment is not considered secure enough for government networks, yet officials say it is acceptable to expose the general public to the potential risks present in Huawei gear. Meanwhile, the US government has banned Huawei equipment from its federal agency networks, a move the Chinese corporation is suing to overturn.

UPnP vulnerability described

Routers affected by the UPnP vuln included Huawei’s HG523a, HG532e, HG532S, and HG533 models. For the HG533, firmware version 1.14 was reviewed by the ISP’s security assessors, and for the HG523a, version 1.12. The other two models were probed by Check Point. These were white-label products distributed by internet providers.

Check Point summarised the vulnerability, which affects all four models of router, back in 2017 as follows:

From looking into the UPnP description of the device, it can be seen that it supports a service type named ‘DeviceUpgrade’. This service is supposedly carrying out a firmware upgrade action by sending a request to ‘/ctrlt/DeviceUpgrade_1’ (referred to as controlURL) and is carried out with two elements named ‘NewStatusURL’ and ‘NewDownloadURL’.

The vulnerability allows remote administrators to execute arbitrary commands by injecting shell meta-characters “$()” in the NewStatusURL and NewDownloadURL…

The ISP’s report, dated 2013 and seen by The Register, stated:

An unauthenticated command execution vulnerability was discovered in the UPNP interface visible from the LAN on the Huawei Wireless Routers.

The UPNP schema, defined at http://192.168.1.1:37215/desc/DevUpg.xml describes the “Upgrade” action, which takes two arguments, “NewDownloadURL” and “NewStatusURL”.

The “NewStatusURL” parameter is vulnerable to command injection when commands are introduced via backticks. Injected commands are run with root privileges on the underlying operating system. No authentication credentials are required to exploit this vulnerability.
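For readers unfamiliar with UPnP: a device advertises each service in an XML service description (SCPD) listing the actions the service accepts and the arguments each takes, and both the ISP’s report and Check Point’s summary point to that description as the place where the upgrade action and its two URL parameters are exposed. The Python sketch below parses a hypothetical, trimmed-down SCPD modelled on the report’s wording; the XML is illustrative rather than copied from Huawei firmware, and `list_actions` is our own helper name.

```python
import xml.etree.ElementTree as ET

# Hypothetical, trimmed-down SCPD modelled on the ISP report's description.
# It is NOT taken from Huawei firmware; real SCPDs also carry specVersion
# and serviceStateTable sections, omitted here for brevity.
SCPD_XML = """<scpd xmlns="urn:schemas-upnp-org:service-1-0">
  <actionList>
    <action>
      <name>Upgrade</name>
      <argumentList>
        <argument>
          <name>NewDownloadURL</name>
          <direction>in</direction>
        </argument>
        <argument>
          <name>NewStatusURL</name>
          <direction>in</direction>
        </argument>
      </argumentList>
    </action>
  </actionList>
</scpd>"""

# Standard namespace used by UPnP service descriptions.
NS = {"u": "urn:schemas-upnp-org:service-1-0"}


def list_actions(scpd_xml: str) -> dict[str, list[str]]:
    """Map each advertised action name to the names of its input arguments."""
    root = ET.fromstring(scpd_xml)
    actions = {}
    for action in root.findall("u:actionList/u:action", NS):
        name = action.findtext("u:name", namespaces=NS)
        # Keep only arguments the client supplies ("in"), i.e. attacker-controlled input.
        inputs = [
            arg.findtext("u:name", namespaces=NS)
            for arg in action.findall("u:argumentList/u:argument", NS)
            if arg.findtext("u:direction", namespaces=NS) == "in"
        ]
        actions[name] = inputs
    return actions


print(list_actions(SCPD_XML))
```

Running it prints `{'Upgrade': ['NewDownloadURL', 'NewStatusURL']}`: every argument marked `in` is a value a LAN client, or anyone who can reach the port, gets to choose.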

Our sources confirmed the issue Check Point found was the same one described in the internal ISP report into the HG523a and HG533 firmware.

That would mean Huawei knew of the security weakness in 2013, claimed it was fixed in 2014, yet didn’t fully address it in other routers until 2017 when Check Point got wind of it. The flawed UPnP service can be accessed from the LAN by local machines, and, depending on the default configuration, can face the public internet for anyone or any botnet to find.

The ISP-commissioned review, incidentally, documented exploiting the command-injection hole using backticks, while Check Point demonstrated the same flaw using the shell’s $() syntax. Both are forms of shell command substitution, so the end result is the same: a hacker can insert shell commands into the URL parameters passed to the router’s UPnP service, and those commands are executed with root-level privileges.

Specifically, the URLs are placed directly on the command line used to invoke the device’s firmware-update program, without any sanity checks or sanitization, so any commands injected into them are executed as the updater program is invoked.
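To show why an unsanitized URL on a command line is so dangerous, the Python sketch below contrasts the flawed pattern, splicing a parameter into a string that a shell then parses, with the safer pattern of passing it as a single argument with no shell involved. It is an illustration under stated assumptions, not Huawei’s code: the harmless `echo` program stands in for the router’s updater, and the function names are hypothetical. Both the backtick and $() forms are tried, since the shell treats them the same way.

```python
import subprocess

# Hypothetical stand-in for the router's updater program. We use the
# harmless `echo` so the example is safe to run on any POSIX system.
UPDATER = "echo"


def run_updater_unsafe(download_url: str) -> str:
    """Flawed pattern: splice the URL into a command string for /bin/sh.

    The shell performs command substitution on `...` and $(...) before the
    updater runs, so whatever is inside them executes with the caller's
    privileges (root, on the affected routers).
    """
    cmd = f"{UPDATER} {download_url}"
    result = subprocess.run(cmd, shell=True, capture_output=True, text=True)
    return result.stdout.strip()


def run_updater_safe(download_url: str) -> str:
    """Safer pattern: pass the URL as one argv element, with no shell.

    The program receives the string verbatim; backticks and $() are just
    ordinary characters because nothing interprets them.
    """
    result = subprocess.run([UPDATER, download_url], capture_output=True, text=True)
    return result.stdout.strip()


for url in ("http://example.com/fw`echo INJECTED`",
            "http://example.com/fw$(echo INJECTED)"):
    print("unsafe:", run_updater_unsafe(url))
    print("safe:  ", run_updater_safe(url))
```

On a POSIX system the unsafe version prints `http://example.com/fwINJECTED` for both URLs, because the shell ran the embedded `echo INJECTED` before the updater saw its argument, while the safe version prints each URL verbatim. Input validation is a useful extra layer but no substitute: `$`, `(` and `)` are legal characters in a URL under RFC 3986, so a check that merely confirms the parameter parses as a URL would not have stopped the $() variant.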

Audit … Our own analysis of the flawed code in Huawei’s firmware. On the left is the dodgy function in the HG533, and on the right, the HG532. The HG533 was quietly patched in 2014 following the ISP review, whereas the HG532 was fixed in 2017 after Check Point spotted it – despite both running functionally the same 32-bit MIPS code.

A technical analysis of the security screw-up can be found here.

In a statement, Huawei told The Register:

On November 27, 2017 Huawei was notified by Check Point Software Technologies Research Department of a possible remote code execution vulnerability in its HG532e and HG532S routers. The vulnerabilities highlighted within the report concerned these two routers only. Within days we issued a security notice and an update patch to rectify the vulnerability.

A 2014 report by one of our customers evaluated potential vulnerabilities in our HG523a and HG533 routers. As soon as these issues were presented to us, a patch was issued to fix them. Once made available to our customers, the HG533 and HG523a devices experienced no issues.

It added that it had publicly published an advisory on the Huawei PSIRT page in 2017.

The Chinese company did not address why it had patched the same code vulnerability in some products back in 2014 but not fixed the same pre-existing flaw in other routers until it was pointed out to the firm years later.

This is not the first time Huawei’s networking kit has been placed under the spotlight: the 2017 annual report by British code reviewers from the Huawei Cyber Security Evaluation Centre (HCSEC) obliquely criticised Huawei’s business practices around older components reaching end-of-life while still embedded within Huawei products that had a longer expected lifespan.

The same team is expected to again criticise the Chinese manufacturer in this year’s report over its security practices. ®

Article source: http://go.theregister.com/feed/www.theregister.co.uk/2019/03/28/huawei_mirai_router_vulnerability/