How to make people sit up and use 2-factor auth: Show ’em a vid of someone reusing a toothbrush to scrub a toilet – then compare it to password reuse

RSA Despite multi-factor authentication being on hand for more than a decade now to protect online accounts and other logins from hijacking by miscreants, many people still aren’t using it. Today, a pair of academics revealed potential reasons why uptake remains so limited.

Spoiler alert: it’s because, apparently, there isn’t enough focus on clearly explaining the actual need for this extra layer of account security.

In a presentation at this year’s RSA Conference, taking place in San Francisco this week, Dr L Jean Camp, a professor at Indiana University Bloomington in the US, and her doctoral candidate Sanchari Das, detailed their research into why people aren’t using Yubico security keys or Google’s hardware tokens for multi-factor authentication (MFA).

For those who don’t know: typically, you use these gadgets to provide an extra layer of security when logging into systems. You enter your username and password as usual, then plug the USB-based key into your computer and tap a button to activate it. The thing you’re trying to log into checks that the username and password are correct, and that the physical key is valid and tied to your account, before letting you in.

That means a crook has to know your username and password and also have your physical key to log in as you. We highly recommend you investigate activating MFA on your online accounts, particularly important ones such as your webmail.
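For the curious, here is a toy Python sketch of the two-factor check described above, under some loudly stated assumptions: the `ToyKey` and `Server` classes are hypothetical, and where real Yubico and Google keys use public-key signatures (the FIDO U2F/WebAuthn standards), so the server only ever holds a public key, this sketch swaps in an HMAC challenge-response over a shared secret to stay within Python's standard library. It illustrates the logic of "know the password AND hold the key", not how any real product works.

```python
import hashlib
import hmac
import secrets
from typing import Optional

# ASSUMPTION: a toy stand-in for a hardware key. Real FIDO keys sign challenges
# with a private key; here we use HMAC over a shared secret for simplicity.
class ToyKey:
    def __init__(self, secret: bytes) -> None:
        self._secret = secret  # never leaves the "device"

    def respond(self, challenge: bytes) -> bytes:
        # Equivalent of tapping the button: answer the server's fresh challenge.
        return hmac.new(self._secret, challenge, hashlib.sha256).digest()


def hash_password(password: str, salt: bytes) -> bytes:
    # Salted, deliberately slow hash so passwords aren't stored in plain text.
    return hashlib.pbkdf2_hmac("sha256", password.encode(), salt, 100_000)


class Server:
    """Hypothetical login service that demands both factors."""

    def __init__(self) -> None:
        self.accounts: dict[str, tuple[bytes, bytes, bytes]] = {}

    def enroll(self, username: str, password: str, key_secret: bytes) -> None:
        salt = secrets.token_bytes(16)
        # A real server would store only the key's public key, not a shared secret.
        self.accounts[username] = (salt, hash_password(password, salt), key_secret)

    def login(self, username: str, password: str, key: Optional[ToyKey]) -> bool:
        record = self.accounts.get(username)
        if record is None:
            return False
        salt, stored_hash, key_secret = record
        # Factor 1, something you know: the password must match.
        if not hmac.compare_digest(hash_password(password, salt), stored_hash):
            return False
        # Factor 2, something you have: no key, no entry.
        if key is None:
            return False
        # A fresh random challenge each time, so a recorded answer can't be replayed.
        challenge = secrets.token_bytes(32)
        expected = hmac.new(key_secret, challenge, hashlib.sha256).digest()
        return hmac.compare_digest(key.respond(challenge), expected)


# Usage: a crook with the stolen password but no key is still locked out.
secret = secrets.token_bytes(32)
server = Server()
server.enroll("alice", "correct horse", secret)
print(server.login("alice", "correct horse", ToyKey(secret)))  # True
print(server.login("alice", "correct horse", None))            # False
```

The shared secret is the sketch's big simplification: if this server's database leaked, the key secrets would leak with it, which is precisely what the public-key design of real security keys avoids.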

Findings

What the pair found during their research undermines any assumption that the lack of MFA uptake is because people are stupid or can’t use the technology. What it comes down to is education and communicating risk.

The duo carried out a two-phase test in which users were told about the technology and shown instructions on how to use it. Feedback from this phase, which revealed where folks were getting stuck in the process of MFA enrollment, was passed along to manufacturers of the security keys, who, we’re told, changed their instructions and prioritized ease-of-use as a result. However, it still wasn’t enough.

“Even after the [training] sessions, they still didn’t use it,” Dr Camp said. “It wasn’t cost, because they got the hardware for free, and it wasn’t usability, because we changed the instructions to make those easier. In the end, risk communication was key.”

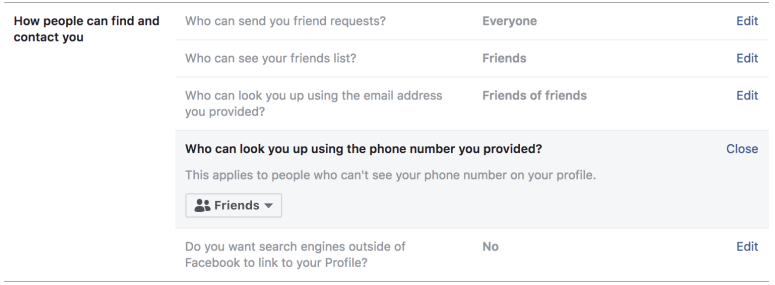

When 2FA means sweet FA privacy: Facebook admits it slurps mobe numbers for more than just profile security

Actually getting this message across needs a variety of techniques. Millennials, they noted, were much less concerned with the loss of personal information, with many saying they put all that info online in public anyway. But show how their bank accounts could get pillaged, and they sat up and paid attention.

The most effective way to get the security message across appears to be video. Dr Camp said that a video likening password reuse to reusing a toothbrush to clean a toilet got the message across more effectively than a printed warning. The point is not only that you shouldn’t reuse passwords, but that password security matters, and MFA is part of that. Single-factor, password-only security is flimsy compared to MFA protections.

That said, longer videos worked best for older folks, while shorter ones were better at convincing da yoof.

There are also privacy fears. Das noted that biometric two-factor systems – think fingerprints and face scans – were the most popular with users by a long chalk. But their adoption has been hurt by concerns that, if biometric data such as an iris scan is stolen, you can’t change your eyes.

With less than 10 per cent of Gmail users logging in with two-step authentication, last time we checked, there’s clearly a long way to go. But with a little more encouragement, adoption rates can be increased, the two academics concluded. ®

Article source: http://go.theregister.com/feed/www.theregister.co.uk/2019/03/06/password_two_factor_auth_security/