Brave browser explains Facebook whitelist to concerned users

Privacy-conscious web browser company Brave was busy trying to correct the record this week after someone posted what looked like a whitelist in its code allowing its browser to communicate with Facebook from third-party websites.

Launched in 2016, Brave is a browser that stakes its business model on user privacy. Instead of just serving up user browsing data to advertisers, its developers designed it to put control in the users’ hands. Rather than allowing advertisers to track its users, the browser blocks ad trackers and instead leaves users’ browsing data encrypted on their machines. It then gives users the option to receive ads by signalling basic information about their intentions to advertisers, but only with user permission. It rewards users for this with an Ethereum blockchain-based token called the Basic Attention Token (BAT). Users can also credit publishers that they like with the tokens.

Brave’s FAQ explains:

Ads and trackers are blocked by default. You can allow ads and trackers in the preferences panel.

Yet a post on Y Combinator’s Hacker News site reveals that the browser has whitelisted hosts belonging to at least two social media sites known to be aggressive about slurping user data: Facebook and Twitter. The post points to a code commit on Brave’s GitHub repository from April 2017 that includes the following code:

const whitelistHosts = ['connect.facebook.net', 'connect.facebook.com', 'staticxx.facebook.com', 'www.facebook.com', 'scontent.xx.fbcdn.net', 'pbs.twimg.com', 'scontent-sjc2-1.xx.fbcdn.net', 'platform.twitter.com', 'syndication.twitter.com', 'cdn.syndication.twimg.com']

The code was prefaced with this:

// Temporary whitelist until we find a better solution

The whitelist appeared in an archived version of the repository, but it also turns up in the current master branch.
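To see why a list like this worries privacy-minded users, here is a minimal sketch of how a host whitelist typically interacts with a block list. It is not Brave’s actual code: the `shouldBlock` function and the `blockedHosts` list are hypothetical names invented for illustration, and only the two whitelisted hosts are taken from the commit.

```javascript
// Hypothetical sketch, NOT Brave's actual blocking code.
// Two hosts copied from the whitelist quoted in the commit.
const whitelistHosts = [
  'connect.facebook.net',
  'platform.twitter.com'
]

// Illustrative block list; 'tracker.example' is a made-up entry.
const blockedHosts = ['connect.facebook.net', 'tracker.example']

// Returns true when a request to `requestUrl` should be blocked.
function shouldBlock (requestUrl) {
  const host = new URL(requestUrl).hostname
  if (whitelistHosts.includes(host)) {
    // Whitelisted hosts bypass the block list entirely.
    return false
  }
  return blockedHosts.includes(host)
}
```

In this sketch, a request to `connect.facebook.net` gets through even though the same host is on the block list, which is exactly the behaviour that raised eyebrows.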

Brave staff have commented on the issue in several separate threads. CTO Brian Bondy replied directly in the Hacker News thread, saying:

There’s a balance between breaking the web and being as strict as possible. Saying we fully allow Facebook tracking isn’t right, but we admittedly need more strict-mode like settings for privacy conscious users.

He added that Brave’s Facebook blocking is “at least as good” as that of uBlock Origin, a cross-platform ad blocker.

So if the entries in the whitelist aren’t ad trackers, what are they?

Brave’s director of business development Luke Mulks dived deeper, calling stories in the press about whitelisting Facebook trackers inaccurate. He explained that the browser has to allow these JavaScript events through to support basic functionality on third-party sites.

The domains listed in the article as exceptions are related to Facebook’s JS SDK that publishers implement for user auth and sharing, likes, etc.

Blocking those events outright would break that Facebook functionality on a whole heap of sites, he said.

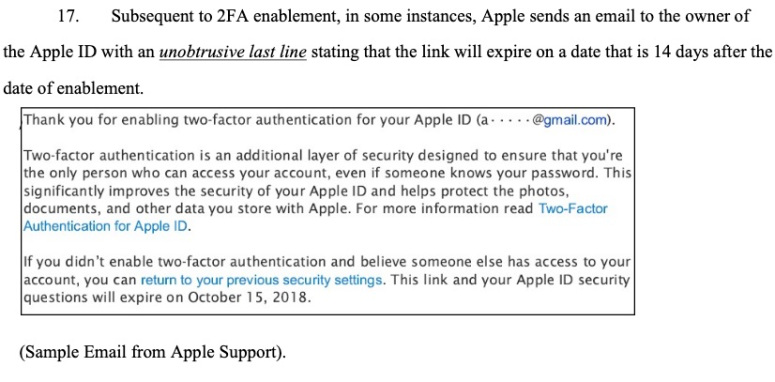

Along with Bondy, he cites GitHub commits from three weeks ago that updated the browser’s ad blocking lists, explicitly blocking Facebook requests used for tracking.

So, these JavaScript exceptions can’t be used to track people? That’s right, according to Brave co-founder Brendan Eich. He weighed in on Twitter and in the Reddit forums, arguing that the Facebook login button can’t be used as a tracker without third-party cookies, which the browser blocks.
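Eich’s argument rests on the request reaching Facebook without a cookie attached. The following is a rough sketch of that idea, not how Brave implements it. The helper names `siteOf`, `isThirdParty` and `filterHeaders` are hypothetical, and the site comparison naively uses the last two labels of the hostname, whereas real browsers rely on the Public Suffix List.

```javascript
// Hypothetical sketch of third-party cookie blocking, not Brave's code.

// Naive "site" extraction: the last two labels of the hostname.
// Assumption: real browsers consult the Public Suffix List instead.
function siteOf (url) {
  return new URL(url).hostname.split('.').slice(-2).join('.')
}

// A request is third-party when its site differs from the page's site.
function isThirdParty (pageUrl, requestUrl) {
  return siteOf(pageUrl) !== siteOf(requestUrl)
}

// Returns a copy of `headers` with Cookie removed for third-party requests.
function filterHeaders (pageUrl, requestUrl, headers) {
  const filtered = Object.assign({}, headers)
  if (isThirdParty(pageUrl, requestUrl)) {
    delete filtered.Cookie
  }
  return filtered
}
```

Under these assumptions, a like button loaded from `www.facebook.com` on a news site still triggers a network request, but the request carries no cookie identifying the logged-in user.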

Mind you, data slurpers like Facebook can also track people by fingerprinting their browsers and machines. Eich doesn’t think that alone is enough to enable tracking. He said:

A network request does not by itself enable tracking – IP address fingerprinting is not robust, especially on mobile.

The company used the whitelist when it was relatively small because it didn’t have the resources to come up with a more permanent solution, he said, adding that Brave will work to empty the list over time.

Eich has a solid track record in the tech business, having invented JavaScript and co-founded Mozilla. He was eager to avert any user doubt over Brave’s privacy stance – after all, privacy-conscious users might well take their browsing elsewhere if they feel that Brave is deliberately deceiving them. He added:

We are not a “cloud” or “social” server-holds-your-data company pretending to be on your side. We reject that via zero-knowledge/blind-signature cryptography and client-side computation. Can’t be evil trumps don’t be evil.

Eich and his team could have opted to break things like Facebook likes and Facebook-based authentication on third-party sites, but that would have left users wondering why hordes of sites didn’t look the same in Brave as they did in other browsers. That would have been a big risk for a consumer-facing browser trying to gain traction, and it was one that Brave was understandably unwilling to take.

Article source: http://feedproxy.google.com/~r/nakedsecurity/~3/a-5irms-6oA/