Got an SMS offering $$$ refund? Don’t fall for it…

SMS, also known as text messaging, may be a bit of a “yesterday” technology…

…but SMS phishing is alive and well, and a good reminder that KISS really works.

If you aren’t familiar with the acronym KISS, it’s short for “keep it simple, stupid.”

Despite the rather insulting tone when you say the phrase out aloud, the underlying ideas work rather well in cybercrime.

Don’t overcomplicate things; pick a believable lie and stick to it; and make it easy for the victim to “figure it out” for themselves, so they don’t feel confused or pressurised anywhere along the way.

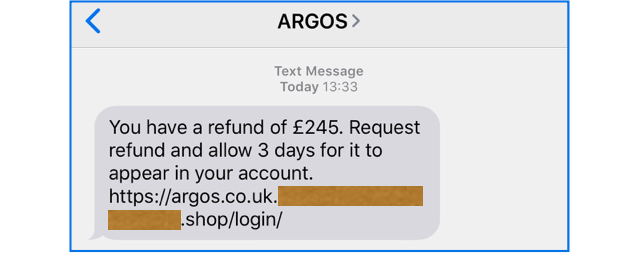

Here’s an SMS phish we received today, claiming to come from Argos, a well-known and popular UK catalogue merchant:

You have a refund of £245. Request refund and allow 3 days for it to appear in your account.

http://argos.co.uk.XXXXXXX.shop/login

The wording here probably isn’t exactly what a UK retailer would write in English (we’re not going to say more, lest we give the crooks ideas for next time!), but it’s believable enough.

That’s because SMS messages, of necessity, rely on a brief and direct style that makes it much easier to get the spelling and grammar right.

Ironically, after years of not buying anything from Argos, we recently purchased a neat new phone for our Android research from an Argos shop – the phone we mentioned in a recent podcast, in fact – so we weren’t particularly surprised or even annoyed to see a message apparently from the company.

We suspect that many people in the UK will be in a similar position, perhaps having done some Christmas shopping at a genuine Argos, or having tried to return an unwanted gift for a genuine refund.

The login link ought to be a giveaway, but the crooks have used an age-old trick that still works well: register an innocent looking domain name, such as online.example, and add the domain name you want to phish at the start.

This works because once you own the domain online.example, you automatically acquire the right to use any subdomain, all the way from http://www.online.example to some.genuine.domain.online.example.

Because we read from left-to-right, it’s easy to spot what looks like a domain name at the left-hand end of the URL and not realise that it’s just a subdomain specified under a completely unrelated domain.

These crooks chose the top-level domain (TLD) .shop, which is open for registrations from anywhere in the world.

Although .shop domains are generally a bit pricier than TLDs such as .com and .net, we found registrars with special deals offering cool-looking .shop names starting under $10.

What if you click through?

What harm in looking?

Well, the problem with clicking through is that you put yourself directly in harm’s way.

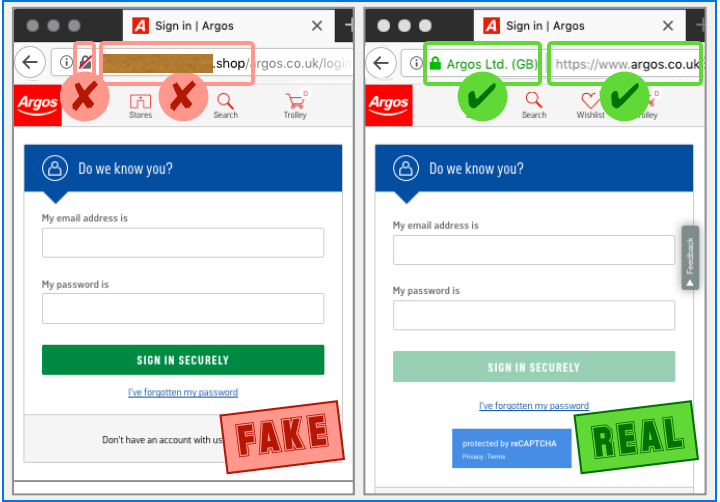

Visting the link provided takes you to a pretty good facsimile of the real Argos login page, shown below on the left (the real page is on the right):

There’s not much fanfare, just a realistic clone of exactly the sort of content you’d expect to see, except for the lack of HTTPS and the not-quite-right domain name.

Getting free HTTPS certificates is pretty easy these days, so the crooks could have taken this extra step if they’d wanted.

Perhaps they were feeling lazy, or perhaps they figured that anyone who’d take care to check for the presence of a certificate might also click through to view the certificate, which would only serve to emphasise that it didn’t belong to Argos?

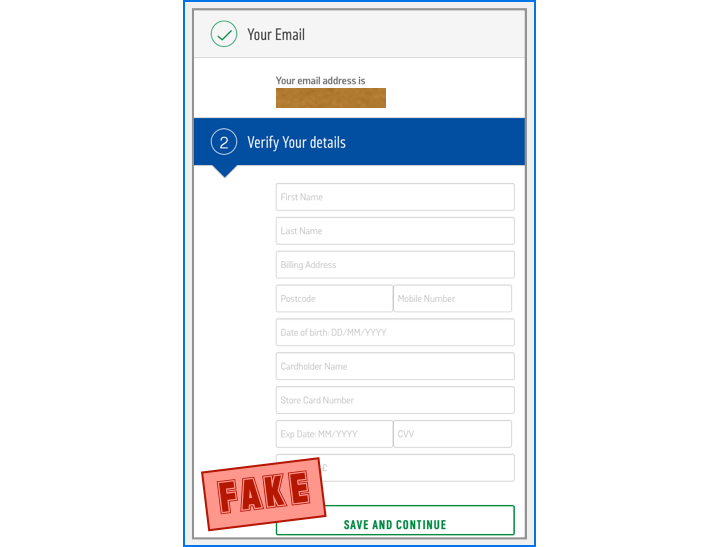

If you do fill in a username and password, then you have not only handed both of them to the crooks, but also embarked on a longer phishing expedition by the crooks, because the next page asks for more:

We didn’t try going any further than this, so we can’t tell you what the crooks might ask you next – but one thing is clear: by the time you get here, you’ve already given away far too much.

What to do?

- Check the full domain name. Don’t let your eyes wander just because the server name you see in the link starts off correctly. What matters is how it ends.

- Look for the padlock. These days, many phishing sites have a web security certificate so you will often see a padlock even on a bogus site. So the presence of a padlock doesn’t tell you much on its own. But the absence of a padlock is an instant warning saying, “Go no further!”

- Don’t use login links in SMSes or emails. If you think you are getting a refund, find your own way to the merchant’s login page, perhaps via a bookmark, a search engine, or a printed invoice from earlier. It’s a bit slower than just clicking through but it’s way safer.

Here’s to a phish-resistant 2019!

Article source: http://feedproxy.google.com/~r/nakedsecurity/~3/VR8LnxSyl9c/