25 Years Later: Looking Back at the First Great (Cyber) Bank Heist

The banking industry was at a crossroads 25 years ago, at the beginning of the digital world we know today. Banks were struggling to lower costs while improving customer access, and physical branches and human tellers were being replaced by ATMs and electronic services.

It was also when Citibank fell victim to what many consider one of the first great cybercrimes. Vladimir Levin made headlines in 1994 when he tricked the bank into giving him access to $10 million belonging to several large corporate customers via its dial-up wire transfer service. Levin transferred the money to accounts set up in Finland, the United States, the Netherlands, Germany, and Israel. He was eventually caught, and Citibank ultimately recovered most of the money.

Looking back, it may have been the first successful penetration of the systems that transfer trillions of dollars a day around the globe. The moment not only captured the attention of the world but also caught the attention of my teenage self, inspiring my curiosity about, and eventually a career in, the world of cybersecurity.

My young mind struggled to comprehend how something so seemingly simple could baffle the defenses of one of the world’s largest financial institutions. As the Los Angeles Times reported in 1995, “The incident underscores the vulnerability of financial institutions as they come to increasingly rely on electronic transactions. … But as they seek to promote electronic services — and cut the high costs of running branch offices — they face risks.”

I think we could easily say we’re in a similar situation today.

From Bonnie and Clyde to Black Hat

When I first learned of the heist through a documentary on local British television, I was shocked to learn that someone could take money from a bank without even having to step into a branch. It was armchair fraud: the perpetrator never left a physical fingerprint, all while essentially penetrating the impenetrable.

The 1990s and 2000s were abuzz with the excitement of the Internet and proliferation of access to Internet browsers. As we welcomed this new and wild World Wide Web, banks began to digitize their storefronts. However, the Internet wasn’t inherently designed with digital security in mind. The framework of the Internet was born in academia, an altruistic environment built around trust and exploration.

But with every gain, there was someone trying to game the system for a variety of reasons. Some were just curious what was accessible in this digital frontier. Others, like Levin, had more nefarious goals in mind.

Fast forward 20-plus years, and while we are in an entirely unrecognizable digital world, we’re still facing a similar battle. Rather than spoofing dial-up systems, we have industrial and government-level cybercrime, unpredictable intelligent bots, and vast amounts of computing power to deal with. Yet while there are similarities, there are a few important differences:

- Scalability: While fraudsters were sophisticated for their time, scalability is what really shapes how we understand fraud today. In the past, there were thousands of smaller banks and just a handful of people around the world capable of "digitally" breaking in and making off with the loot. Now there are fewer, but larger, banks to steal from, yet with today's digital resources, fraudsters can maximize the footprint of their criminality. They target governments or large enterprises, or they simply get out of the robbery business and make their riches selling tools globally across the Dark Web, which allows anyone with a computer, an Internet connection, and a few hundred dollars to become a cybercriminal.

- The rate of change: There was massive acceleration from the Industrial Revolution to the digital revolution. While it's well known that the rate of change in the Industrial Revolution was swift, today's rate of change is unmatched. Change inherently brings risk, and with the finance industry rapidly transforming, threats often move faster than the solutions that target them. This new rate of change has transformed the job of the CISO, who now must think strategically, and even abstractly, about protecting what isn't even known yet.

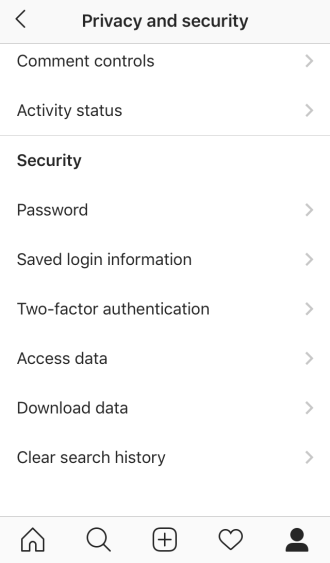

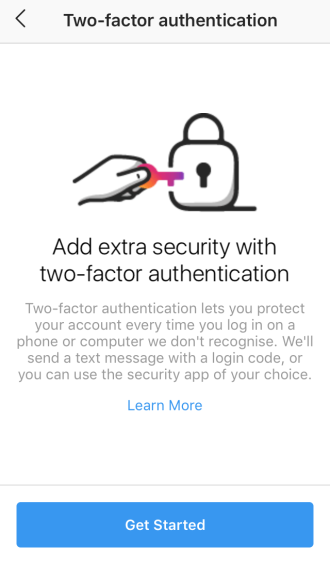

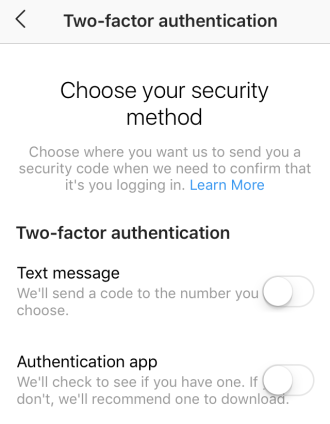

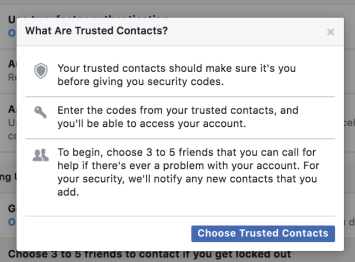

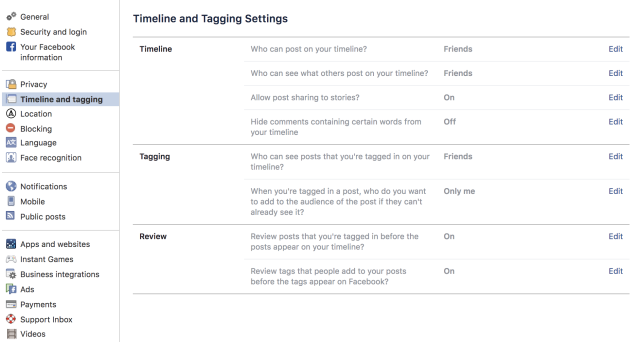

- Digital identity: The concept of digital identity wasn't on the radar 24 years ago. But today, we have hundreds of websites where we must manage our identity, even if only about 10 are actually important. Consider Facebook, where nearly one-third of the global population logs on and also uses the same credentials to access millions of other accounts and services. In the digital world, you can become anyone as long as you can get hold of their credentials, whether that is a password, a Social Security number, or a fingerprint.

Don’t Fight Fraud Alone

Today’s solutions must absolutely be comprehensive, involving much more than simple cross-industry collaboration. Regulations and frameworks can provide guidance and foster a productive global conversation about the issues at hand, but they take time to put into place and can’t adapt as quickly as the threats they are meant to mitigate. Fighting fraud today requires real-time intelligence. For security executives, it means a continual education on the latest tools, trends, and trials of the cybersecurity market.

The financial services industry has adapted to this new age of fraud by promoting strategic partnerships — often between financial technology companies (fintechs) and banks. While banks bring to the table many decades of refined, robust security measures and regulatory knowledge, fintechs offer their innovative initiatives, agility, and scalability to develop even more sophisticated methodologies for fighting fraud. Every organization is facing an uphill battle as the “what” to protect and “who” to protect it from are rapidly changing. Fortunately, these partnerships offer the right mix of expertise, experience, and innovation to quickly adapt and respond to changes in the cyber ecosystem, often providing a blueprint for others to follow suit.

Will There Be a Great Bank Heist of 2024?

Today, we have a better understanding of what comprises our digital assets, but it remains a constant battle to determine how best to secure them. The monetary losses financial institutions suffer from fraud and theft are staggering. Worse, the cybersecurity space is maturing in more insidious directions, suggesting we need to reconsider the value placed on different assets. When such assets fall into the wrong hands, the damage goes beyond that of a traditional bank theft: it affects the livelihoods of many more citizens and the backbone of our modern society and economy.

Zia Hayat is CEO of Callsign, a company that specializes in frictionless identification. Zia has a PhD in information systems security from the University of Southampton and has worked in cybersecurity for both BAE Systems and Lloyds Banking Group. He founded Callsign in …