Serious Security: When cryptographic certificates attack

Artificial intelligence, fuzzy logic, neural networks, deep learning…

…any tools that help computers to behave in a way that’s closer to what we could call “thinking” are immensely useful in fighting cybercrime.

That’s because what’s generally known today as machine learning is good at dealing quickly with immense amounts of threat-related data, pruning out the many irrelevancies to leave the interesting and important stuff in clear sight.

But don’t knock human savvy just yet!

Sometimes, a single, informed glance by a human expert is more than enough, like this great tweet from last week by computer security practitioner Paul Melson:

Do you see what I see? -----BEGIN CERTIFICATE----- UEsDBBQ…

Melson didn’t say exactly how or where he came across the file mentioned in his tweet. Given that he describes himself as an “unrepentant blue teamer” – someone whose job is to keep unwanted visitors out of a network, or to find and eject those who have already sneaked in – it’s reasonable to infer that he can’t tell us, and isn’t planning to. Let’s just assume he spotted the file as part of ruining some malicious hacker’s sneaky experiments.

If you’re a security researcher yourself, you’re probably going, “Hey, that’s cool!” (Or, perhaps more appropriately, “That’s very uncool.”)

But if you aren’t a sysadmin, you might be wondering what the fuss is about – so we figured it would be informative to dig into the story behind the story.

Why does the text -----BEGIN CERTIFICATE----- UEsDBBQ... ring all sorts of alarm bells, and what do those bells tell you?

Here goes.

Why the alarm bells?

If you’ve ever dealt with public key cryptography – for example, setting up web servers to accept HTTPS connections – you’ll know you need a public/private keypair and a cryptographically signed certificate that vouches for your public key.

HTTPS relies on an underlying protocol called TLS, short for Transport Layer Security, and most TLS systems use a file format called X.509 to store their cryptographic material.

X.509 is a product of the 1980s telecommunications world, and follows the fashions of that era – its native representation is based on a rather complicated binary file storage system called DER (Distinguished Encoding Rules), which is, in turn, based on the resoundingly-named Abstract Syntax Notation One (ASN.1).

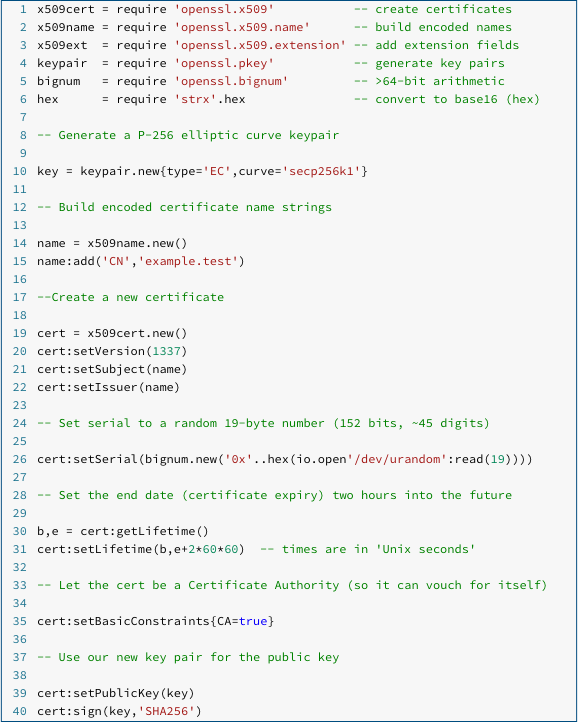

Let’s make a self-signed certificate of our own to play with (we used Lua code here, linked with LibreSSL, but you don’t need to reproduce what we did, so don’t worry if you aren’t a programmer):

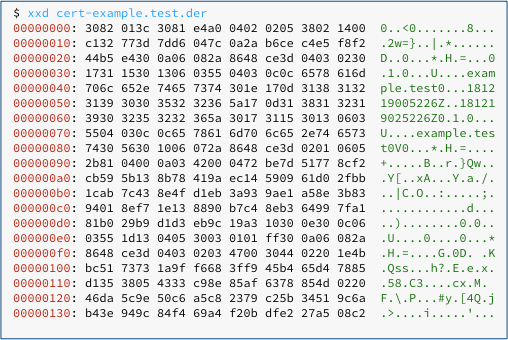

We saved the raw binary data of the certificate as a DER file, giving us a decidedly text-unfriendly certificate that looks like this when dumped:

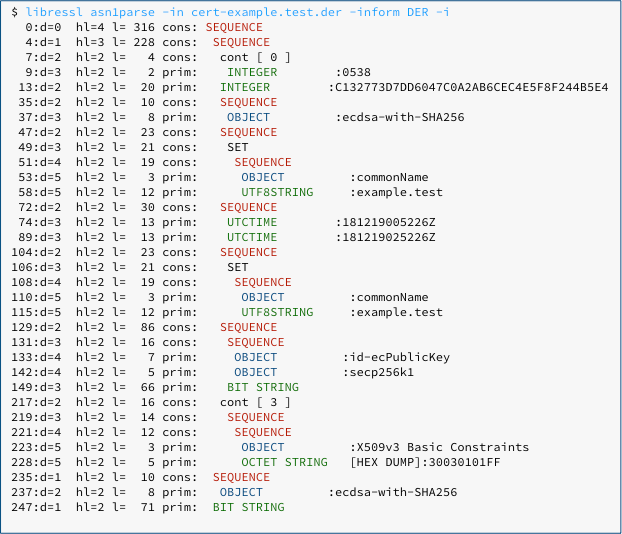

Even with the careful help of LibreSSL’s built-in ASN.1 parser, we get the still-not-very-readable:

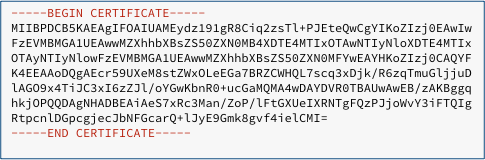

To make X.509 certificates more robust – so you can add them to emails, keep them in text files and so on without risk of corruption – they are usually saved in Privacy-Enhanced Mail format, or PEM for short:

PEM files consist of the raw DER data converted into base64, a text-only format in which four text characters are used to encode every three bytes of binary data, thus sticking to plain ASCII and avoiding control characters, risky punctuation marks and so on.
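If you'd like to see the DER-to-PEM step in action, here is a minimal Python sketch. It assumes the usual PEM conventions: base64 text wrapped at 64 characters per line, between BEGIN and END marker lines. The `der_to_pem` helper is our own illustration, not the LibreSSL code we used, and the input bytes are placeholder data shaped like a 442-byte DER blob, not a real certificate.

```python
import base64

def der_to_pem(der: bytes, label: str = "CERTIFICATE") -> str:
    """Wrap raw DER bytes in PEM armor: base64 text in lines of 64 characters."""
    b64 = base64.b64encode(der).decode("ascii")
    lines = [b64[i:i + 64] for i in range(0, len(b64), 64)]
    return "-----BEGIN {0}-----\n{1}\n-----END {0}-----\n".format(label, "\n".join(lines))

# Every 3 raw bytes become exactly 4 base64 characters.
print(base64.b64encode(bytes([0x30, 0x82, 0x01])).decode("ascii"))  # MIIB

# Placeholder data (NOT a real certificate): a SEQUENCE header 30 82 01 b6
# followed by 438 zero bytes, for 442 bytes in total.
fake_der = bytes([0x30, 0x82, 0x01, 0xB6]) + bytes(438)
pem = der_to_pem(fake_der)
print(pem.splitlines()[0])      # -----BEGIN CERTIFICATE-----
print(pem.splitlines()[1][:4])  # MIIB
```

The output is pure ASCII, so it survives email and text files intact. Decoding the lines between the markers gives back exactly the original bytes.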

So, as a security practitioner, you’ll quickly get used to seeing -----BEGIN CERTIFICATE----- in security-related files.

In fact, you sort-of stop noticing certificates after a while – they aren’t supposed to be secret, and any modification, whether by accident or design, automatically renders them useless.

So why would Melson’s rogue certificate stand out?

Certificates are there to share. Every time someone connects to your website, you send them a copy of your certificate, which contains your public key and a digital signature by which a trusted third party vouches for the fact that it really is your public key, issued for your website.

What are certificates supposed to look like?

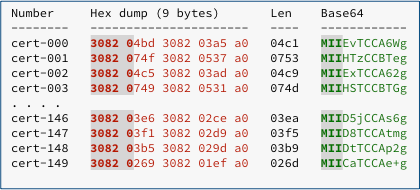

Let’s dump the first few bytes of the 150 certificates that are built into Mozilla products as officially-trusted certificate authorities – these are the trusted third parties that Mozilla currently accepts as fit to vouch for websites that you visit with Firefox:

Note that every certificate starts with the bytes 30 82 0x.

That’s because the X.509 encoding always kicks off like this:

30 - what follows is an ASN.1 SEQUENCE of objects
82 - the next 2 bytes encode the length of the rest of the objects
HH - the high 8 bits of the 2-byte length
LL - the low 8 bits of the 2-byte length

The lengths of all these Mozilla “root” certificates range from 442 to 2007 bytes, so their encoded lengths are never lower than 0x0100 (256 in decimal) and never bigger than 0x07FF (2047). Their X.509 encodings therefore always start with one of these sequences:

30 82 01 00
30 82 01 01
30 82 01 02
. . .
30 82 07 fd
30 82 07 fe
30 82 07 ff

(We’ve printed the hexadecimal length of each DER file in the chart above. Note how it always comes out as the length encoded in the SEQUENCE header plus 4: the four bytes of the header itself, followed by the content whose length the header records.)
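The "header length plus 4" arithmetic is easy to check in code. This minimal sketch handles only the `30 82 HH LL` form discussed here. Real DER also has shorter and longer length forms, which it deliberately rejects. The function names are our own, hypothetical helpers.

```python
def der_sequence_total_length(der: bytes) -> int:
    """Total file size implied by a DER header of the form 30 82 HH LL."""
    if len(der) < 4 or der[0] != 0x30 or der[1] != 0x82:
        raise ValueError("not a SEQUENCE with a two-byte long-form length")
    content_length = (der[2] << 8) | der[3]  # HH is the high byte, LL the low byte
    return 4 + content_length                 # 4 header bytes plus the content

def header_matches_size(der: bytes) -> bool:
    """True if the header's length claim agrees with the actual size of the data."""
    try:
        return der_sequence_total_length(der) == len(der)
    except ValueError:
        return False

# Headers that would correspond to the 442-byte and 2007-byte sizes quoted above.
print(der_sequence_total_length(bytes([0x30, 0x82, 0x01, 0xB6])))  # 442
print(der_sequence_total_length(bytes([0x30, 0x82, 0x07, 0xD3])))  # 2007
```

A mismatch between the claimed length and the real file size is a cheap first sign that something labelled as a certificate isn't one.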

Now, when you convert three bytes starting 30 82 0x into base64 notation, you end up with four base64 characters like this…

Raw        Base64
------     ------
30 82 00   MIIA
30 82 01   MIIB
30 82 02   MIIC
30 82 03   MIID
30 82 04   MIIE
. . .

…and so on.

In fact, you get this pattern all the way to 30 82 3f:

Raw        Base64
------     ------
. . .
30 82 3d   MII9
30 82 3e   MII+
30 82 3f   MII/
30 82 40   MIJA
30 82 41   MIJB

In other words, for any X.509 certificate whose encoded length lies between 0x0100 and 0x3FFF (256 to 16,383 bytes), the first three base64 characters of the corresponding PEM data will always be MII, just as you saw in the example PEM certificate we created earlier.
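You don't have to take the table on trust. This short Python sketch base64-encodes `30 82 HH` for every possible high-length byte `HH` and reports which values keep the `MII` prefix. The helper name `pem_prefix` is our own.

```python
import base64

def pem_prefix(hh: int) -> str:
    """Base64 encoding of the first three DER bytes 30 82 HH."""
    return base64.b64encode(bytes([0x30, 0x82, hh])).decode("ascii")

# Which high-length bytes keep the first three characters as "MII"?
mii = [hh for hh in range(256) if pem_prefix(hh).startswith("MII")]
print(f"MII for HH = 0x{mii[0]:02x}..0x{mii[-1]:02x}")  # MII for HH = 0x00..0x3f
print(pem_prefix(0x3F), pem_prefix(0x40))               # MII/ MIJA
```

The reason is the bit layout. The third base64 character takes the low 4 bits of `0x82` plus the top 2 bits of `HH`, so it stays `I` only while those two bits are zero, that is, for `HH` up to `0x3f`.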

And therefore -----BEGIN CERTIFICATE----- UEsDBBQ smells plain wrong!

Know your base64 magic

Actually, there’s more to it than that.

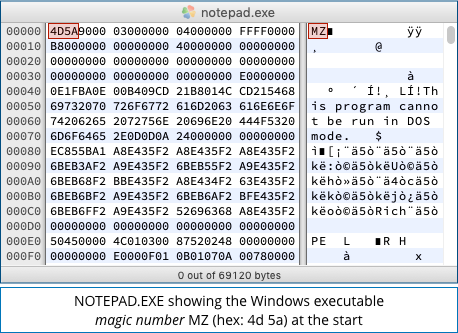

The first two characters of a base64 sequence decode to the first 12 bits of the original content, and many popular file types always start with the same two or more bytes.

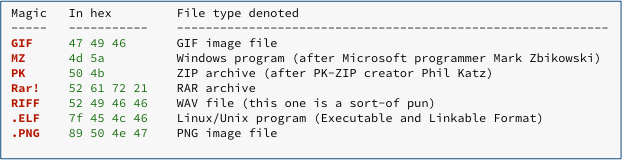

Constant bytes at the start of files are known in the jargon as magic numbers, because they magically tell you the type of the file.

More precisely, magic numbers give you a strong hint that a file will turn out to be of type X, or tell you that it can’t be of type Y or Z.

Here are some common examples:

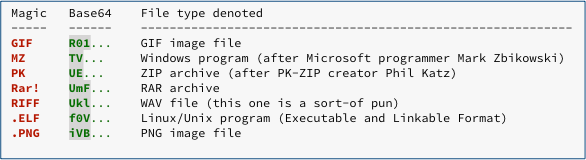

Here are those sequences converted into base64 strings, shortened to just two or three characters for easy recognition:

Experienced security researchers will readily recognise dozens of these base64 “hints”, but even if you only memorise TV (which stands for MZ) and UE (for PK), you will be able to spot loads of potentially malicious files at a glance.
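Here's a minimal sketch of how you might compute these base64 hints yourself and use them to guess what a blob of base64 text contains. The table holds just the three signatures discussed in this article and is far from exhaustive. The helper names are hypothetical. Each base64 character carries 6 bits, so a signature of n bytes pins down (8 × n) // 6 characters. That gives `TV` for the 2-byte `MZ` and the longer `UEsDB` for the 4-byte ZIP header `PK\x03\x04`.

```python
import base64

# A few well-known magic numbers (the leading bytes of a file).
MAGIC = {
    "Windows EXE/DLL": b"MZ",
    "ZIP archive": b"PK\x03\x04",
    "DER data (e.g. certificate)": b"\x30\x82",
}

def base64_hint(magic: bytes) -> str:
    """Base64 characters fully determined by the magic bytes alone.

    Each base64 character carries 6 bits, so n magic bytes pin down
    (8 * n) // 6 characters; later characters depend on the rest of the file.
    """
    safe = (8 * len(magic)) // 6
    return base64.b64encode(magic).decode("ascii")[:safe]

def guess_from_base64(text: str) -> list:
    """Names of file types whose base64 hint matches the start of `text`."""
    text = text.strip()
    return [name for name, magic in MAGIC.items() if text.startswith(base64_hint(magic))]

for name, magic in MAGIC.items():
    print(f"{name:28} {base64_hint(magic)}")

print(guess_from_base64("UEsDBBQ"))  # ['ZIP archive']
```

A match is only a strong hint, not proof. But a "certificate" whose body doesn't start with `MI` is worth a second look.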

The MZ file marker covers a whole range of Windows executable files, including both EXEs and DLLs, which share the same format.

And ZIP archives are used for many purposes, including by Android apps, which come with a .APK extension but are stored in ZIP format, and Microsoft Word files, which use a variety of different internal layouts, including ZIP.

All of this raises the question, “What had Melson spotted?”

From the UE at the start, you can tell at once it was a ZIP file, but what was inside?

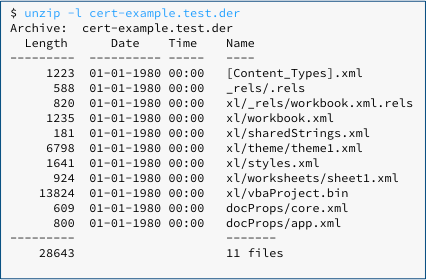

Because it’s in ZIP format, we can present it to the unzip program to list what’s inside it, revealing the tell-tale internal structure of an Excel spreadsheet file:

The presence of a component called xl/vbaProject.bin suggests that this spreadsheet contains macros (embedded program code).
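If you don't have `unzip` to hand, Python's standard `zipfile` module can do the same listing. This minimal sketch lists an in-memory archive and flags any `vbaProject.bin` member. The stand-in archive below uses typical Excel member names with placeholder contents. It is not Melson's sample, and listing members like this neither extracts nor runs any macro code.

```python
import io
import zipfile

def list_members(blob: bytes) -> list:
    """Names of the members of an in-memory ZIP archive, similar to `unzip -l`."""
    with zipfile.ZipFile(io.BytesIO(blob)) as zf:
        return zf.namelist()

def vba_parts(names: list) -> list:
    """Members that look like embedded VBA projects (e.g. xl/vbaProject.bin)."""
    return [n for n in names if n.lower().endswith("vbaproject.bin")]

# Build a stand-in archive with a few typical Excel member names.
# The member contents here are placeholders, not a real workbook or macro.
buf = io.BytesIO()
with zipfile.ZipFile(buf, "w") as zf:
    for name in ["[Content_Types].xml", "xl/workbook.xml", "xl/vbaProject.bin"]:
        zf.writestr(name, b"placeholder")

names = list_members(buf.getvalue())
print(vba_parts(names))  # ['xl/vbaProject.bin']
```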

Extracting the VBA (Visual Basic for Applications) macro code from the vbaProject.bin file reveals an Auto_Open function.

As its name suggests, this function is intended to execute automatically when the document is opened:

By default, Office won’t automatically run macros, so a little caution will keep you safe – crooks trying to spread malware this way not only have to persuade you to open the document but also have to trick you into clicking a button to [Enable macros].

Fortunately, it looks as though Melson spotted this one while the crooks were still messing around with the idea, because the embedded Auto_Open macro simply tries to run a program called C:\shell.exe that has already been installed in the root directory of the C: drive.

Given that the root directory is not writable by a regular user, any crook hiding malware there probably already has sysadmin powers, so this attack really doesn’t add much.

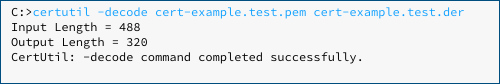

But it’s a neat way of hiding in plain sight – and it means that the crooks can use the inconspicuous and official Windows utility CERTUTIL.EXE to decode the file.

CERTUTIL knows to expect the -----BEGIN CERTIFICATE----- marker and therefore automatically strips it off before un-base64ing the enclosed data:

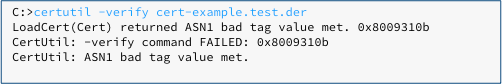
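The same armor-stripping trick works against the crooks if you do it safely yourself. This Python sketch removes the BEGIN/END lines, base64-decodes the body, and then sniffs the leading bytes instead of believing the label. It only illustrates the idea and is not how CERTUTIL works internally. The rogue file is a stand-in we build ourselves: a tiny ZIP wrapped in CERTIFICATE armor.

```python
import base64
import io
import re
import zipfile

# Matches one PEM block and captures its label and base64 body.
PEM_BLOCK = re.compile(r"-----BEGIN ([A-Z0-9 ]+)-----(.*?)-----END \1-----", re.DOTALL)

def unarmor(pem_text: str):
    """Strip the BEGIN/END lines and base64-decode what sits between them."""
    m = PEM_BLOCK.search(pem_text)
    if m is None:
        raise ValueError("no PEM block found")
    body = "".join(m.group(2).split())  # drop newlines and other whitespace
    return m.group(1), base64.b64decode(body)

def sniff(data: bytes) -> str:
    """Guess a type from the leading bytes, ignoring whatever the label says."""
    if data.startswith(b"PK\x03\x04"):
        return "ZIP"
    if data.startswith(b"MZ"):
        return "Windows executable"
    if data.startswith(b"\x30\x82"):
        return "possibly DER"
    return "unknown"

# A stand-in for the rogue file: a tiny ZIP wrapped in CERTIFICATE armor.
buf = io.BytesIO()
with zipfile.ZipFile(buf, "w") as zf:
    zf.writestr("hello.txt", "hi")
b64 = base64.b64encode(buf.getvalue()).decode("ascii")
rogue = "-----BEGIN CERTIFICATE-----\n" + b64 + "\n-----END CERTIFICATE-----\n"

label, payload = unarmor(rogue)
print(label, "->", sniff(payload))  # CERTIFICATE -> ZIP
print(rogue.splitlines()[1][:5])    # UEsDB
```

Note that nothing here opens, unzips, or runs the payload. The only question it asks is whether the label and the content agree.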

Of course, if you use CERTUTIL to verify the extracted “certificate” afterwards, you will immediately realise that it is bogus, because it isn’t in DER format at all…

…but the crooks already know that and can therefore use CERTUTIL as an attack utility rather than as a security tool.

What to do?

- Don’t trust files based only on filenames or headers they include. The crooks don’t play by the rules so they routinely mis-label data in the hope of staying off your radar a bit longer.

- Take care when you verify downloaded data. Make sure that the scripts or tools you use to validate untrusted files don’t themselves try to “assist” you by choosing a helper app based on what the files seem to be.
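Both points boil down to checking content without letting anything "helpful" act on it. As one minimal illustration, this Python sketch compares a filename's extension with the file's leading bytes and reports a mismatch. The extension table is hypothetical and deliberately tiny. Note that small DER objects can legitimately use a shorter length form than `30 82`. Even a match only means the header agrees with the label, not that the file is safe.

```python
from pathlib import PurePath
from typing import Optional

# Illustrative (hypothetical, far from complete) map of extensions to leading bytes.
EXPECTED_MAGIC = {
    ".exe": b"MZ",
    ".dll": b"MZ",
    ".zip": b"PK\x03\x04",
    ".apk": b"PK\x03\x04",
    ".xlsx": b"PK\x03\x04",
    ".docx": b"PK\x03\x04",
    ".der": b"\x30\x82",  # typical certificate sizes; tiny DER objects differ
}

def label_matches_content(filename: str, data: bytes) -> Optional[bool]:
    """Compare a file's claimed type (its extension) with its actual leading bytes.

    Returns True or False for extensions in the table, None when we have no opinion.
    Nothing is opened, executed, or handed to a helper application.
    """
    expected = EXPECTED_MAGIC.get(PurePath(filename).suffix.lower())
    if expected is None:
        return None
    return data.startswith(expected)

print(label_matches_content("report.docx", b"PK\x03\x04\x14\x00"))  # True
print(label_matches_content("root.der", b"PK\x03\x04\x14\x00"))     # False
print(label_matches_content("notes.txt", b"hello"))                 # None
```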

The second point sounds obvious, but it is easily overlooked.

Notably, never double-click a file just to see what the operating system makes of it – you will often end up launching it into an application you weren’t expecting, with all the security risks that entails, instead of merely viewing it.

Article source: http://feedproxy.google.com/~r/nakedsecurity/~3/jgeQ59eyULY/