Fury as OS X Mavericks users FORCED to sync contact books with iCloud

Apple has removed from Mac OS X Mavericks the ability to directly sync an iPhone’s contact list with its owner’s computer – forcing the user to instead upload their address book to Cupertino’s cloud and download it to the local computer.

Anyone who updates to the latest release of Apple’s desktop operating system, version 10.9, can only synchronize personal contacts and calendar information via Apple’s off-site iCloud, because the feature to do the job via iTunes (specifically version 11) no longer exists.

The change – confirmed by El Reg – comes with no forewarning to those about to upgrade, which will annoy privacy-conscious fanbois already spooked by revelations about the NSA and GCHQ’s surveillance of anyone using an electronic device.

“Apple seems to now want to force its clients, who tend to be high end, to export their contact information to a nation that is at best shoddy at keeping secrets and that is already spying on everyone,” said a Reg reader who tipped us off about the OS X change.

“You could argue the US probably has that information already, but as far as I can tell, a business that uses iCloud for business contacts is in the same position as a business that uses Gmail.”

Our reader is not the only person to have spotted the update: irritated Apple fans have filed support queries to Apple about the change, and argued that resorting to setting up a CardDAV, CalDAV or similar network server is not an acceptable workaround.

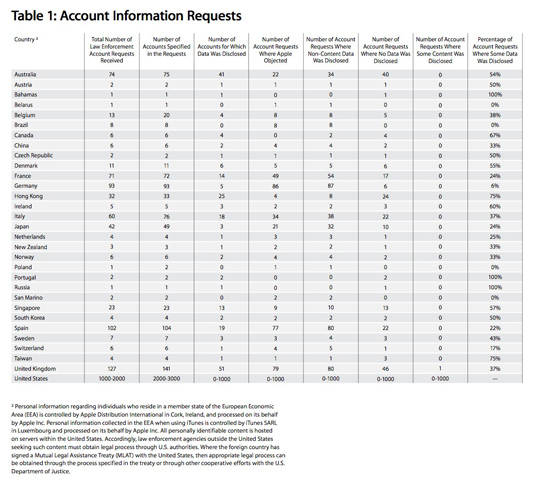

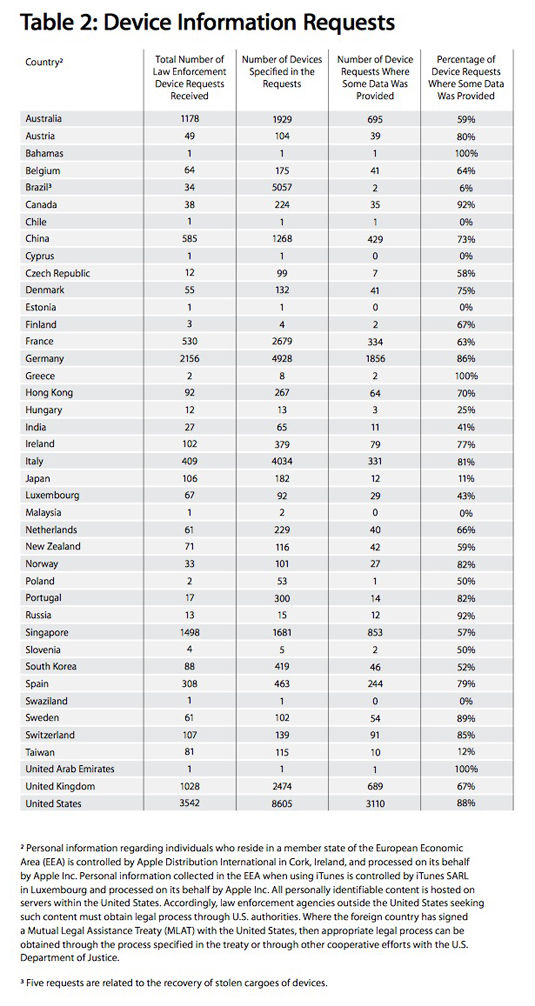

The iCloud-only contact sync policy emerged as Apple published a transparency report that documented the number and types of requests it has received for copies of users’ personal records from cops and intelligence agencies around the world. The US topped the charts, and also bans Apple from revealing specific details about the info slurped – the iPhone maker is opposed to this gagging order.

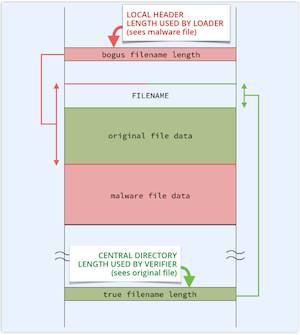

Spook agencies such as Uncle Sam’s NSA don’t necessarily need the contents of your emails and messages for analysis: just the metadata describing who contacted whom and when, or simply who is associated with whom, is enough – thus, address books slurped from cloud providers are a treasure chest of intelligence.

And by mandating iCloud-only syncing of calendars as well as contacts, Apple could end up handing over details of your whereabouts past and future. Of course, if the NSA really wanted someone’s contact book, it could certainly find some way of snaffling it – but tapping up the iCloud is so much easier for them.

Apple goes out of its way to say it gives the privacy of its customers “consideration from the earliest stages of design for all our products and services”, a stance that goes against the iCloud-only sync change, which activists will likely view as a step in the wrong direction by Cupertino.

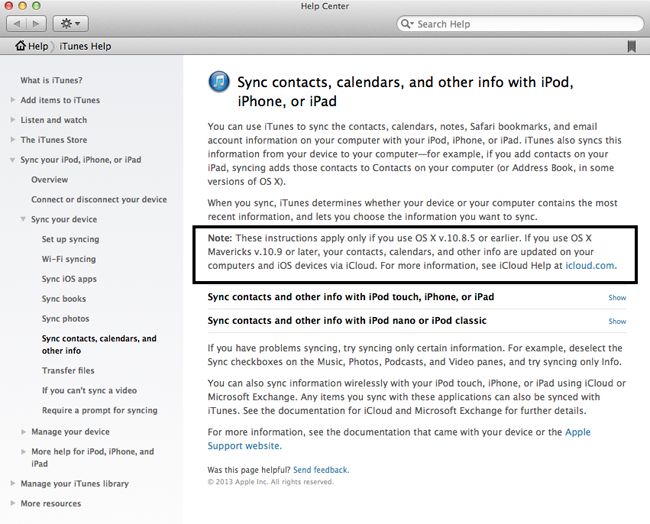

Apple Mac OS X Mavericks help center … a warning lies in the small print

Up close … we zoom in on the cloud sync wording

Mavericks includes a new iCloud Keychain that can store website usernames, passwords, credit card numbers and Wi-Fi network information, and keeps the data up to date across a user’s Apple devices, including iPhones and iPads. While we’re told the data is encrypted using 256-bit AES, security researchers including Mike Shema, director of engineering at cloud security firm Qualys, expressed mixed feelings about the password management feature: it helps people juggle their login credentials, but ultimately users are in the hands of software developers.

“It’s one thing to hear advice that users should have separate passwords for each of their accounts, it’s another to actually follow through on the advice since adhering to it can be such a hassle,” Shema said.

“Something like [Apple’s iCloud] keychain essentially makes this effortless and uniform across a user’s devices – of course, only their Apple devices.

“However, the keychain solves some of the user’s password management problems but none of the app’s. In other words, there may still be weaknesses in how the app handles password storage and password resets, for example. One of the biggest problems in identity security is that apps still equate users with email addresses for password-reset mechanisms.”

On a more positive note, Qualys separately praised Apple for updating Mavericks to mitigate the infamous BEAST SSL snooping attack. Other security improvements in OS X 10.9 Mavericks include restricting Adobe Flash Player plugins to run in a locked-down sandbox within Apple’s Safari browser software. ®

Article source: http://go.theregister.com/feed/www.theregister.co.uk/2013/11/07/apple_mandates_icloud_contact_syncing/