Cyber criminals have no borders, so neither should we

It’s great to be a part of the US’s National Cyber Security Awareness Month (NCSAM) again this year.


Back in the good old days of 2006, the Australian government was becoming increasingly aware of the growing risk of cybercrime and, in conjunction with like-minded organisations such as Sophos, launched the Australian Cyber Security Awareness Week.

What began with only a handful of partners and schools had become a serious event by 2013, with over 1,400 partners, including around 700 schools.

Then, in 2011, the New Zealand government launched its Cyber Security Awareness Week to coincide with its Australian counterpart. Sophos was again a member of the steering committee.

One great component of the Australian week has been the distribution of the Budd:e Cyber Security education package, which helps students in years 3 and 9 adopt safe and secure online practices.

It is interesting to contrast the US ‘month’ with the ANZ ‘week’. A very serious but amusing colleague here at Sophos pointed out that “awareness just for the week or month is a bit like a ‘quit smoking afternoon’ being just a few hours when you don’t smoke, when it should be the point in time where you start never smoking again.”

Earlier this month I was fortunate to be able to present at the Singapore government’s conference – Govware – which has a strong affinity to Cyber Security Awareness Month. Governments all over the world are now engaged in similar events.

Given the global nature of the problem, with criminals possibly based in every country and utilising hijacked servers (zombies or botnets) based randomly around the world, it is clear why no single country can own this.

Bringing together all the fine work of governments will be increasingly important to us all. No one entity owns the problem but we all own elements of the solution.

I believe that a key set of players in this fight must be the global providers of security products as, by our nature, we are not constrained by borders in the way national governments are.

Every day, SophosLabs finds over 250,000 new examples of malware from all over the world and then provides protection against them to our global customers – a clear example of the reach that multinational companies have.

So it seems to me that cooperation between public and private resources is a sound way to ensure we’re not outflanked by the bad guys.

Cross-country prosecution is often difficult due to a general fragmentation of effort, the challenge of borders and uncertain legislation. Allowing this to continue will increase the power of the criminals and make the future problem even greater.

To again draw on the smoking analogy – every day you continue smoking makes giving up harder.

No one country alone can win this battle, nor can one security provider. We all need to play our part. Wherever we are, let’s get behind our governments’ efforts.

Image of passport stamps courtesy of Shutterstock.

Article source: http://feedproxy.google.com/~r/nakedsecurity/~3/mg0BJ9i3h7A/

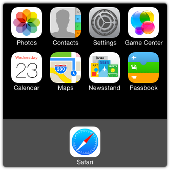
