Four Dangers To Mobile Devices More Threatening Than Malware

From Trojan horses to viruses, botnets to ransomware, malicious software garners a great deal of attention from security vendors and the media.

Yet, mobile users–especially those in North America–should worry more about other threats. While smartphones and tablets could be platforms for a whole new generation of malicious functionality, the ecosystems surrounding the most popular devices work well to limit their exposure to malware. The number of malware variants targeting the Android platform is certainly expanding–surpassing 275,000 as of the first quarter of 2013, according to security firm Juniper Networks–but few of the malicious programs have snuck into the mainstream application marketplaces.

Instead, the top threats to organizations grab fewer headlines. While security experts continue to rank malware as a significant threat, lost and stolen devices, insecure communications, and insecure application development affect many more users. Juniper, for example, puts insecure communications at the top of its list, says Troy Vennon, director of the Mobile Threat Center at Juniper Networks.

“We see a lot of organizations that have gone to the BYOD model, and they are encouraging their users to connect back into the enterprise for access to data and resources,” he says. “They are trying to figure out how they are going to secure that communication and secure that transfer of data.”

Enterprises also have to be aware of what their users are installing on their phones and how they may be using the devices for handling sensitive corporate data, says Con Mallon, a senior director of Symantec’s mobility business.

“You can only secure what you know about, so knowing what you have walking around your enterprise is important,” he says, adding that defenses should extend to applications and how those applications handle data. “I should not be able to take the company data and put it in my own personal Dropbox folder.”

Based on data and interviews with experts, here are the top four threats.

1. Lost and stolen phones

In March 2012, mobile-device management firm Lookout analyzed its data for U.S. consumers who activated the company’s phone-finding service, estimating that the nation’s mobile users lose a phone once every 3.5 seconds. In another study released around the same time, Symantec researchers left 50 phones behind in different cities and found that on 83 percent of the devices, the person who picked up the phone accessed corporate applications.
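To put Lookout’s rate in perspective, the short calculation below converts one loss every 3.5 seconds into daily and yearly totals. It is a rough sketch that assumes the rate holds constant around the clock and uses a 365-day year; both are simplifying assumptions, not figures from Lookout.

```python
# Back-of-the-envelope scale of Lookout's estimate: one lost phone
# every 3.5 seconds across U.S. mobile users (constant rate assumed).
SECONDS_PER_LOSS = 3.5
SECONDS_PER_DAY = 24 * 60 * 60            # 86,400
SECONDS_PER_YEAR = 365 * SECONDS_PER_DAY  # 31,536,000 (non-leap year)


def losses_per_period(period_seconds: float,
                      seconds_per_loss: float = SECONDS_PER_LOSS) -> float:
    """Number of phones lost in a period, assuming a constant loss rate."""
    return period_seconds / seconds_per_loss


per_day = losses_per_period(SECONDS_PER_DAY)
per_year = losses_per_period(SECONDS_PER_YEAR)
print(f"{per_day:,.0f} phones per day")    # 24,686 phones per day
print(f"{per_year:,.0f} phones per year")  # 9,010,286 phones per year
```

At that rate, roughly 24,700 phones would go missing each day, or about 9 million a year.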

“Mobile phones and tablets are being lost or stolen on an increasing basis,” says Giri Sreenivas, vice president and general manager for mobile at vulnerability management firm Rapid7. “The challenge is that there are relatively easy techniques for evading some of the on-device security controls, such as bypassing a lock screen password.”

While Apple’s Touch ID, announced this week, may help consumers and employees better secure their devices against theft, the majority of users still do not use even a passcode to lock their devices against misuse. Companies should train users to lock their smartphones and tablets and should use a mobile-device management (MDM) system to erase a device if necessary, says Juniper’s Vennon. In the company’s latest mobile-security report, Juniper found that 13 percent of users used its MDM solution to locate a phone and 9 percent locked a device. Only 1.5 percent of users, or about one in every eight who lost a device, wiped the smartphone, indicating that the device was likely not found, says Vennon.

“Every company should be able to locate, lock and wipe,” he says. “It’s hugely necessary.”
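Vennon’s locate, lock and wipe sequence can be expressed as a simple escalation rule. The sketch below is hypothetical: the function name `next_actions` and the 24-hour wipe threshold are illustrative assumptions, not features of Juniper’s or any other vendor’s MDM product. It locates and locks a missing device right away and adds a remote wipe only once the device has been missing long enough that recovery looks unlikely.

```python
from enum import Enum
from typing import List


class Action(Enum):
    LOCATE = "locate"
    LOCK = "lock"
    WIPE = "wipe"


def next_actions(hours_missing: float, recovered: bool,
                 wipe_after_hours: float = 24.0) -> List[Action]:
    """Hypothetical escalation policy for a device reported lost.

    Locate and lock immediately; add a wipe once the device has been
    missing for at least `wipe_after_hours` (an assumed threshold).
    """
    if recovered:
        return []  # device is back with its owner; nothing to do
    actions = [Action.LOCATE, Action.LOCK]
    if hours_missing >= wipe_after_hours:
        actions.append(Action.WIPE)  # irreversible, so it comes last
    return actions


print([a.value for a in next_actions(2, recovered=False)])   # ['locate', 'lock']
print([a.value for a in next_actions(30, recovered=False)])  # ['locate', 'lock', 'wipe']
```

Keeping the irreversible wipe as the final step mirrors the pattern in Juniper’s numbers, where far fewer users wiped a device than located or locked one.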

2. Insecure communications

While there is a lot less data on how often mobile users connect to open networks, companies consider insecure connections to wireless networks a top threat, says Rapid7’s Sreenivas. The problem is that wireless devices are often set to automatically connect to any open network whose name matches one they have previously joined.

“A lot of people will look for a Wi-Fi hotspot and they won’t look to see if it is secure or insecure,” he says. “And once they are on an open network, it is quite easy to execute a man-in-the-middle attack.”

The solution is to force the user to route traffic through a mobile virtual private network before connecting to any network, he says.
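The auto-join weakness Sreenivas describes can be illustrated with a deliberately simplified model. The sketch below assumes a device remembers networks only by name (SSID) and security type; under that assumption, an attacker’s hotspot that copies the name of a remembered open network is indistinguishable from the real one, because an open network presents no credential the device could check. The `Network` class and `should_auto_join` function are hypothetical, and real operating systems use more involved logic. A VPN helps because it protects traffic whichever hotspot the device ends up joining.

```python
from dataclasses import dataclass
from typing import Set


@dataclass(frozen=True)
class Network:
    """Simplified view of a Wi-Fi network as a device might remember it."""
    ssid: str      # the broadcast network name
    security: str  # e.g. "open" or "wpa2-psk" (simplified labels)


def should_auto_join(seen: Network, remembered: Set[Network]) -> bool:
    """Join automatically if the visible network matches a remembered one.

    For an open network there is no password or certificate to verify,
    so matching on name and security type is all the device can do.
    """
    return seen in remembered


remembered = {Network("CoffeeShopWiFi", "open")}

# An attacker broadcasts a hotspot with the same name and no encryption.
evil_twin = Network("CoffeeShopWiFi", "open")
print(should_auto_join(evil_twin, remembered))  # True: the impostor is joined
```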

3. Leaving the walled garden

Users who jailbreak their smartphones or use a third-party app store that does not have a strong policy of checking applications for malicious behavior put themselves at greater risk of compromise. For example, while only about 3 percent of users in North America have some sort of suspicious or malicious software on their smartphones, the incidence of such badware is much higher in China, with more than 170 app stores, and Russia, with more than 130 stores, according to Juniper’s Third Annual Mobile Threats Report.

A well-secured app store, which vets each submitted application, is part of the overall ecosystem that secures a mobile device. Any user who buys from a marketplace with little security puts their phone at risk, Juniper’s Vennon says.

“There is no question that if you, as a user, are making the decision to download an app from an unknown source in a third-party app store, you are opening yourself up for the potential of malware,” he says.
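For organizations, one way to act on Vennon’s advice is a policy that permits only apps installed from vetted marketplaces. The sketch below is hypothetical: the store labels in `VETTED_STORES` and the `install_allowed` function are illustrative placeholders, not identifiers from any real MDM product or mobile operating system. An app with no recorded installer, as with a sideloaded package, is treated as untrusted.

```python
from typing import FrozenSet, Optional

# Hypothetical labels for app marketplaces the organization has vetted.
VETTED_STORES: FrozenSet[str] = frozenset({"official-android-store",
                                           "official-ios-store"})


def install_allowed(installer: Optional[str],
                    vetted: FrozenSet[str] = VETTED_STORES) -> bool:
    """Allow an app only if it came from a vetted marketplace.

    `installer` is None when the source is unknown (e.g. a sideloaded
    package), which this policy treats as untrusted.
    """
    return installer is not None and installer in vetted


print(install_allowed("official-android-store"))  # True
print(install_allowed("third-party-store"))       # False
print(install_allowed(None))                       # False
```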

4. Vulnerable development frameworks

Even legitimate applications can be a threat to the user, if the developer does not take security into account when developing the application. Vulnerabilities in popular applications and flaws in frequently used programming frameworks can leave a device open to attack, says Rapid7’s Sreenivas.

The WebKit HTML rendering library, for example, is a key component of the browser on most smartphones. However, security researchers frequently find vulnerabilities in the software, he says. Companies should make sure that employees’ devices are kept up to date, which is currently the best defense against such vulnerabilities.

“Understanding the corresponding vulnerability risk and making sure that the devices are patched,” says Sreenivas. “It is very interesting that proximity attacks, and techniques for jailbreaks, and other attacks can all be mitigated by bringing the mobile platform for your device up to date.”
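One simple form of that patch check compares each device’s installed OS version against a minimum the organization considers safe. The minimal sketch below assumes versions are dotted strings of integers such as "4.3.1"; the function names, device names and version numbers are hypothetical examples, not published patch levels. Comparing tuples of integers, rather than raw strings, keeps a version such as "4.10" correctly ahead of "4.3", which a plain string comparison would get wrong.

```python
from typing import Tuple


def parse_version(version: str) -> Tuple[int, ...]:
    """Turn a dotted version string like "4.3.1" into (4, 3, 1)."""
    return tuple(int(part) for part in version.split("."))


def is_patched(device_version: str, minimum_version: str) -> bool:
    """True if the device runs at least the minimum required version."""
    return parse_version(device_version) >= parse_version(minimum_version)


# Hypothetical fleet and minimum version, for illustration only.
MINIMUM = "4.3"
fleet = {"alice-phone": "4.3.1", "bob-tablet": "4.2.2", "carol-phone": "4.10"}

out_of_date = sorted(name for name, ver in fleet.items()
                     if not is_patched(ver, MINIMUM))
print(out_of_date)  # ['bob-tablet']
```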

Malicious and suspicious software

Malware, adware and other questionable software are a threat, but mainly in China, Russia and other countries. Yet, while North American users have less to fear from malware, suspicious software, including privacy-invasive apps, is quite rampant. Juniper, for example, has blocked infections of malicious and unwanted software on 3.1 percent of its customers’ devices.

Moreover, security researchers continue to analyze mobile devices for vulnerabilities, and cybercriminals are getting better at monetizing mobile-device compromises, two prerequisites for malware to take off on mobile devices, says Symantec’s Mallon.

“We can see malware and monetization happening, toolkits are out there; all of these things parallel the development of malware in the Windows world,” he says.

Article source: http://www.darkreading.com/mobile/four-dangers-to-mobile-devices-more-thre/240161141

Linus Torvalds is a very clever man – he invented Linux, after all – but he seems to struggle with simple human decency.

Linus Torvalds is a very clever man – he invented Linux, after all – but he seems to struggle with simple human decency.

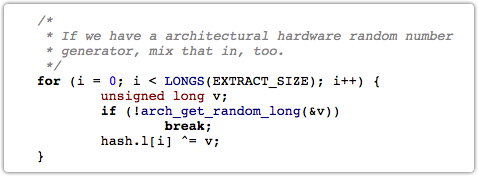

After all, modern Intel CPUs have an instruction called RDRAND which is supposed to use thermal noise, generally considered an unpredictable byproduct of the fabric of physics itself, to generate high quality random numbers very swiftly.

After all, modern Intel CPUs have an instruction called RDRAND which is supposed to use thermal noise, generally considered an unpredictable byproduct of the fabric of physics itself, to generate high quality random numbers very swiftly.

When your children go online, who should be their nanny?

When your children go online, who should be their nanny?  In addition, he announced changes to the law that would make extreme pornography harder to obtain, such as making it illegal to depict rape in porn.

In addition, he announced changes to the law that would make extreme pornography harder to obtain, such as making it illegal to depict rape in porn.  Privacy when using potentially data-leaking mobile phone apps is concern Numero Uno for 22% of smartphone users, according to a new study.

Privacy when using potentially data-leaking mobile phone apps is concern Numero Uno for 22% of smartphone users, according to a new study. An example: at least as recently as the Path and Hipster revelations, Apple’s iOS permission system wasn’t providing notification of what information an app might have been sending to its keepers, aside from location information.

An example: at least as recently as the Path and Hipster revelations, Apple’s iOS permission system wasn’t providing notification of what information an app might have been sending to its keepers, aside from location information. The first thing you’ll notice about the September 2013 Patch Tuesday is that there are only 13 patches to apply, even though there were 14 bulletins in

The first thing you’ll notice about the September 2013 Patch Tuesday is that there are only 13 patches to apply, even though there were 14 bulletins in  A study has found that men are almost twice as likely to snoop on their partner’s mobile phone, peeking without permission to read “incriminating messages or activity” that might point to infidelity.

A study has found that men are almost twice as likely to snoop on their partner’s mobile phone, peeking without permission to read “incriminating messages or activity” that might point to infidelity.