Encryption remains a key security tool despite newly leaked documents revealing the National Security Agency’s efforts to bend crypto and software to its will in order to ease its intelligence gathering, experts say. But these latest NSA revelations serve as a chilling wake-up call for enterprises to rethink how they lock down their data.

“The bottom line is what Bruce Schneier said: for all of these [NSA] revelations, users are better off using encryption than not using encryption,” says Robin Wilton, technical outreach director of the Internet Society. “But if you’re a bank [or other financial institution] and you rely on the integrity of your transactions, what are you supposed to be doing now? Are you compromised?”

The New York Times, The Guardian, and ProPublica late last week reported on another wave of leaked NSA documents provided by former NSA contractor Edward Snowden, revealing that the agency has been aggressively cracking encryption algorithms and even urging software companies to leave backdoors and vulnerabilities in place in their products for the NSA’s use. The potential exposure of encrypted email, online chats, phone calls, and other transmissions has left many organizations reeling over what to do now to keep their data private.

[Concerns over backdoors and crypto cracked by the spy agency are prompting calls for new, more secure Internet protocols, and the IETF will address these latest developments at its November meeting. See Latest NSA Crypto Revelations Could Spur Internet Makeover.]

[UPDATE 9/11/13 7:30am: The New York Times reported last night that the Snowden documents “suggest” that the NSA “generated one of the random number generators used in a 2006 N.I.S.T. standard — called the Dual EC DRBG standard — which contains a back door for the N.S.A.,” The Times article said.]

Still a mystery is which, if any, encryption specifications were actually weakened under pressure from the NSA, and which vendor products may have been backdoored. The National Institute of Standards and Technology (NIST), which heads up crypto standards efforts, today issued a statement in response to questions raised about its encryption standards process in the wake of the latest NSA program revelations: “NIST would not deliberately weaken a cryptographic standard. We will continue in our mission to work with the cryptographic community to create the strongest possible encryption standards for the U.S. government and industry at large,” NIST said.

NIST reiterated its mission to develop standards and noted that it works with crypto experts from around the world, including experts from the NSA. “The National Security Agency (NSA) participates in the NIST cryptography development process because of its recognized expertise. NIST is also required by statute to consult with the NSA,” NIST said in its statement.

The agency also announced today that it has reopened public comment on Special Publication 800-90A and draft Special Publications 800-90B and 800-90C, which cover random-bit generation methods. Some experts have viewed these specifications with suspicion because the NSA was involved in their development, and NIST says that if any vulnerabilities are found in the specs, it will fix them.

The chilling prospect of the NSA building or demanding backdoors in encryption methods, software products, or Internet services is magnified by concerns that doing so would also give nation-states and cybercriminals pre-drilled holes to infiltrate.

“There’s a strong technological argument that putting backdoors in encryption is just a foolish thing to do. Because if you do that, it’s just open to abuse” by multiple actors, says Stephen Cobb, security evangelist for ESET. “This makes it very complicated for businesses. I would not want to be a CSO or CIO at a financial institution right now.”

So how can businesses ward off the NSA or China and other nation-states or Eastern European cybercriminals if crypto and backdoors are on the table?

Use encryption

Encryption is still very much a viable option, especially if it’s strong encryption, such as the 128-bit Advanced Encryption Standard (AES). “Don’t stop using encryption, review the encryption you’re using, and potentially change the way you’re doing it. If you’ve got a Windows laptop with protected health information, at least be using BitLocker,” for example, says ESET’s Cobb.

David Frymier, CISO and vice president at Unisys, says even the NSA would be hard-pressed to break strong encryption, so using strong encryption is the best bet. Even Snowden said that, Frymier says.

Still unclear is whether the actual algorithms the NSA has cracked will be revealed publicly.

“Most algorithms are actually safe,” says Tatu Ylonen, creator of the SSH protocol and CEO and founder of SSH Communications Security.

Beef up your encryption key management

Unisys’ Frymier is skeptical of the claims that the NSA worked to weaken any encryption specifications. “I just don’t find that [argument] compelling. All of these algorithms are basically published in the public domain and they are reviewed by” various parties, he says.

Even so, the most important factor is how the keys are managed: how companies deploy the technology, store their keys, and allow access to them, experts say. The security of the servers running and storing that code is also crucial, especially since the NSA is reportedly taking advantage of vulnerabilities much in the way hackers do, experts note.

Dave Anderson, a senior director with Voltage Security, says it’s possible for the NSA to decrypt a financial transaction, but probably only if the crypto wasn’t implemented correctly or the keys weren’t properly managed. “A more likely way that the NSA is reading Internet communications is through exploiting a weakness in key management. That could be a weakness in the way that keys are generated, or it could be a weakness in the way that keys are stored,” Anderson says. “And because many of the steps in the lifecycle of a key often involve a human user, this introduces the potential for human error, making key lifecycle management never as secure as the protection provided by the encryption itself.”
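To make the two weak points Anderson names (key generation and key storage) concrete, here is a minimal Python sketch. It is an illustration only, not anything the experts quoted here prescribe: the function names `generate_key`, `store_key`, and `is_owner_only` are hypothetical, the 16-byte length simply mirrors the 128-bit AES key size mentioned earlier, and the file-permission handling assumes a POSIX system. A production deployment would more likely rely on a hardware security module or a dedicated key management service than on a file on disk.

```python
import os
import secrets
import stat

KEY_BYTES = 16  # 128 bits; assumption chosen to match the AES key size cited above


def generate_key(num_bytes: int = KEY_BYTES) -> bytes:
    """Return key material drawn from the operating system's CSPRNG."""
    return secrets.token_bytes(num_bytes)


def store_key(key: bytes, path: str) -> None:
    """Write the key to a new file that only the owner can read or write."""
    # O_EXCL refuses to overwrite an existing file; 0o600 is owner read/write only.
    fd = os.open(path, os.O_WRONLY | os.O_CREAT | os.O_EXCL, 0o600)
    with os.fdopen(fd, "wb") as handle:
        handle.write(key)


def is_owner_only(path: str) -> bool:
    """True if neither group nor other has any permission bit set (POSIX)."""
    mode = os.stat(path).st_mode
    return (mode & (stat.S_IRWXG | stat.S_IRWXO)) == 0
```

The point of the sketch is narrow: key material should come from a cryptographically secure source rather than a general-purpose random number generator, and the stored key should be unreadable to other accounts from the moment the file is created.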

Keep your servers up-to-date with patches, too, because weaknesses in the operating system or other software running on the servers that support the crypto software are other possible entryways for intruders or spies.

One of the most common mistakes: not restricting or knowing who has access to the server storing crypto keys, when, and from where, according to SSH’s Ylonen. “And that person’s access must be properly terminated when it’s no longer needed,” he says. “I don’t think this problem is encryption: it is overall security.”

Ylonen says it’s also a wake-up call to take better care of endpoints and manage them more closely.

Not having proper key management is dangerous, he says. One of SSH Communications’ bank customers had more than 1.5 million keys for accessing its production servers, but the bank didn’t know who had control over the keys, he says.
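A first step toward answering the question that bank could not (who controls which key) is a simple inventory. The Python sketch below is a rough illustration under stated assumptions, not SSH Communications Security’s method: it assumes access keys are listed in OpenSSH `authorized_keys`-style lines with no leading options field, it treats the trailing free-text comment as an owner label (which OpenSSH does not verify), and the function names `fingerprint`, `inventory`, and `unowned` are hypothetical.

```python
import base64
import hashlib
from collections import defaultdict


def fingerprint(b64_blob: str) -> str:
    """SHA-256 fingerprint in the style OpenSSH prints (base64, padding stripped)."""
    digest = hashlib.sha256(base64.b64decode(b64_blob)).digest()
    return "SHA256:" + base64.b64encode(digest).decode("ascii").rstrip("=")


def inventory(entries):
    """Group (host, authorized_keys line) pairs by key fingerprint.

    Returns {fingerprint: {"hosts": set of hosts, "owners": set of labels}}.
    """
    keys = defaultdict(lambda: {"hosts": set(), "owners": set()})
    for host, line in entries:
        parts = line.split(None, 2)
        if len(parts) < 2 or line.lstrip().startswith("#"):
            continue  # skip blank lines, comments, and malformed entries
        record = keys[fingerprint(parts[1])]
        record["hosts"].add(host)
        if len(parts) == 3:
            record["owners"].add(parts[2].strip())
    return dict(keys)


def unowned(inv):
    """Fingerprints that grant access somewhere but carry no owner label."""
    return sorted(fp for fp, rec in inv.items() if not rec["owners"])
```

Feeding it the key lines collected from each server gives a per-key list of the hosts it unlocks, and `unowned` flags keys that nobody has claimed, which are the natural candidates for review or removal.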

“There are two kinds of keys–keys for encryption and keys for gaining access that can give you further access to encryption keys,” he says. And access-granting keys are often the worst-managed, he says. “Some of the leading organizations don’t know who has access to the keys to these systems,” he says.

“If you get the encryption keys, you can read [encrypted data]. If you get the access keys, you can read the data, and you can modify the system … or destroy the data,” he says.

Conduct a risk analysis on what information the NSA, the Chinese, or others would be interested in

Once you’ve figured out what data would be juicy for targeting, double down to protect it.

“Whatever that is, protect it using modern, strong encryption, where you control the endpoints and you control the keys. If you do that, you can be reasonably assured your information will be safe,” Unisys’ Frymier says.

In the end, crypto-cracking and pilfered keys are merely weapons in cyberspying and cyberwarfare, experts say.

“The NSA wants access to data … they want access to passwords and credentials to access the system so it can be used for offensive purposes if the need arises, or for data collection,” Ylonen says. “They want access to modern software and applications so they are later guaranteed access to other systems.”


Article source: http://www.darkreading.com/authentication/keep-calm-keep-encrypting-with-a-few/240161105

A study has found that men are almost twice as likely to snoop on their partner’s mobile phone, peeking without permission to read “incriminating messages or activity” that might point to infidelity.

A study has found that men are almost twice as likely to snoop on their partner’s mobile phone, peeking without permission to read “incriminating messages or activity” that might point to infidelity.

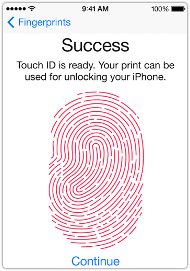

As well as unlocking the phone, the sensor will be able to approve purchases at the Apple store.

As well as unlocking the phone, the sensor will be able to approve purchases at the Apple store. A cross-vertical group, the

A cross-vertical group, the