A security audit conducted by Tencent’s Keen Security Lab on BMW cars has given the luxury automaker a handy crop of bugs to fix – including a backdoor in infotainment units fitted since 2012.

Now that the patches are gradually being distributed to owners, the Chinese infosec team has gone public with its security audit, revealing some of the details in a report [PDF].

The researchers noted that their probing found flaws that could be exploited by an attacker to inject messages into a target vehicle's CAN bus (the spinal cord, if you will, of the machine) and its electronic control units while the car was being driven. That would potentially allow miscreants to take over or interfere with the operation of the vehicle to at least some degree.
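
For readers who haven't met a CAN bus up close, here is a minimal sketch of what a single classic CAN frame looks like, packed in the 16-byte layout that Linux's SocketCAN uses for `struct can_frame`: an 11-bit identifier, a data length code, and up to eight data bytes. It only builds and parses bytes in memory and never touches a real bus; the ID `0x123` and the payload are arbitrary illustrative values, not BMW messages, and `build_can_frame`/`parse_can_frame` are hypothetical helpers. Note that nothing in a classic CAN frame identifies or authenticates its sender, which is why a foothold on the bus is so valuable.

```python
import struct

# Layout of Linux SocketCAN's struct can_frame:
#   u32 can_id, u8 can_dlc, 3 bytes padding/reserved, u8 data[8]
CAN_FRAME_FMT = "=IB3x8s"
CAN_SFF_MASK = 0x7FF  # classic CAN standard frames use an 11-bit identifier


def build_can_frame(can_id: int, data: bytes) -> bytes:
    """Pack a classic (non-FD) standard CAN frame into SocketCAN's layout."""
    if not 0 <= can_id <= CAN_SFF_MASK:
        raise ValueError("standard CAN identifiers are 11 bits")
    if len(data) > 8:
        raise ValueError("classic CAN carries at most 8 data bytes")
    # The "8s" field zero-pads the payload up to 8 bytes
    return struct.pack(CAN_FRAME_FMT, can_id, len(data), data)


def parse_can_frame(frame: bytes) -> tuple[int, bytes]:
    """Unpack a frame, returning (identifier, payload trimmed to its DLC)."""
    can_id, dlc, data = struct.unpack(CAN_FRAME_FMT, frame)
    return can_id, data[:dlc]


# Arbitrary example values: the frame says what it is, not who sent it
frame = build_can_frame(0x123, bytes([0xDE, 0xAD, 0xBE, 0xEF]))
print(len(frame), parse_can_frame(frame))
```

Any node wired to the bus can emit a frame like this one, and receivers act on the identifier alone.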

There are 14 bugs in total. Seven have been assigned standard CVE ID numbers: CVE-2018-9322, CVE-2018-9320, CVE-2018-9312, CVE-2018-9313, CVE-2018-9314, CVE-2018-9311, and CVE-2018-9318. Other flaws are awaiting CVE assignments.

Four require physical USB access – you need to plug a booby-trapped gadget into a USB port – or access to the OBD diagnostics port. That means an attacker has to be inside your vehicle to exploit them. Six can be exploited remotely, from outside the car, with at least one via Bluetooth, which is super bad. Another four require physical or "indirect" physical access to the machine.

Of those 14, eight affect the internet-connected infotainment unit that plays music, media, and other stuff to the driver and passengers. Four affect the telematics control system. Two affect the wireless communications hardware.

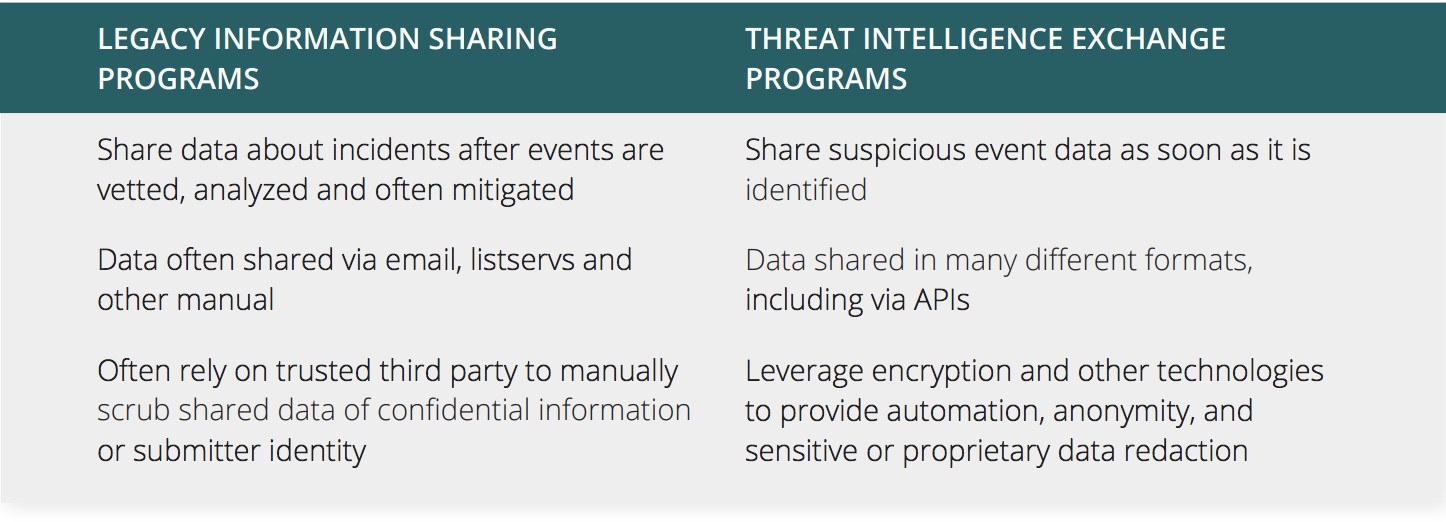

Forgive us if the details seem vague: Tencent is withholding all the nitty-gritty until people have had a chance to update their wagons. Below is a summary of the bugs that were uncovered and reported:

Tencent’s table of vulnerabilities found in BMW vehicles … HU_NBT is the internet-connected infotainment system, TCB is the telematics control, BDC is a wireless communications module

The Bimmer's infotainment head unit runs the QNX operating system on an Intel-based x86 board that handles multimedia and the BMW ConnectedDrive services, alongside an Arm-compatible board that oversees things like power management and CAN bus communication.

One of the vulnerabilities at this stage, the step from the CAN bus onto BMW's K-CAN bus, was discovered because BMW's developers had reused some Texas Instruments code "to operate the special memory of Jacinto chip to send the CAN messages." The telematics unit uses a Qualcomm MDM6200 for USB comms and a Freescale 9S12X for K-CAN communications. Its software is based on Qualcomm's REX OS realtime operating system.

The telematics control unit handles contact with the BMW service network, which meant the researchers could trigger BMW Remote Services with arbitrary messages through a simulated GSM network. Meanwhile, the worst telematics unit bug is described thus:

After some tough reverse-engineering work on TCB’s firmware, we also found a memory corruption vulnerability that allows us to bypass the signature protection and achieve remote code execution in the firmware.

The researchers also said they found ways to "influence the vehicle via different kinds of attack chains," which ultimately let them send "arbitrary diagnostic messages to the ECUs [Electronic Control Units]." They also wrote that functions such as diagnostics lack proper defenses; their words are reproduced unedited:

A secure diagnostic function should be designed properly to avoid the incorrect usage at an abnormal situation. However, we found that most of the ECUs still respond to the diagnostic messages even at normal driving speed (confirmed on BMW i3), which could cause serious security issues already. It will become much worse if attackers invoke some special UDS routines (e.g. reset ECU, etc..).
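
The researchers' complaint is that ECUs kept answering diagnostic requests at driving speed. As a rough illustration of the kind of check they argue for, here is a hypothetical ECU-side handler for the standard UDS (ISO 14229) ECUReset service, `0x11`, that refuses a hard reset while the vehicle is moving. The `handle_ecu_reset` function, the zero-speed threshold, and the choice of the generic conditionsNotCorrect (`0x22`) negative response code are all illustrative assumptions, not BMW's code or any real ECU's firmware.

```python
ECU_RESET_SID = 0x11                  # UDS ECUReset service identifier
HARD_RESET = 0x01                     # ECUReset sub-function: hardReset
NEGATIVE_RESPONSE_SID = 0x7F          # first byte of every UDS negative response
POSITIVE_RESPONSE_OFFSET = 0x40       # positive response SID = request SID + 0x40
NRC_SUBFUNCTION_NOT_SUPPORTED = 0x12
NRC_INCORRECT_LENGTH = 0x13           # incorrectMessageLengthOrInvalidFormat
NRC_CONDITIONS_NOT_CORRECT = 0x22

# Hypothetical policy: only allow resets when the car is stationary
MAX_SPEED_KMH_FOR_RESET = 0.0


def negative_response(nrc: int) -> bytes:
    """Build a UDS negative response: 0x7F, rejected service ID, reason code."""
    return bytes([NEGATIVE_RESPONSE_SID, ECU_RESET_SID, nrc])


def handle_ecu_reset(request: bytes, vehicle_speed_kmh: float) -> bytes:
    """Return the response a hypothetical ECU sends for an ECUReset request.

    Assumes the caller has already dispatched on request[0] == 0x11.
    This sketch ignores the suppress-positive-response bit, so only the
    plain hardReset sub-function (0x01) is accepted.
    """
    if len(request) != 2:
        return negative_response(NRC_INCORRECT_LENGTH)
    sub_function = request[1]
    if sub_function != HARD_RESET:
        return negative_response(NRC_SUBFUNCTION_NOT_SUPPORTED)
    if vehicle_speed_kmh > MAX_SPEED_KMH_FOR_RESET:
        # The speed gate the researchers say was missing on most ECUs
        return negative_response(NRC_CONDITIONS_NOT_CORRECT)
    # Positive response echoes the sub-function; the reset would follow
    return bytes([ECU_RESET_SID + POSITIVE_RESPONSE_OFFSET, sub_function])


request = bytes([ECU_RESET_SID, HARD_RESET])
print(handle_ecu_reset(request, vehicle_speed_kmh=0.0).hex())   # 5101
print(handle_ecu_reset(request, vehicle_speed_kmh=50.0).hex())  # 7f1122
```

A real ECU would read vehicle speed from a trusted source and apply similar gating to other risky routines, but the principle is the same: the request is well-formed either way, and it is the vehicle's state that should decide whether it is honoured.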

The researchers describe the flaw in the infotainment unit only as a "backdoor", and the document doesn't say what it consists of. (The most common vulnerabilities to carry that description are admin accounts with no password or a default password, or accounts configured with hardcoded credentials.) Whatever its nature, it means a USB connection can get past the infotainment unit onto the vehicle's K-CAN bus and, from there, be used to attack individual devices, such as the engine controller.

The infotainment unit is also at risk of over-the-air Bluetooth-borne attacks. Under controlled conditions, the Tencent researchers said they could also wirelessly hijack the car’s hardware via the cellular phone network, adding that “a malicious backdoor can inject controlled diagnosis messages to the CAN buses in the vehicle.”

The vulnerabilities were confirmed in the BMW i3, X1, 525Li and 730Li models the researchers tested, but bugs in the telematics control unit would affect “BMW models which equipped with this module produced from year 2012.”

BMW was able to send over-the-air updates to fix some bugs, we're told, but others will need patches through the dealer network, which explains why the researchers are withholding their full technical report until March 2019.

We’ve asked BMW for further comment. ®

Article source: http://go.theregister.com/feed/www.theregister.co.uk/2018/05/23/bmw_security_bugs/