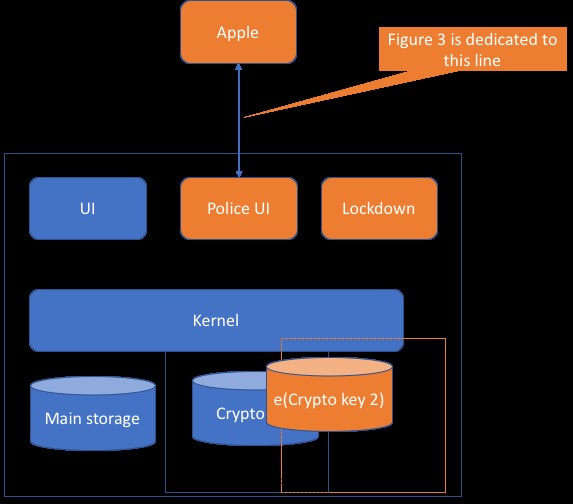

Recently, Ray Ozzie proposed a system for a backdoor into phones that he claims is secure. He’s dangerously wrong.

According to Wired, the goal of Ozzie’s design, dubbed “Clear,” is to “give law enforcement access to encrypted data without significantly increasing security risks for the billions of people who use encrypted devices.” His proposal increases risk to computer users everywhere even if it is never implemented. If it were implemented, it would add substantially more risk.

It increases risk before implementation because only a limited number of people perform deep crypto or systems analysis, and several of us are writing about Ozzie’s bad idea rather than doing other, more useful work.

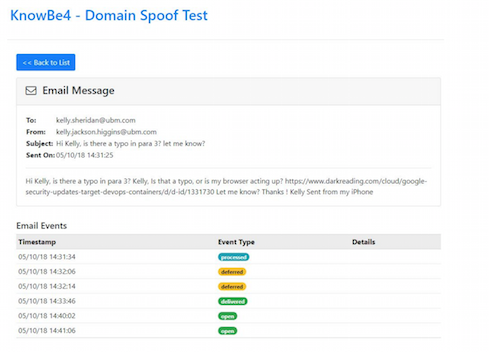

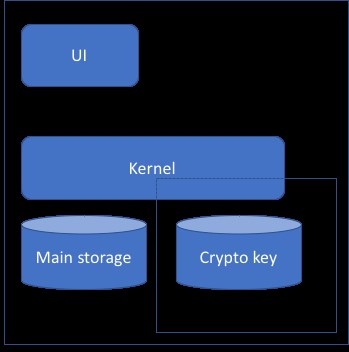

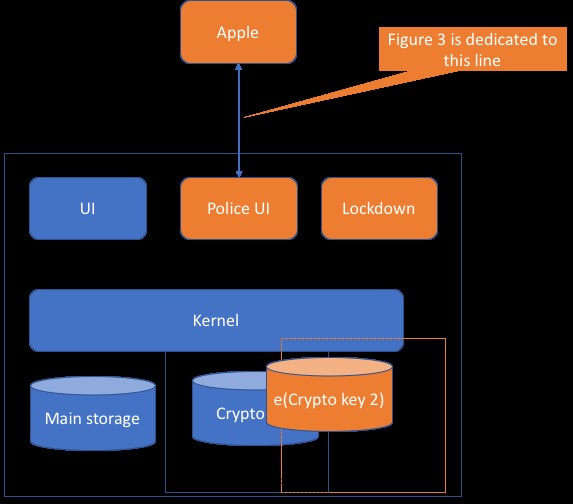

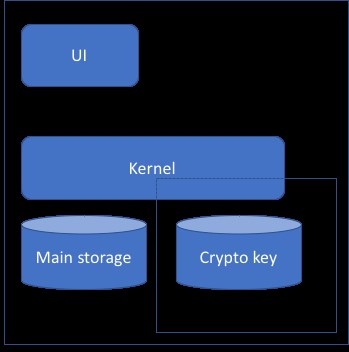

We’ll set aside, for a moment, the cybersecurity talent gap and look at the actual plan. The way a phone stores your keys today looks something like the very simplified Figure 1. There’s a secure enclave inside your phone. It talks to the kernel, providing it with key storage. Importantly, the dashed lines in this figure are trust boundaries, where the designers enforce security rules.

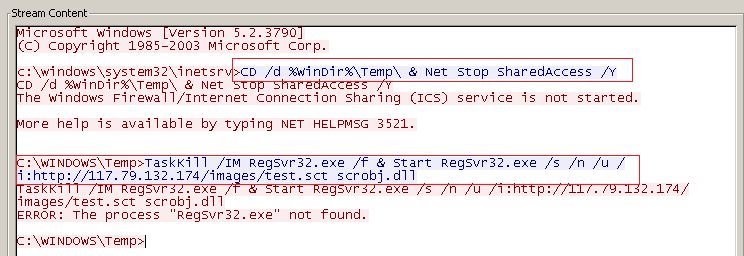

Under the Clear proposal, when you create a passphrase, an encrypted copy of it is stored in a new storage enclave. Let’s call that the police enclave. The code that accepts passphrase changes gets a new set of modules to store that passphrase in the police enclave. (Figure 2 shows the added components in orange.) That code is already complex because it manages Face ID, Touch ID, corporate rules about passphrases, and perhaps other things; now it has to make additional calls to a new module, and it has to do so securely. We’ll come back to how risky that is. For now, I want to point to a series of bugs, lock screen vulnerabilities, and other issues, not to criticize Apple, but to show that this code is hard to get right even before we multiply its complexity.

There’s also a new police user interface on the device, which allows you to enter a different passphrase of some form. The passcode validation functions also get more complex. There’s a new lockdown module, which, realistically, is going to be accessible from your lock screen. If it weren’t, someone could remotely wipe the phone before it is locked down. So entering a passcode that is shared with a few million police, secret police, border police, and bloggers around the globe will irrevocably brick your phone. I guess if that’s a concern, you can just cooperatively unlock it.

Lastly, there’s a new set of off-device communications that must be secured. These communications are complex: they involve new network protocols with new communications partners, are linked by design to some very sensitive keys, and are implemented in all-new code. The proposal also involves setting up relationships with, likely, all 193 member states of the United Nations, except perhaps the few where US companies can’t operate. There are no rules against someone bringing an iPhone into one of those countries, so phone manufacturers may need special dispensation to do business with each one.

Apple has been criticized for removing apps from the App Store in China. That may be a precedent suggesting that Apple’s “evaluate” routine will differ from country to country, making that code more complex.

The Trusted Computing Base

There’s an important concept, now nearly 40 years old, of a trusted computing base, or TCB. The idea is that all code has bugs, and bugs in security systems have security consequences. A single duplicated line of code (“goto fail;”) broke SSL/TLS signature verification in Apple’s iOS and OS X, and that was in highly reviewed code. Therefore, we should limit the size and complexity of the TCB so that we can analyze, test, and audit it. Back in the day, trusted kernels were on the order of thousands of lines of code, which was too large to audit effectively. I estimate that implementing this proposal would add at least that much code, and probably much more, to a phone’s TCB.

Worse, the addition of secure enclaves, secure boot, and related features makes it hard to test this code. The only people who’ll be able to do so are, first, Apple; second, well-resourced nations; and third, vendors that sell jailbreaks. So bugs are less likely to be found. Those that exist will live longer because none of the parties able to audit the code, other than Apple, will collaborate to get the bugs fixed. The code will be complex and require deep skill to analyze.

Bottom line: This proposal, like all backdoor proposals, involves a dramatically larger and more complex trusted computing base. Bugs in the TCB are security bugs, and it’s already hard enough to get the code right. This goes back to the unavoidable risk of proposals like these: threat modeling and security analysis are very, very hard, and only a small set of people can do them. On top of the existing workload of analyzing the Wi-Fi stack, the Bluetooth stack, the Web stack, the sandbox, and the App Store, each element we add spreads the analysis work over a larger attack surface. The effect is not linear. Each component under review involves ramp-up time, familiarization, and analysis, so the more components we add, the worse the security of each will be.

This analysis, fundamentally, is independent of the crypto. There is no magic crypto implementation that works without code. Securing code is hard. Ignoring that is dangerous. Can we please stop?

Adam is an entrepreneur, technologist, author and game designer. He’s a member of the BlackHat Review Board, and helped found the CVE and many other things. He’s currently building his fifth startup, focused on improving security effectiveness, and mentors startups as a …

Article source: https://www.darkreading.com/endpoint/risky-business-deconstructing-ray-ozzies-encryption-backdoor/a/d-id/1331743?_mc=rss_x_drr_edt_aud_dr_x_x-rss-simple