LoJack Attack Finds False C2 Servers

Researchers have identified a new attack that uses computer-recovery tool LoJack as a vehicle for breaching a company’s defenses and remaining persistent on the network. The good news is that it hasn’t started specific malicious activity — yet.

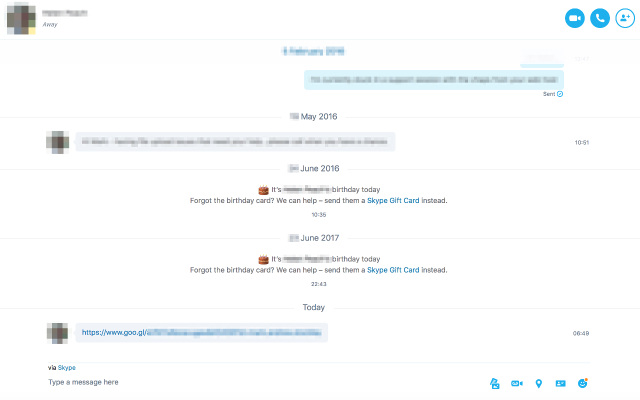

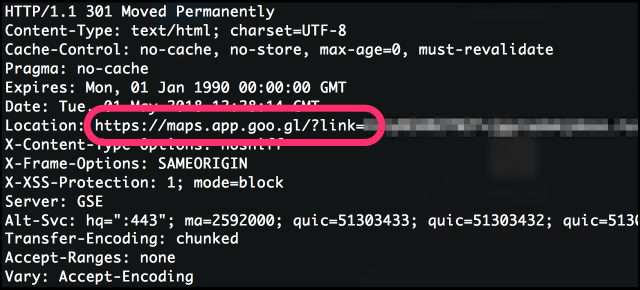

Arbor Networks’ ASERT, acting on a third-party tip, found a subtle hack involving legitimate LoJack endpoint agents. The threat actors didn’t change any of the legitimate actions of the software. All they did was strip out the legitimate C2 (command and control) server address from the system and replace it with a C2 server of their own.

Richard Hummel, manager of threat research at NETSCOUT Arbor’s ASERT, says that the subtlety of the action means that most existing security software and systems will not provide any indication that something is going wrong. “If they’re running as an admin, they might not see it,” Hummel says. “This doesn’t identify as malicious or malware, so they might just see a subtle warning.”

The addresses of the new C2 servers are pieces of the analysis that led researchers to believe that the campaign is attributable to Fancy Bear, a Russian hacking group responsible for a number of well-known exploits. On initial execution, the infected software contacts one of the C2 servers, which logs its success. Then, the new LoJack application proceeds to … wait. It simply does what LoJack does, with no additional communication or activity.

Hummel says that the lack of activity and lack of steps to prevent legitimate activity means that most security software won’t recognize that the app is anything but legitimate.

Once in place, the nature of the LoJack system’s activities means that it’s very persistent, remaining in place and active through reboots, on/off cycles, and other disruptive events.

“This is basically giving the attacker a foothold in an agency,” Hummel says. “There’s no LoJack execution of files, but they could launch additional software at a later date.” And the foothold that the software gains is a strong one.

“If they’re on a critical system or the user is someone with high privileges, then they have a direct line into the enterprise,” Hummel explains, adding, “with the permissions that LoJack requires, [the attackers] have permission to install whatever they want on the victims’ machines.”

There is, so far, nothing about the attack that contains a huge element of novelty. As for the code, Hummel says that this particular mechanism for attack has been around since 2014, when the software that is now LoJack was called Computrace. Even then, Hummel says, “researchers talked about how LoJack maintains its persistence.”

And while Hummel’s team has suspicions about infection mechanisms, they aren’t yet sure what’s happening. “We did some initial analysis on how the payload is being distributed and other Fancy Bear attacks, and we can’t verify the infection chain,” Hummel says, though he’s quick to add, “We don’t think that LoJack is distributing bad software.”

With stealth and persistence on its side, how does an enterprise prevent this new attack from placing bad software on corporate computers? Hummel says everything begins with proper computer hygiene. “Users will be prompted for permission warnings — don’t just blow by them,” he says.

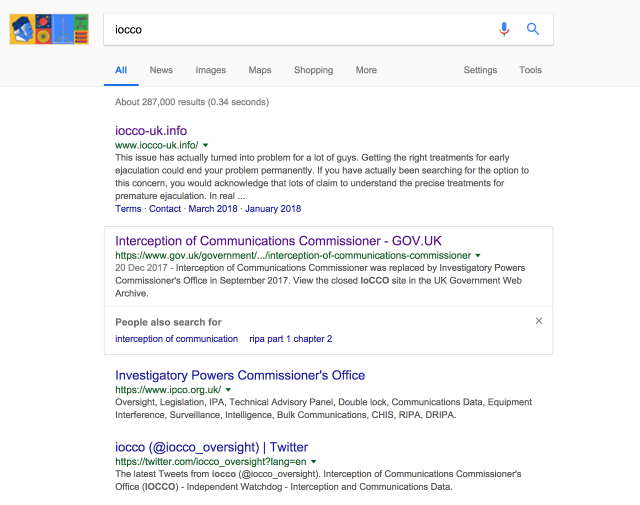

Next, the IT security team can scan for five domains currently used by the software (the count of five includes both forms of the last entry):

- elaxo[.]org

- ikmtrust[.]com

- lxwo[.]org

- sysanalyticweb[.]com (2 forms)

Each of these domains should be blocked by network security mechanisms.
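As a rough illustration of the scanning step, the sketch below checks hostnames (for example, pulled from DNS or proxy logs) against the domains listed above. It is a minimal example under stated assumptions, not ASERT's tooling: the function names `refang`, `is_blocked`, and `find_hits` are hypothetical, and because the article does not spell out the two forms of sysanalyticweb[.]com, the sketch simply matches each listed domain and any of its subdomains.

```python
# Defanged indicators exactly as listed in the article.
DEFANGED_IOCS = [
    "elaxo[.]org",
    "ikmtrust[.]com",
    "lxwo[.]org",
    "sysanalyticweb[.]com",
]


def refang(indicator: str) -> str:
    """Turn a defanged indicator such as 'elaxo[.]org' into 'elaxo.org'."""
    return indicator.replace("[.]", ".").strip().lower()


BLOCKED_DOMAINS = frozenset(refang(d) for d in DEFANGED_IOCS)


def is_blocked(hostname: str, blocked=BLOCKED_DOMAINS) -> bool:
    """Return True if hostname is a listed domain or a subdomain of one.

    Assumption: subdomain matching is our own choice here; the article
    only says the domains should be blocked.
    """
    host = hostname.strip().rstrip(".").lower()
    labels = host.split(".")
    # Compare the full host and each parent domain against the blocklist,
    # so 'www.elaxo.org' matches but 'notelaxo.org' does not.
    return any(".".join(labels[i:]) in blocked for i in range(len(labels)))


def find_hits(hostnames):
    """Return the hostnames from an iterable that match the blocklist."""
    return [h for h in hostnames if is_blocked(h)]
```

Calling `find_hits` on the hostnames extracted from a log returns only those that match, which can then be traced back to the endpoints that made the requests. Matching on whole labels rather than substrings avoids flagging unrelated domains that merely contain one of the listed names.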

It’s rare to have warning of an active infection method before it is used to launch damaging attacks. Organizations now have time to learn about the tactic and disinfect compromised endpoints before the worst occurs.

Curtis Franklin Jr. is Senior Editor at Dark Reading. In this role he focuses on product and technology coverage for the publication. In addition he works on audio and video programming for Dark Reading and contributes to activities at Interop ITX, Black Hat, INsecurity, and …

Article source: https://www.darkreading.com/attacks-breaches/lojack-attack-finds-false-c2-servers/d/d-id/1331691?_mc=rss_x_drr_edt_aud_dr_x_x-rss-simple