On Monday, during its earnings call, Google’s parent Alphabet held up a shiny YouTube bauble.

Look at the results of our wondrous machine learning, Google CEO Sundar Pichai said in prepared remarks, pointing to automagic flagging and removal of violent, hate-filled, extremist, fake-news and/or other violative YouTube videos.

At the same time, YouTube released details in its first-ever quarterly report on videos removed by both automatic flagging and human intervention.

There are big numbers in that report: between October and December 2017, YouTube removed a total of 8,284,039 videos. Of those, 6.7 million were first flagged for review by machines rather than humans, and 76% of those machine-flagged videos were removed before they received a single view.
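To put those figures in proportion, here is a rough back-of-the-envelope sketch. It uses only the numbers quoted above. The variable names are illustrative, and because "6.7 million" is a rounded figure, the derived values are approximations rather than numbers taken from YouTube's report.

```python
# Figures as quoted in the article for October-December 2017.
total_removed = 8_284_039       # all videos removed in the quarter
machine_flagged = 6_700_000     # "6.7 million": a rounded figure, so results are approximate
removed_unviewed_rate = 0.76    # share of machine-flagged videos removed before a single view

# Share of all removals that were first flagged by machines.
machine_share = machine_flagged / total_removed

# Machine-flagged videos taken down before anyone watched them.
removed_unviewed = machine_flagged * removed_unviewed_rate

# Removals that were first flagged by humans rather than machines.
other_flagged = total_removed - machine_flagged

print(f"Machine-flagged share of removals: {machine_share:.1%}")
print(f"Machine-flagged videos removed with zero views: ~{removed_unviewed / 1e6:.1f} million")
print(f"Removed videos not first flagged by machines: ~{other_flagged / 1e6:.1f} million")
```

In other words, roughly four in five removals started with a machine flag, about 5.1 million videos came down before anyone saw them, and around 1.6 million removals began with a human flag.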

That’s a lot of hate stopped. However, it is unlikely to impress the parents of children gunned down in school shootings, who for years have endured the YouTube excoriations of Alex Jones.

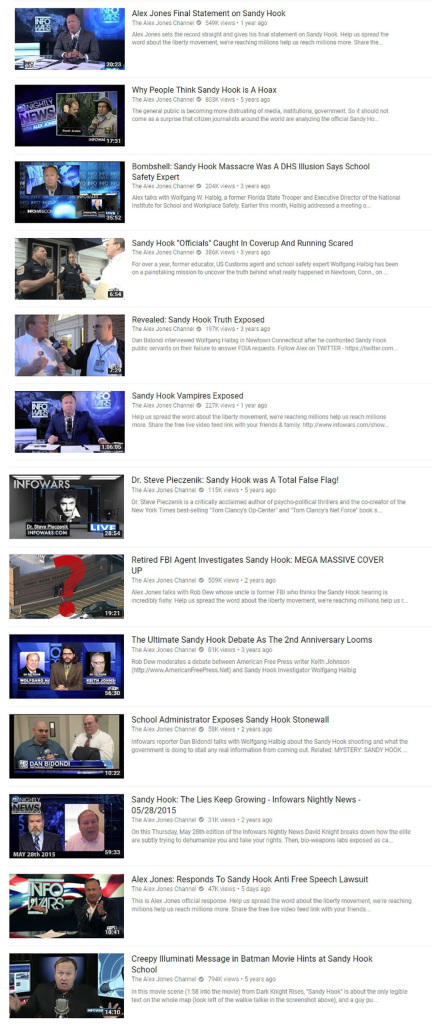

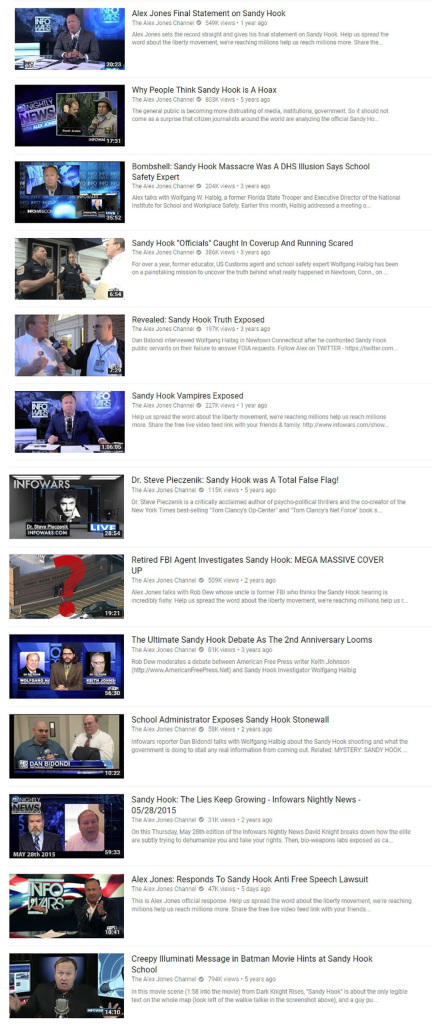

Jones is a conspiracy theorist who for 5.5 years has been calling them liars. Since 2012, Jones has been pushing his skepticism about the massacre at Sandy Hook Elementary School, in Newtown, Connecticut, that left 20 students and six educators dead, with scores of videos on his Infowars YouTube channel that have never been removed.

In those videos, Jones has over the years said that the Sandy Hook shooting has “inside job written all over it,” has called the shooting “synthetic, completely fake, with actors, in my view, manufactured,” has claimed “the whole thing was fake,” said that the massacre was “staged,” called it a “giant hoax,” suggested that some victims’ parents lied about seeing their dead children, and promoted other conspiracy theories (Sandy Hook is only one of his many focuses).

Recently, Media Matters for America – a nonprofit dedicated to monitoring, analyzing, and correcting conservative misinformation in US media – announced that its own compilation of Jones’s conspiracy theories about Sandy Hook was flagged and removed.

The message the group saw when staff logged in to the nonprofit’s YouTube account included a section called “Video content restrictions” that described reasons why videos might be taken down:

> YouTubers share their opinions on a wide range of different topics. However, there’s a fine line between passionate debate and personal attacks. As our Community Guidelines outline, YouTube is not a platform for things like predatory behavior, stalking, threats, harassment, bullying, or intimidation. We take this issue seriously and there are no excuses for such behavior. We remove comments, videos, or posts where the main aim is to maliciously harass or attack another user. If you’re not sure whether or not your content crosses the line, we ask that you not post it.

That made sense: the content is pretty objectionable. What didn’t make sense is that the original source material it borrowed from was left untouched. Why were the original videos from which the compilation was made not flagged and removed too?

The group appealed, and the compilation video was reposted.

Google told me that the removal of Media Matters’ Alex Jones compilation video was simply a mistake, a false positive. From a statement sent by a Google spokesperson:

> With the massive volume of videos on our site, sometimes we make the wrong call. When it’s brought to our attention that a video or channel has been removed mistakenly, we act quickly to reinstate it. We also give uploaders the ability to appeal these decisions and we will re-review the videos.
>
> As we rapidly hire and train new content reviewers, we are seeing more mistakes. We take every mistake as an opportunity to learn and improve our training. This incident was a human review error.

Those concerned might have expected that both the compilation and the originals would be removed as a result of the appeal but, in fact, both are considered OK by Google and fall on the right side of YouTube’s community guidelines.

The mistake shows some positive aspects of YouTube’s approach to video removal – human judgement is being used when the machine learning algorithms falter and, in this case at least, the rules were applied even-handedly in the end.

It also highlights a question that Silicon Valley is just beginning to grapple with – if you are going to have community standards that are stricter than those required to meet your legal obligations, where do you draw the line?

Why are the videos that turn Sandy Hook parents into victims OK?

Is it simply the price of free speech? Or is it, as ex-Reddit mogul Dan McComas suggested only last week, that big organisations like Reddit, Facebook and Twitter are reluctant to make decisions, or to upset groups of users who are known to be volatile?

> I think that the biggest problem that Reddit had and continues to have, and that all of the platforms, Facebook and Twitter, and Discord, now continue to have is that they’re not making decisions, is that there is absolutely no active thought going into their problems.

Jones has, in fact, come close to being banned from YouTube. In February, The Hill reported that Infowars was “one strike away” from a YouTube ban.

Jones’s channel got its first strike on 23 February for a video that suggested that David Hogg and other student survivors of the mass shooting at Marjory Stoneman Douglas high school in Parkland, Florida, were crisis actors. The video, “David Hogg Can’t Remember His Lines In TV Interview,” was removed for violating YouTube’s policies on bullying and harassment.

The second strike was on a video that was also about the Parkland shooting. The consequence of getting two strikes within three months was a two-week suspension during which the account couldn’t post new content. A third strike within three months would mean Infowars would get banned from YouTube. At the time, Infowars had more than two million YouTube subscribers.
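The strike rules described above can be sketched in a few lines of code. This is only an illustration of the policy as the article reports it, not YouTube's actual enforcement logic. The function name `consequence`, the 90-day approximation of "three months", and the idea that strikes stop counting once they fall outside that window are all assumptions made for the example.

```python
from datetime import date, timedelta

# Assumption: "three months" is approximated as 90 days.
STRIKE_WINDOW = timedelta(days=90)


def consequence(strike_dates: list[date], today: date) -> str:
    """Return the penalty implied by the strikes still inside the window.

    Hypothetical helper: it mirrors the rules as reported in the article,
    not any published YouTube API or internal system.
    """
    # Count only strikes issued on or before `today` and within the window.
    active = [d for d in strike_dates if timedelta(0) <= today - d <= STRIKE_WINDOW]
    if len(active) >= 3:
        return "channel banned"
    if len(active) == 2:
        return "two-week posting suspension"
    if len(active) == 1:
        return "first strike (penalty not described in the article)"
    return "no active strikes"


first_strike = date(2018, 2, 23)    # the 23 February strike reported above
second_strike = date(2018, 2, 27)   # hypothetical date: the article does not give one

print(consequence([first_strike], date(2018, 2, 24)))
print(consequence([first_strike, second_strike], date(2018, 3, 1)))
```

Under these assumptions, a channel with two recent strikes sits one violation away from a ban, which is the position The Hill described Infowars as being in.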

Last month, YouTube said it was planning to post excerpts from credible sources onto pages containing videos about hoaxes and conspiracy theories, in order to provide more context for viewers.

But when the Wall Street Journal provided examples of how YouTube still promotes deceptive and divisive videos, it said that YouTube execs acknowledged that the video recommendations were a problem. The newspaper quoted YouTube’s product-management chief for recommendations, Johanna Wright:

> We recognize that this is our responsibility [and] we have more to do.

Last Monday, two defamation lawsuits were filed against Alex Jones by Sandy Hook parents. Those parents claim that Jones’s “repeated lies and conspiratorial ravings” have led to death threats.

Another lawsuit has been filed against Jones by a man whom Infowars incorrectly identified as the Parkland, Florida, school shooter.

A cynical view might see nothing but profit as being responsible for YouTube keeping Jones’s content up. But that view doesn’t jibe with the strenuous technology efforts YouTube is putting into automatic filtering of violative content, and the platform certainly isn’t sparing the mass hiring of humans to review flagged content.

So what does that leave? It leaves YouTube’s community policies. Jones has violated policies against bullying twice, but he evidently hasn’t violated policies a third, three-strikes-you’re-out time.

His videos contain no nudity or sexual content, no threats, and no violent or graphic content. Nor does Jones incite hatred against specific groups – at least, not those named in YouTube’s policies, which rule out promoting or condoning violence against “individuals or groups based on race or ethnic origin, religion, disability, gender, age, nationality, veteran status or sexual orientation/gender identity.”

But what about those Sandy Hook parents, who claim that Jones’s conspiracy theories have led to death threats?

This isn’t a technology problem – the machine-learning technology is doing the job it’s supposed to do and it’s getting better all the time.

This is a guidelines problem – “Sandy Hook parents” aren’t a named category in YouTube’s policies about protected groups so we’re stuck with content that casts grieving parents as liars.

“This can be a delicate balancing act, but if the primary purpose is to attack a protected group, the content crosses the line,” YouTube says. Delicate? You can say that again.

For all the progress made in machine learning, it still looks like we’re only just beginning to figure out how best to police something like YouTube, with its billion monthly users, in all of their weird, outrageous, amusing, informative and occasionally deeply offensive variety.

Article source: http://feedproxy.google.com/~r/nakedsecurity/~3/lvwPPewfwS8/