How to protect your Facebook data [UPDATED]

How do you protect your data following the ongoing revelations that Facebook can’t, or won’t?

Well, first you do fill-in-the-blank, and then you wait for an hour, because Facebook’s sure to squirt out another data privacy overhaul in this Cambridge Analytica, CubeYou, data-spillage-induced fix-it time.

Not to complain about the data privacy overhauls, mind you. It’s just hard to keep up.

Here’s the latest box of muffins, fresh as of mid-April. Note that much of this is copy-pasted from our 20 March set of protect-your-data muffins, at least one of which was rendered stale a week later.

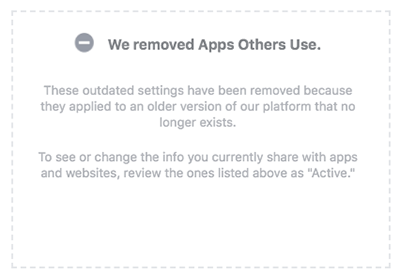

One of the changes was to do away with the Apps Others Use privacy option, which formerly allowed users to control how they share their data with third-party apps.

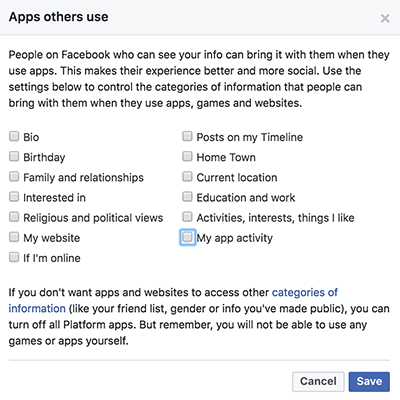

Up until Facebook’s privacy settings update late last month, Apps Others Use was located on the Apps settings page and gave you a slew of data categories to control which types of your data people could bring with them when they used apps, games and websites. Here’s what it looked like:

Now, it’s just a grayed-out option:

Facebook ditched Apps Others Use because it was made redundant years ago, after the platform stopped people from sharing friends’ information with developers, a spokesperson told Wired:

“These controls were built before we made significant changes to how developers build apps on Facebook. At the time, the Apps Others Use functionality allowed people to control what information could be shared to developers. We changed our systems years ago so that people could not share friends’ information with developers unless each friend also had explicitly granted permission to the developer.”

In other words, the privacy control was like a light switch that wasn’t connected to a light: toggling it didn’t do anything at all.

As you can see in the image above, Facebook’s data oversharing – which enabled quiz developer Aleksandr Kogan to harvest data from tens of millions of Facebook users and illicitly send it on to the political targeted-marketing data firm Cambridge Analytica – was thorough. Apps your friends use could get at your birthdate, whether or not you were online, your religious and political views, your interests, posts on your Timeline, and much more.

No wonder that Cambridge Analytica could get at so much data: we’re talking about as many as 87 million users, including CEO Mark Zuckerberg himself, as he revealed in testimony before the House and Senate last week. On top of that, Facebook has said that “most people on Facebook” could have had their public profile information scraped via its search and account recovery tools.

Note also that Facebook didn’t offer checkboxes to ensure that we could prevent apps from getting at our gender, friends list, or public information: to do so, you’d have to turn off all apps on the platform.

At any rate, back to the muffins. These should all be fresh, but if Facebook bakes some new privacy changes, we’ll be heading back into the kitchen to keep you updated.

Check your privacy settings

We’ve written about this quite a bit. Here’s a good guide on how to check your Facebook settings to make sure your posts aren’t searchable, for starters.

That post also includes instructions on how to check how others view you on Facebook, how to limit the audience on past Facebook posts, and how to lock down the privacy on future posts.

The security and privacy settings changes Facebook promised at the end of March fall into these three buckets:

- A simpler, centralized settings menu. Facebook redesigned the settings menu on mobile devices “from top to bottom” to make things easier to find. No more hunting through nearly 20 different screens: now, the settings will be accessible from a single place. Facebook also got rid of outdated settings to make it clear what information can and can’t be shared with apps. The new version not only regroups the controls but also adds descriptions on what each involves.

- A new privacy shortcuts menu. The dashboard will bring together into a central spot what Facebook considers to be the most critical controls: for example, the two-factor authentication (2FA) control; control over personal information so you can see, and delete, posts; the control for ad preferences; and the control over who’s allowed to see your posts and profile information.

- Revised data download and edit tools. There will be a new page, Access Your Information, where you can see, and delete, what data Facebook has on you. That includes posts, reactions and comments, and whatever you’ve searched for. You’ll also be able to download specific categories of data, including photos, from a selected time range, rather than going after a single, massive file that could take hours to download.

Not all of these changes have taken place yet, so it’s a good idea to keep checking in on the settings periodically to see if anything has changed.

Audit your apps

You should always be careful about which Facebook apps you allow to connect with your account, as they can collect varying levels of information about you.

People still get surprised to see what they’ve opted into sharing with various apps over the years, so again, it’s smart to audit apps regularly.

To audit which apps are doing what:

1. On Facebook in your browser, drop down the arrow at the top right of your screen and click Settings. Then click the Apps and Websites tab for a list of apps connected to your account.

2. Check out the permissions you granted to each app to see what information you’re sharing, and remove any apps that you no longer use or don’t recognize.

And finally, if you’re ready to disengage entirely, there’s the cut-it-out-completely option:

Delete your profile.

This is a lot more serious than simply deactivating your profile. When you deactivate, Facebook still has all your data. To truly remove your data from Facebook’s sweaty grip, deletion is the way to go.

But stop: don’t delete until you’ve downloaded your data! Here’s how:

1. On Facebook in your browser, drop down the arrow at the top right of your screen and click Settings.

2. At the bottom of General Account Settings, click Download a copy of your Facebook data.

3. Choose Start My Archive.

Be careful about where and how you keep that file. It does, after all, have all the personal information you’re trying to keep safe in the first place.
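If you’re comfortable running a script, here’s a minimal Python sketch of one way to tidy up after the download. It assumes you’ve saved the archive somewhere on your own computer; the function name `lock_down` and the file path in the usage note are made up for illustration. It restricts the file so that only your own user account can read it, and it computes a SHA-256 fingerprint so you can later check that a backup copy is identical. Note that this is not encryption: you’d still want to keep the file on an encrypted disk or in an encrypted container. Also, on Windows the permission change only affects the read-only flag.

```python
import hashlib
import os
import stat
from pathlib import Path


def lock_down(archive: Path) -> str:
    """Restrict the file to its owner and return its SHA-256 hex digest.

    `archive` is whatever path you saved the download to (an assumption;
    Facebook doesn't dictate where the file ends up on your machine).
    """
    # Owner may read and write; group and others get no access (POSIX).
    os.chmod(archive, stat.S_IRUSR | stat.S_IWUSR)

    # Hash the file in 1 MB chunks so large archives don't fill up memory.
    digest = hashlib.sha256()
    with archive.open("rb") as fh:
        for chunk in iter(lambda: fh.read(1024 * 1024), b""):
            digest.update(chunk)
    return digest.hexdigest()
```

To use it, you’d call something like `lock_down(Path("facebook-archive.zip"))` (a hypothetical file name) and jot down the fingerprint it returns. If a backup copy gives you the same fingerprint later, it hasn’t been altered or corrupted.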

You ready?

Have you downloaded the data? Have you encrypted it or otherwise stored it somewhere safe? OK, take a deep breath. Here comes the doomsday button.

Go to Delete My Account.

Blow the platform a kiss, and away you go.

Now, are you truly gone forever? It’s worth asking, given all the data Facebook collects on people who never even signed up for the service – the data that forms what’s known as shadow profiles.

Facebook has also said that it keeps “backup copies” of deleted accounts for a “reasonable period of time,” which can be as long as three months.

CBS News notes that Facebook also may retain copies of “some material” from deleted accounts, but that it anonymizes the data.

Facebook also retains log data – including when users log in, click on a Facebook group or post a comment – forever. That data is also anonymized.

One type of data about you that you won’t be able to delete: anything posted by your friends and family. As long as your BFFs keep their profiles active, whatever they’ve shared about you won’t be deleted along with your own account. After all, it’s not your content. That includes, for example, the messages you’ve sent to others via the platform.

Article source: http://feedproxy.google.com/~r/nakedsecurity/~3/8ZRlQH0SWxg/