Yet another Apple password leak – how to avoid it

Mac forensics guru Sarah Edwards, who blogs under the cool nickname of mac4n6 (say it out loud, slowly and deliberately), recently wrote about a rather worrying Mac password problem.

Another Mac password problem, that is – or, to be more precise, yet another password problem.

Apple has ended up with password egg on its face twice before since the release of macOS 10.13 (High Sierra).

First was the “password plaintext stored as password hint” bug, where macOS used your password as your password hint, so that clicking the [Show Hint] button after plugging in a removable drive would immediately reveal the actual password instead.

Second was the “blank root password” hole, whereby trying to log on as root with a blank root password would inadvertently enable the root account, and leave it enabled with no password.

Mac4n6’s new password bug isn’t quite that serious, but it’s still a bad look for Apple: under some conditions, the password you choose when creating an encrypted disk ends up written into the system log.

That sort of behaviour is a serious no-no: some sorts of data just aren’t meant to be stored.

The 3-digit CVV code on the back of your credit card is one example – you’re only supposed to use CVVs to validate individual transactions, and you’re not allowed to store them for later.

And passwords are another example – your password should never be stored in its raw, plaintext form, so that it’s never left lying around where someone else might stumble upon it, such as in the system logs.

Apple’s leaky logging

Here’s what we did and what happened, based on what we learned from Mac4n6’s article.

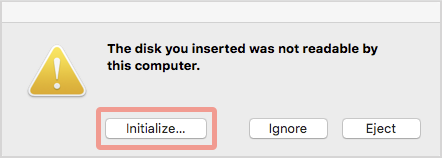

We plugged in a blank USB drive that macOS wouldn’t recognise or mount. (To blank the disk in the first place, we used macOS’s handy but potentially dangerous diskutil zeroDisk utility.)

After a short while, macOS displayed its familiar “disk not readable” popup, at which point we clicked [Initialize...] to launch the Mac Disk Utility app:

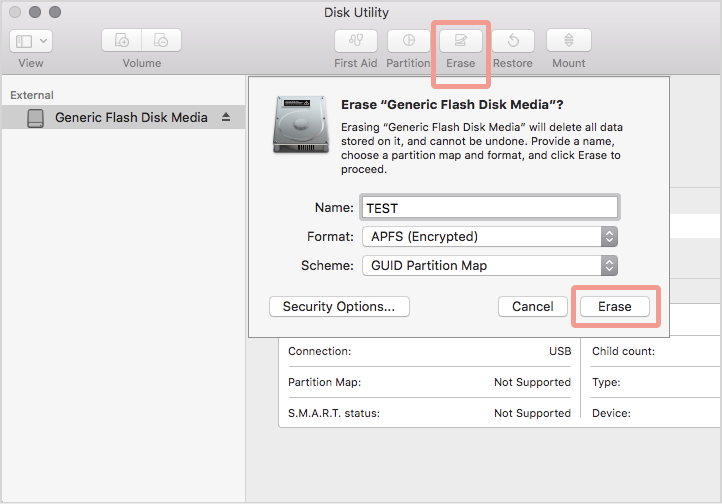

We used the Erase option to initialise a blank volume using APFS, Apple’s new-and-groovy filing system introduced in macOS 10.13.

We created an APFS (Encrypted) volume called TEST, entering the password text when requested:

Once the new APFS volume was created and visible in Disk Utility, we reviewed the system log for recent invocations of the newfs system command.

On Macs, newfs is much like mkfs on Linux and FORMAT on Windows – the low-level command used to prepare a new volume for use with a specific filing system:

    $ log stream --info --predicate 'eventMessage contains "newfs"'
    Filtering the log data using "eventMessage CONTAINS "newfs""
    Timestamp ..... Command
    2018-03-28 10:59:23 /System/Library/Filesystems/hfs.fs/Contents/Resources/newfs_hfs -J -v TEST /dev/rdisk2s1 .
    2018-03-28 10:59:35 /System/Library/Filesystems/apfs.fs/Contents/Resources/newfs_apfs -C disk2s1 .

Curiously, Disk Utility first created an old-school HFS volume called TEST using the specific utility newfs_hfs. That looks redundant considering what happens next, but it is presumably a side-effect of partitioning the uninitialised USB device for use.

Next, Disk Utility used newfs_apfs to convert the drive into a so-called APFS container – that’s like an APFS “disk within a disk” that can be divided up further.

However, the final newfs_apfs command by which the APFS encrypted volume itself gets created wasn’t logged, presumably as a security measure to prevent the password ending up in the logs.

Reformatting an APFS drive

Unfortunately, if you create an encrypted volume on a USB device that already contains an APFS container – for example, a device you’ve initialised or reinitialised since macOS 10.13 came out – then no such logging precautions are taken.

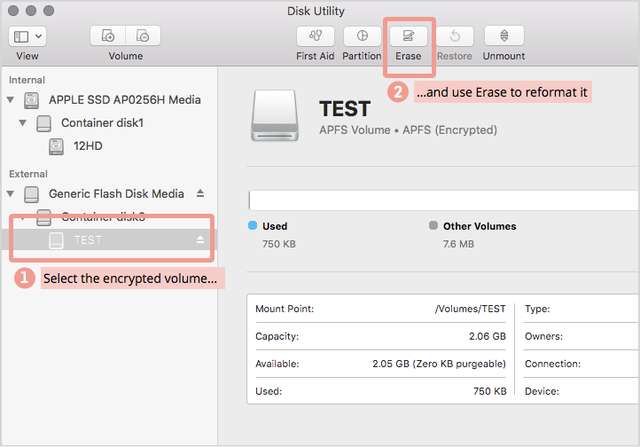

We went back into Disk Utility, clicked on the already-mounted volume TEST, and once again used Erase to create an APFS (Encrypted) volume with a password.

This is a handy way to reformat a USB drive and choose a new password at the same time:

This time, the system log revealed just one invocation of newfs, with the command line parameters used to reformat the existing APFS volume, including the plaintext of the password we just typed in:

    $ log stream --info --predicate 'eventMessage contains "newfs"'
    Filtering the log data using "eventMessage CONTAINS "newfs""
    Timestamp ..... Command
    2018-03-28 11:01:23 /System/Library/Filesystems/apfs.fs/Contents/Resources/newfs_apfs -i -E -S pa55word -v TEST disk3s1 .

According to Mac4n6, this “leak the password to the system log” behaviour always happens in macOS 10.13 and 10.13.1, even when you’re initialising a brand new device, so it’s a good guess that Apple made some changes in 10.13.2 in order to be more cautious with the plaintext password…

…but it’s also a good guess that Apple didn’t identify all possible Disk Utility workflows in which passwords get logged, and thus didn’t get around to fixing this one.
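To check whether log text contains entries like the one above, one option is to filter it for newfs_apfs invocations that carry a -S argument. The sketch below is a minimal, hypothetical helper (the name find_leaked_newfs is ours, not Apple’s or Mac4n6’s). It assumes the password contains no spaces, as in our test, and it masks the password so that checking for a leak doesn’t put it on screen again.

```shell
#!/bin/sh
# Hypothetical helper: read log text on stdin and print only the
# newfs_apfs command lines that include a -S argument, with the
# argument's value masked.
# Assumption: the password contains no spaces.
find_leaked_newfs() {
    grep -E 'newfs_apfs .*-S [^ ]+' |
        sed -E 's/(-S )[^ ]+/\1[REDACTED]/'
}

# Possible use on a Mac (assumes the last day of logs is of interest):
#   log show --info --predicate 'eventMessage contains "newfs"' --last 1d | find_leaked_newfs
```

Any line this prints means a disk password was written to the log in plaintext, so that password should be changed.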

What to do?

Assuming you have your Mac’s built-in disk encrypted (you really should!), an attacker can’t just steal your computer and read off the passwords for all your other devices – they’d have to know your Mac password first.

But a quick-fingered crook (or an ill-intentioned colleague) with access to your unlocked Mac, even for just a few seconds, could use this bug to try to recover any disk passwords you’ve chosen recently.

Fortunately, you can change the password on existing APFS volumes in a way that doesn’t (as far as we can see) leave any password traces in the log.

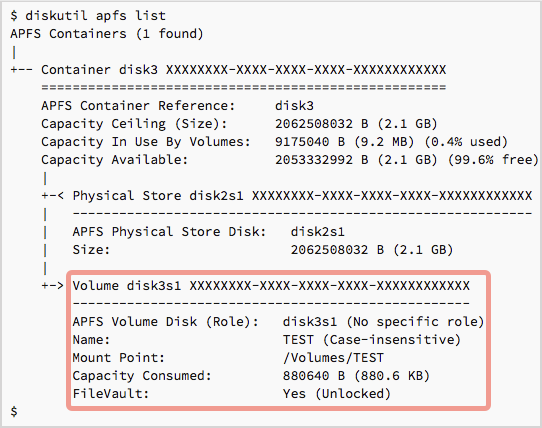

Mount your encrypted disk and figure out its macOS device name using diskutil apfs list:

Once you know the drive’s device name, you can change the APFS password directly, without reformatting it, with diskutil apfs changePassphrase, like this:
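The two steps above can be sketched as follows. The device name disk3s1 is simply the one from our test and will differ on your Mac, and the wrapper function and its DRY_RUN variable are hypothetical conveniences of ours, not part of diskutil. As far as we know, when no passphrase arguments are supplied, diskutil prompts for the old and new passphrases itself, so neither appears on the command line.

```shell
#!/bin/sh
# Step 1 (run by hand on the Mac): list APFS containers and volumes
# to find the device name of the encrypted volume, e.g. disk3s1:
#
#   diskutil apfs list

# Step 2: change the passphrase in place, without reformatting.
# change_apfs_passphrase and DRY_RUN are hypothetical names for this sketch.
change_apfs_passphrase() {
    dev="${1#/dev/}"              # accept disk3s1 or /dev/disk3s1
    case "$dev" in
        disk[0-9]*s[0-9]*) ;;     # looks like a volume device name
        *) echo "usage: change_apfs_passphrase diskXsY" >&2; return 2 ;;
    esac
    if [ "${DRY_RUN:-1}" = "1" ]; then
        # Default: only print the command, so nothing changes by accident.
        echo "diskutil apfs changePassphrase $dev -user disk"
    else
        # No passphrase is passed as an argument here.
        diskutil apfs changePassphrase "$dev" -user disk
    fi
}
```

By default the function only prints the command it would run; calling it as DRY_RUN=0 change_apfs_passphrase disk3s1 runs diskutil for real.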

If you’re worried about passwords left behind in your current system logs, you can purge the logs with the log erase command:

    $ sudo log erase --all
    Password: ********
    Deleted selected logs
    $

(You need to use sudo to promote yourself to a system administrator, to prevent just anyone deleting your logs.)

Where next?

This isn’t a show-stopping bug, and it’s easy to work around it if you’re happy using the command line, but it’s still a bad look for Apple.

We’re guessing it will quietly get fixed – for good, this time – in the not-too-distant future.

Article source: http://feedproxy.google.com/~r/nakedsecurity/~3/01JlRDH_l5k/