Remember the US government’s years-long battle to get Microsoft to cough up a customer’s private email stored on its servers in Ireland?

It’s back.

Actually, it never went away. In January 2017, a US appeals court turned down the government’s request to reconsider its ruling against the government’s attempts to get Microsoft to hand over the Outlook.com email, which is sought in connection with a drug trafficking prosecution.

So the government took the case to the Supreme Court of the US (SCOTUS) for more legal wrangling. It’s a case that troubles Silicon Valley tech giants, who worry that data stored abroad could be made vulnerable to government grabbing.

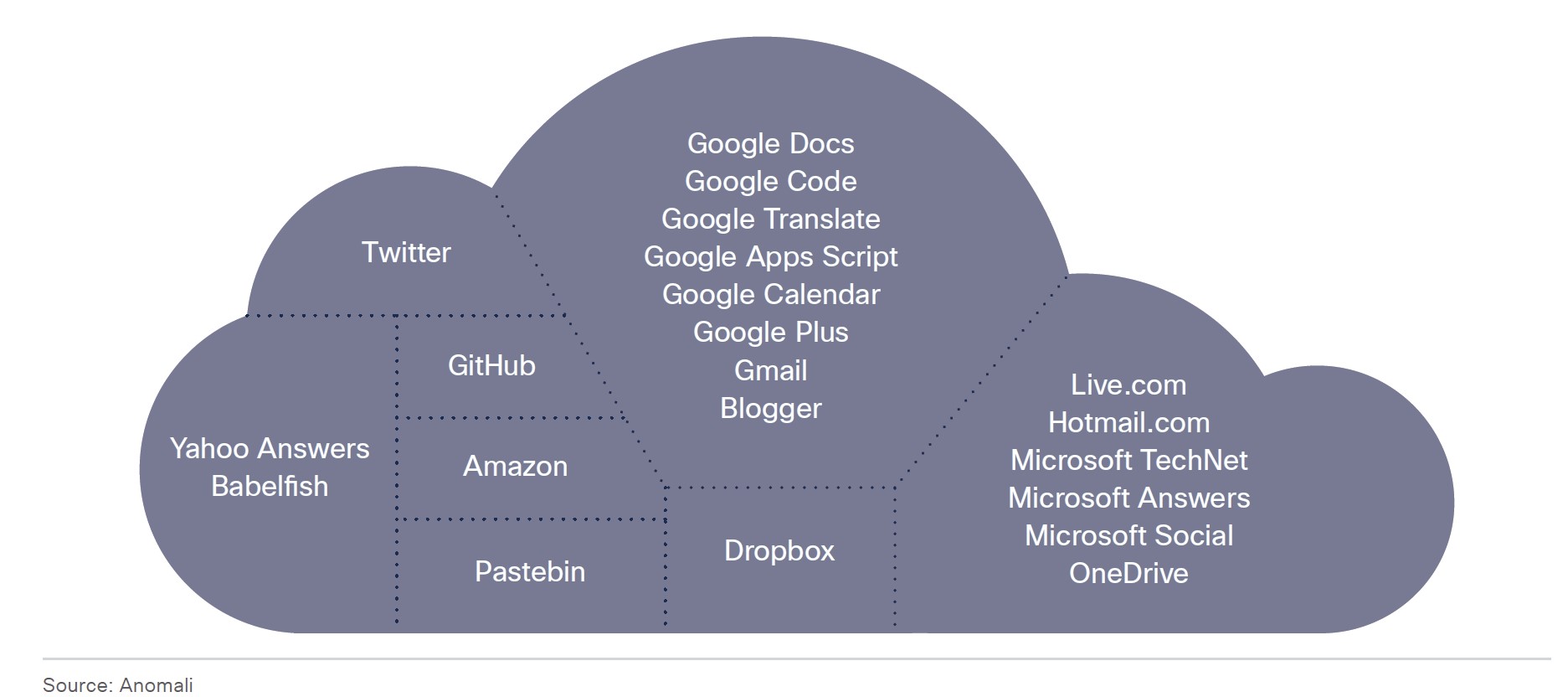

On Tuesday morning, liberal justices Sonia Sotomayor and Ruth Bader Ginsburg wondered why Congress isn’t regulating “this brave new world” of cloud storage, rather than expecting the nation’s top court to interpret the legality of a warrant obtained under the dusty old 1986 Stored Communications Act (SCA).

Justice Ginsburg, from oral arguments:

[In 1986,] no one ever heard of clouds. This kind of storage didn’t exist… If Congress takes a look at this, realizing that much time and… innovation has occurred since 1986, it can write a statute that takes account of various interests.

Wouldn’t it be wiser to say, ‘Let’s leave things as they are. If Congress wants to regulate this “brave new world,” let them do it’?

In fact, a bill with bipartisan support was introduced last month to address the potential problems that the government’s interpretation of the SCA would create. In Microsoft’s view, that interpretation amounts to “unilaterally” reaching into a foreign land to “search for, copy, and import private customer correspondence” even though it’s protected by foreign law.

It’s known as the Clarifying Lawful Overseas Use of Data (CLOUD) Act.

That bill won’t come into play here, however, as the Department of Justice (DOJ) pointed out. It’s still freshly hatched and not yet law, and that leaves SCOTUS stuck with interpreting the SCA. The issue can’t wait, according to DOJ lawyer Michael Dreeben: the 2nd Circuit Court of Appeals ruling has already “caused grave and immediate harm to the government’s ability to enforce federal criminal law.” It has to be resolved now, Dreeben said.

DOJ prosecutors have been claiming that allowing Microsoft to spurn the warrant is tantamount to encouraging companies to evade the law by keeping sensitive data overseas. No, Microsoft says, that’s how we keep our customers from ditching us over lack of data privacy.

Microsoft’s lawyer, E. Joshua Rosenkranz:

If people want to break the law and put their emails outside the reach of the US government, they simply wouldn’t use Microsoft.

Dreeben said that Microsoft is creating a “mirage” with its insistence that complying with the government’s warrant would break strong overseas privacy laws.

Justice Sotomayor’s response, in essence: Really? All the amicus briefs from other technology companies are telling us the same thing as Microsoft. Are those companies also fabricating a mirage?

Those briefs make arguments similar to the ones in amicus briefs that Apple, Amazon, Microsoft and Cisco submitted in March 2017 as they lined up to support Google, which is also resisting a warrant to seize email stored on overseas servers.

Rosenkranz said that the old law just doesn’t jibe with our current technological reality:

This is a very new phenomenon, this whole notion of cloud storage in another country. We didn’t start doing it until 2010. So the fact that we analyzed what our legal obligations were and realized, ‘Wait a minute, this is actually an extraterritorial act that is unauthorized by the US Government.’ The fact that we were sober-minded about it shouldn’t be held against us.

Sober, as in, not summarily marching into Ireland and demanding information.

Justice Anthony Kennedy wondered about the “binary” choice between focusing on where the email is stored (Ireland, where disclosure would be against foreign law) and focusing on where Microsoft would comply with the order to hand it over (the US, where Microsoft is located and hence within jurisdiction):

Why should we have a binary choice between a focus on the location of the data and the location of the disclosure? Aren’t there some other factors, where the owner of the email lives or where the service provider has its headquarters?

Actually, we don’t know where the owner of the email lives, Justice Samuel Alito said. So what does Ireland have to do with anything?

Well, if this person is not Irish, and Ireland played no part in your decision to store the information there, and there’s nothing that Ireland could do about it if you chose tomorrow to move it someplace else, it is a little difficult for me to see what Ireland’s interest is in this.

On the contrary, Ireland’s interest in this case is the same as ours, Rosenkranz responded – or, for that matter, the same as that of any sovereign nation that has laws to protect data stored within its borders:

Your Honor, Ireland’s interests are the same interest of any sovereign who protects information stored where – within their domain. We protect information stored within the United States, and we don’t actually care whose information it is because we have laws that guard the information for everyone.

SCOTUS is expected to return a decision within the coming months.

Article source: http://feedproxy.google.com/~r/nakedsecurity/~3/ben3JEyCH8E/