The sound made by your computer could give away your encryption keys…

One of the first computers I was ever allowed to use all on my own was a superannuated ICL-1901A, controlled from a Teletype Model 33.

One of the processor’s address lines was wired up to a speaker inside the teletype, producing an audible click every time that address bit changed.

The idea was that you could, quite literally, listen to your code running.

Loops, in particular, tended to produce recognisable patterns of sound, as the program counter iterated over the same set of memory addresses repeatedly.

This was a great help in debugging – you could count your way through a matrix multiplication, for instance, and keep track of how far your code ran before it crashed.

You could even craft your loops (or the data you fed into them) to produce predictable frequencies for predictable lengths of time, thus producing vaguely tuneful – and sometimes even recognisable – musical output.

Plus ça change, plus c’est la même chose

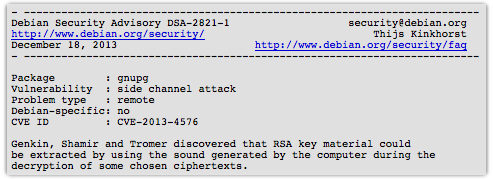

So it was with considerable amusement that I read a recent Debian security advisory that said:

Genkin, Shamir and Tromer discovered that RSA key material could be extracted by using the sound generated by the computer during the decryption of some chosen ciphertexts.

(The Shamir amongst the authors is the same Adi Shamir who is the S in RSA.)

Their paper, which is well worth reading even if you are neither a mathematician nor a cryptographer, just to see the research process that the authors followed, reaches a remarkable conclusion.

You can still “listen to your loops,” even on a recent multi-gigahertz laptop.

In fact, as the authors show by means of a working (if somewhat impractical) attack, you can listen in to other people’s loops, assuming you have a mobile phone or other microphone handy to do your audio eavesdropping, and recover, bit by bit, their RSA private keys.

Remember that with a victim’s private email key, you can not only read their most confidential messages, but also send digitally signed emails of apparently unimpeachable veracity in their name.

First steps

The authors started out by creating a set of contrived program loops, just to see if there was any hope of telling which processor instructions were running based on audio recordings made close to the computer.

The results were enough to convince them that it was worth going further:

In the image above, time runs from top to bottom, showing the audio frequency spectrum recorded near the voltage regulation circuitry (the acoustic behaviour of which varies with power consumption) as different instructions are repeated for a few hundred milliseconds each.

ADDs, MULtiplies and FMULs (floating point multiplications) look similar, but nevertheless show differences visible to the naked eye, while memory accesses cause a huge change in spectral fingerprint.
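To get a feel for what a "spectral fingerprint" is, here is a minimal sketch in pure Python that finds the strongest frequency in a block of samples using a naive discrete Fourier transform. Everything in it is an assumption made for illustration: the sine waves stand in for real recordings, and the sample rate, tone frequencies and function names are invented. Real leakage is far fainter and noisier than this, and the authors' own tooling is not shown here.

```python
import cmath
import math


def dft_magnitudes(samples):
    """Magnitude of each frequency bin up to (not including) Nyquist."""
    n = len(samples)
    return [
        abs(sum(samples[t] * cmath.exp(-2j * math.pi * k * t / n)
                for t in range(n))) / n
        for k in range(n // 2)
    ]


def dominant_frequency(samples, sample_rate):
    """Frequency (Hz) of the strongest non-DC bin."""
    mags = dft_magnitudes(samples)
    peak_bin = max(range(1, len(mags)), key=mags.__getitem__)
    return peak_bin * sample_rate / len(samples)


def tone(freq_hz, sample_rate, n):
    """Synthetic stand-in for a recording: a pure sine wave."""
    return [math.sin(2 * math.pi * freq_hz * t / sample_rate)
            for t in range(n)]


if __name__ == "__main__":
    rate, n = 8000, 400  # hypothetical values; bin width is rate / n = 20 Hz
    for label, freq in (("loop A", 1000), ("loop B", 1500)):
        print(label, dominant_frequency(tone(freq, rate, n), rate))
```

A spectrogram is essentially this calculation repeated over successive short slices of the recording, with the results stacked up along the time axis.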

Digging deeper

Telling whether a computer is adding or multiplying in a specially-coded loop doesn’t get you very far if your aim is to attack the internals of an encryption system.

Nevertheless, the authors went on to do just that, encouraged by their initial, albeit synthetic, success.

Their next result was to discover that they could determine which of a number of RSA keys was being used, just by listening in to the encryption of a fixed message using each key in turn:

Above, with time once again running from top to bottom, you can see slight but detectable differences in acoustic pattern as the same input text is encrypted five times with five different keys.

This is called a key distinguishing attack.

Differentiating amongst keys may not sound like much of a result, but an attacker who has no access to your computer (or even to the network to which it is connected) should not be able to tell anything about what you are encrypting, or how.

Anyway, that was just the beginning: the authors ultimately went much further, contriving a way in which a particular email client, bombarded with thousands of carefully-crafted encrypted messages, might end up leaking its entire RSA private key, one bit at a time.

Mitigations

The final attack presented by the authors – recovering an entire RSA private key – requires:

- A private key that is not password protected, so that decryption can be triggered repeatedly without user interaction.

- An email client that automatically decrypts incoming emails as they are received, not merely if or when they are opened.

- The GnuPG 1.4.x RSA encryption software.

- Accurate acoustic feedback from the decryption of message X, needed to compute what data to send in message (X+1).

→ The fourth requirement means that this is an adaptive chosen-ciphertext attack: you need feedback from the decryption of the first message before you can decide what to put into the second message, and so on. You can’t simply construct all your attack messages in advance, send them in bulk, and then extract the key material. Of course, this means you need a live listening device that can report back to you in real time – a mobile phone rigged for surveillance, for example – somewhere near the victim’s computer.
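The "adaptive" part can be illustrated with a toy simulation. This is a sketch under loud assumptions, not the authors' code: the secret is just an integer, and a plain comparison (`candidate < secret`) stands in for the yes/no answer that the real attack has to tease out of the acoustic signal. The function and parameter names are hypothetical. What it does show is why each message depends on the answer to the previous one.

```python
def recover_secret(bit_length, candidate_is_smaller):
    """Recover a secret integer, most significant bit first.

    candidate_is_smaller(c) is a hypothetical yes/no side channel that
    reports whether c is less than the secret.
    """
    known = 0
    queries = 0
    for i in range(bit_length - 1, -1, -1):
        # Bits above i are already known; guess 0 at bit i, all 1s below.
        candidate = known | ((1 << i) - 1)
        queries += 1
        if candidate_is_smaller(candidate):
            # The secret is larger, so its bit i must be 1.
            known |= 1 << i
    return known, queries


if __name__ == "__main__":
    secret = 0b1011001110001111  # invented 16-bit value for the demo
    recovered, queries = recover_secret(16, lambda c: c < secret)
    print(recovered == secret, queries)
```

Each candidate is built from the bits learned so far, which is why the messages cannot be prepared in bulk ahead of time: one query per bit, each shaped by the answers that came before.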

The easiest mitigation, therefore, is simply to replace GnuPG 1.4.x with its more current cousin, GnuPG 2.x.

The Version 2 branch of GnuPG has already been made resilient against forced-decryption attacks by what is known as RSA blinding.

Very greatly simplified, this exploits a quirk of how RSA works: you can multiply a random number into the input before decryption, and then divide it out again afterwards, without affecting the result.

This messes up the “adaptive” part of the attack, which relies on each ciphertext having a bit pattern determined by the attacker.
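The arithmetic behind blinding can be sketched with textbook-sized numbers. This is an illustration only, not GnuPG's implementation: the key (n = 61 × 53 = 3233, e = 17, d = 2753) is far too small for real use, the function names are made up, and the modular inverse via `pow(r, -1, N)` assumes Python 3.8 or later.

```python
import math
import secrets

# Toy RSA key for illustration only: N = 61 * 53
N, E, D = 3233, 17, 2753


def decrypt_plain(c):
    """Ordinary RSA decryption: the exponentiation sees c directly."""
    return pow(c, D, N)


def decrypt_blinded(c):
    """RSA decryption with blinding: the exponentiation sees c * r^e."""
    # Pick a fresh random r that is invertible modulo N.
    while True:
        r = secrets.randbelow(N - 2) + 2
        if math.gcd(r, N) == 1:
            break
    blinded = (c * pow(r, E, N)) % N      # attacker cannot predict this
    m_times_r = pow(blinded, D, N)        # equals (m * r) mod N
    return (m_times_r * pow(r, -1, N)) % N  # divide r back out


if __name__ == "__main__":
    message = 65
    ciphertext = pow(message, E, N)
    print(decrypt_plain(ciphertext), decrypt_blinded(ciphertext))
```

Both functions return the same plaintext, but in the blinded version the number actually fed into the private-key exponentiation changes randomly on every call, so it no longer carries a pattern chosen by whoever sent the message.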

→ If you are a GnuPG 1.x user and don’t want to upgrade to Version 2, be sure to get the latest version, as mentioned in the Debian advisory above. GnuPG 1.4.16 has been patched against this attack.

Other circumstances that may make things harder for an attacker include:

- Disabling auto-decryption of received emails.

- Putting your mobile phone in your pocket or bag before reading encrypted emails.

- The presence of background noise.

- “Decoy processes” running on other CPU cores at the same time.

Note, however, that the authors explain that background noise often has a narrow frequency band, making it easy to filter out.

Worse still, they show some measurements taken while running a decoy process in parallel, aiming to interfere with their key-recovery readings:

The extra CPU load merely reduced the frequency of the acoustic spikes they were listening out for, ironically making them easier to detect with a lower-quality microphone.

What next?

As the authors point out in two appendixes to the paper, data leakage of this sort is not limited to the acoustic realm.

They also tried measuring fluctuations in the power consumption of their laptops, by monitoring the voltage of the power supply between the power brick and the laptop power socket.

They didn’t get the accuracy needed to do full key recovery, but they were able to perform their key distinguishing attack, so exploitable data is almost certainly leaked by your power supply, too.

The authors further claim that changes in the electrical potential of the laptop’s chassis – which can be measured at a distance if any shielded cables (e.g. USB, VGA, HDMI) are plugged in, as the shield is connected to the chassis – can give results at least as accurate as the ones they achieved acoustically.

In short: expect more intriguing research into what’s called side channel analysis, and in the meantime, upgrade to GnuPG 2!

Article source: http://feedproxy.google.com/~r/nakedsecurity/~3/f6RDoaR9gaA/

![Click on the image to read the original paper [PDF]...it's well worth it!](https://stewilliams.com/wp-content/plugins/rss-poster/cache/d4300_gpg-loops-500.png)

![Click on the image to read the original paper [PDF]...it's well worth it!](https://stewilliams.com/wp-content/plugins/rss-poster/cache/d4300_gpg-differents-500.png)

![Click on the image to read the original paper [PDF]...it's well worth it!](https://stewilliams.com/wp-content/plugins/rss-poster/cache/d4300_gpg-decoy-spikes-500.png)