5 ransomware as a service (RaaS) kits – SophosLabs investigates

In recent months we’ve told you about ransomware distribution kits sold on the Dark Web to anyone who can afford them. These ransomware-as-a-service (RaaS) packages allow people with little technical skill to launch attacks with relative ease.

Naked Security has reported on individual packages, and in July we released a paper on one of the slicker, more prolific campaigns: Philadelphia.

This article takes a broader look at the problem, analyzing five RaaS kits and comparing how each is marketed and priced. The research was conducted by Dorka Palotay, a threat researcher based in SophosLabs’ Budapest, Hungary, office.

Measuring RaaS-based ransomware attacks is difficult, as the developers are good at covering their tracks. The number of samples SophosLabs has received ranges from single digits to hundreds. That doesn’t sound like much on the surface, but the question researchers now grapple with is how much sales of these kits have contributed to global ransomware levels as a whole.

The task of fighting RaaS proliferation starts with knowing what’s out there, and that’s the point of this article. Whatever the numbers are, this phenomenon has almost certainly helped the global ransomware scourge grow worse, and the number of available kits will only increase with time.

Philadelphia

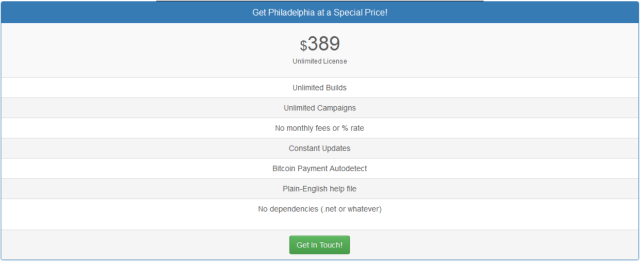

As noted above, Philadelphia is among the most sophisticated and market-savvy cases. It offers more options to personalize an attack, and $389 buys a full, unlimited license.

The RaaS kit’s creators, Rainmakers Labs, run their business the same way a legitimate software company sells its products and services. While they sell Philadelphia on marketplaces hidden on the Dark Web, they also host a production-quality “intro” video on YouTube that explains the nuts and bolts of the kit and how to customize the ransomware with a range of feature options.

Customers include an Austrian teenager police arrested in April for infecting a local company. In that case, the alleged hacker had locked the company’s servers and production database, then demanded $400 to unlock them. The victim refused, since the company was able to retrieve the data from backups.

Stampado

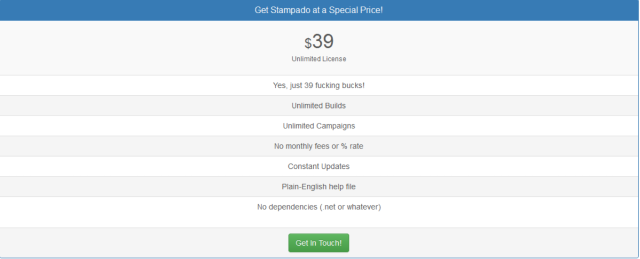

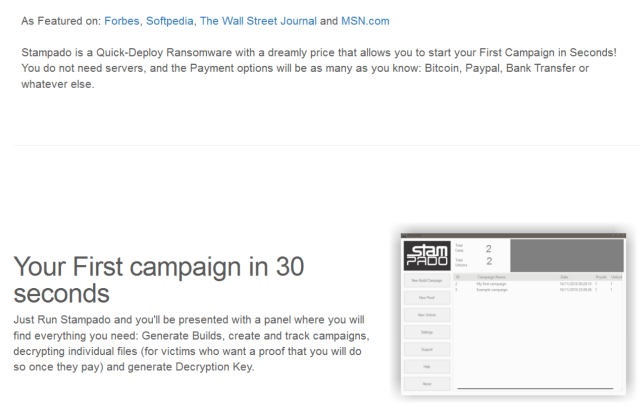

This was Rainmakers Labs’ first available RaaS kit, which they started selling in the summer of 2016 for the low price of $39.

By the end of 2016, drawing on their experience with Stampado, the developers had created the much more sophisticated Philadelphia, which incorporated much of Stampado’s makeup. They are confident enough in Philadelphia’s superiority to ask the much more substantial sum of $389 for it.

Stampado continues to be sold despite the creation of Philadelphia. The ad below is from the developer’s website:

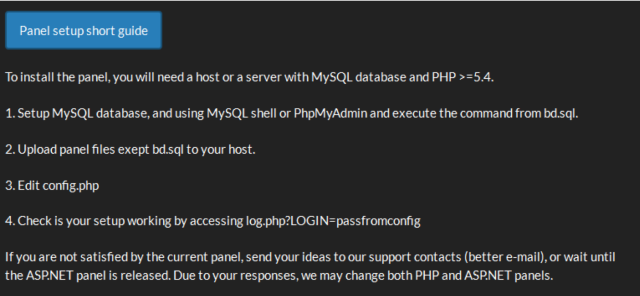

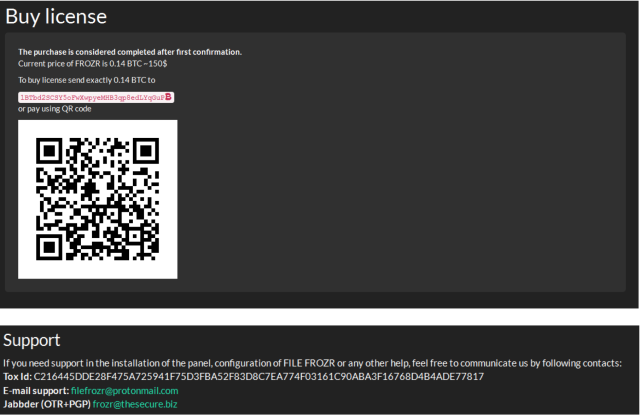

Frozr Locker

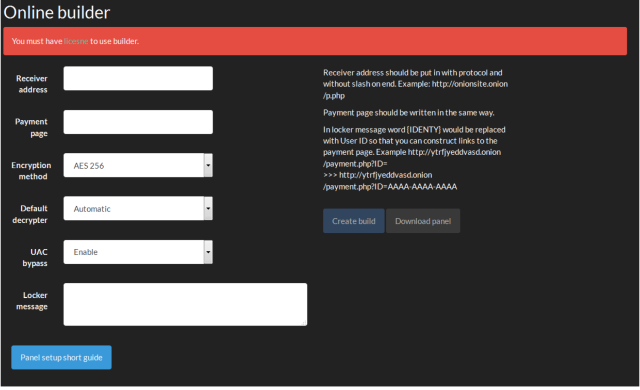

FileFrozr kits are offered for 0.14 bitcoin. Once a machine is infected, the victim’s files are encrypted.

Files with around 250 different extensions will get encrypted. The Frozr Locker page notes that people must acquire a license to use the builder:

Its creators even offer online support for customers to ask questions and troubleshoot any problems:

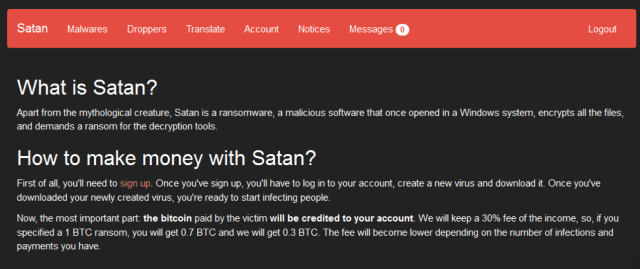

Satan

This service claims to generate a working ransomware sample and let you download it for free, allows you to set your own price and payment conditions, collects the ransom on your behalf, provides a decryption tool to victims who pay up, and pays out 70% of the proceeds via Bitcoin.

Its creators keep the remaining 30% of the income, so if a victim pays a ransom worth 1 bitcoin, the customer receives 0.7 bitcoin.

The fee moves higher or lower depending on the number of infections and payments the customer is able to accumulate.
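To make the arithmetic concrete, here is a minimal Python sketch of the split described above. The function name `customer_payout` and the `fee_rate` parameter are our own, chosen purely for illustration. The 30% default comes from the figures quoted here, and how the service actually adjusts its fee is not something this sketch tries to model.

```python
from decimal import Decimal


def customer_payout(ransom_btc: Decimal, fee_rate: Decimal = Decimal("0.30")) -> Decimal:
    """Return the share of a ransom payment passed on to the kit's customer.

    fee_rate is the fraction kept by the kit's creators (assumed 30% by default).
    """
    if not Decimal("0") <= fee_rate <= Decimal("1"):
        raise ValueError("fee_rate must be between 0 and 1")
    return ransom_btc * (Decimal("1") - fee_rate)


# A 1 bitcoin ransom at the default 30% fee leaves 0.70 bitcoin for the customer.
print(customer_payout(Decimal("1")))  # prints 0.70
```

Decimal arithmetic is used rather than floats so that fractions of a bitcoin are not distorted by binary rounding.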

When creating a sample of Satan to send out into the world, customers fill out the form below to set up the payment scheme. It includes a captcha box to check that a human, not a bot, is filling it in.

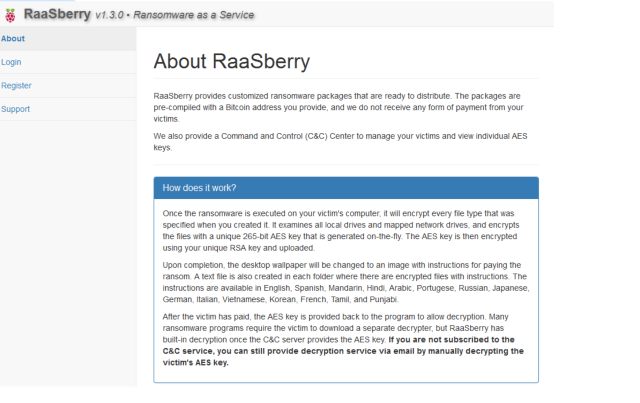

RaasBerry

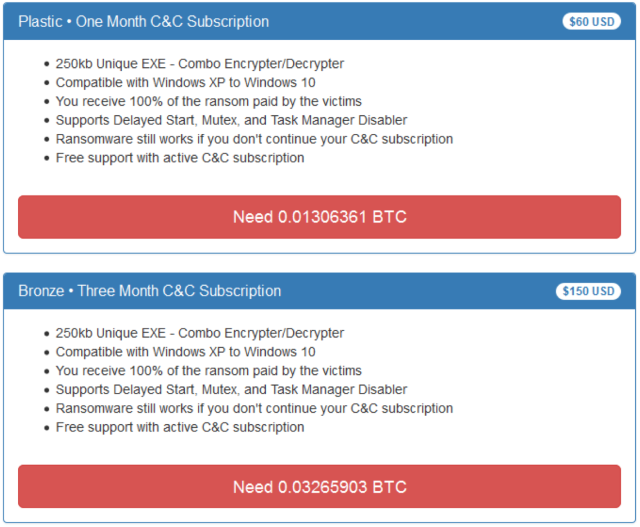

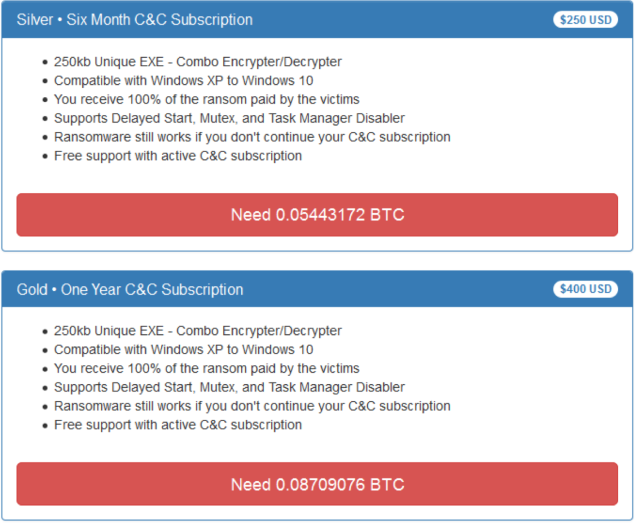

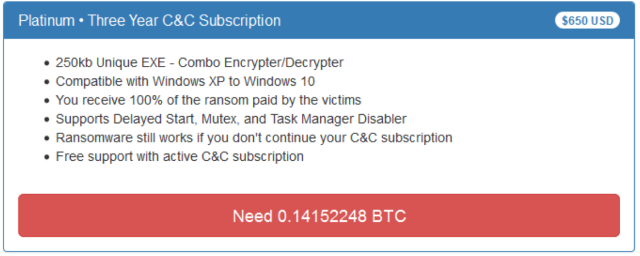

SophosLabs first spotted this one in July 2017. It was announced on the Dark Web and, like the others, allows customers to customize their attack. Packages are pre-compiled with a Bitcoin address and an email address that the customer provides, and the developer promises not to take a cut of the profits:

Customers can choose from five different packages, from a “Plastic” one-month command-and-control subscription to a “Bronze” three-month subscription, and so on:

Defensive measures

For now, the best way for companies and individuals to combat the problem is to follow these defensive measures against ransomware in general:

- Back up regularly and keep a recent backup copy off-site. There are dozens of ways other than ransomware that files can suddenly vanish, such as fire, flood, theft, a dropped laptop or even an accidental delete. Encrypt your backup and you won’t have to worry about the backup device falling into the wrong hands.

- Don’t enable macros in document attachments received via email. Microsoft deliberately turned off auto-execution of macros by default many years ago as a security measure. A lot of malware infections rely on persuading you to turn macros back on, so don’t do it!

- Be cautious about unsolicited attachments. The crooks are relying on the dilemma that you shouldn’t open a document until you are sure it’s one you want, but you can’t tell if it’s one you want until you open it. If in doubt, leave it out.

- Patch early, patch often. Malware that doesn’t come in via document macros often relies on security bugs in popular applications, including Office, your browser, Flash and more. The sooner you patch, the fewer open holes remain for the crooks to exploit. In particular, make sure you are running the latest versions of your PDF reader and Word.

- Use Sophos Intercept X if you are looking to protect an organization. Intercept X stops ransomware in its tracks by blocking the unauthorized encryption of files.

- Try Sophos Home for Windows and Mac to help protect your family and friends. It’s free!


Article source: http://feedproxy.google.com/~r/nakedsecurity/~3/mqN18B4sxj0/