Brit MP Dorries: I gave my staff the, um, green light to use my login

UK MP Nadine Dorries revealed yesterday that she shares her parliamentary login details with her staff, in an attempt to defend fellow Conservative MP Damian Green against recently resurfaced allegations about pornography allegedly found on his office computer.

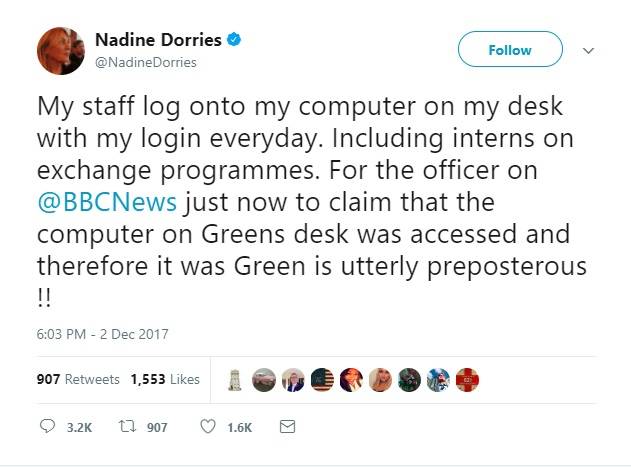

Tweeting on Saturday, Dorries disputed the assertion that only Green could have accessed the computer, saying that she gives out her password to her staff so that they can access her email account.

As is inevitable on Twitter, horrified replies soon appeared, pointing out that sharing your login details with four to six staff members is a bad idea. She then caused additional alarm by saying that she herself struggled to remember her password.

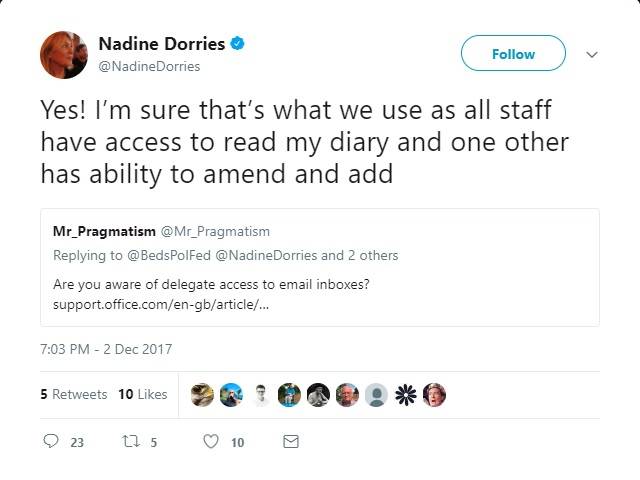

At one point Dorries also tweeted that her staff had delegate-level access to her account, which led users to ask why she would need to give out her details in the first place.

The story dates back to a 2008 police raid on Green's office, carried out to investigate the suspected leaking of government documents to Green, then the shadow immigration minister.

The story resurfaced last week after the BBC interviewed former detective Neil Lewis, who said he was certain that Green had viewed the X-rated images because Green's personal account and documents were in use on the office computer at the times the material was accessed. Green denies the allegations.

Infosec experts were quick to criticise Dorries’ approach, which is all the more questionable just months after a targeted attack against computers at Westminster.

“@NadineDorries thinks that she’s unimportant, and not a target. That’s naive in itself, but reckless when you consider the PII and confidential communications of others,” said anti-virus industry veteran Graham Cluley.

Infosec veteran Quentyn Taylor added: "Given Parliament is using Office 365 there is no excuse for @NadineDorries sharing her credentials. Delegation rules are there for a reason and probably easier than sharing passwords."

Thom Langford, an experienced CSO and sometime security blogger and conference speaker, said: "The practice of sharing logins is madness and should be reviewed immediately. The integrity of parliamentary data is at stake as well as non-repudiation of any data/messages sent."

Even more to the point, Dorries' stated practices are contrary to guidance in the House of Commons staff handbook, which clearly advises that MPs should not share their passwords.

This criticism was dismissed by Dorries over the weekend as “trolling” by computer nerds. Another Tory, Nick Boles MP, admitted that he had to ask his staff for password reminders.

Dorries, a former inmate of the I'm a Celebrity... Get Me Out of Here! jungle, then doubled down by suggesting that the work PCs of all MPs are riddled with the stuff.

That's quite a claim, but infosec experts are at least ready to back up the suggestion that lax IT security among MPs is not a party-political issue. "I think this is an all party issue, probably happens across Parliament," said Langford.

The frustrating thing for those versed in infosec is that there are plenty of simple technologies to ease password hassles (which are a genuine problem), including password managers, two-factor authentication and more. "I'd like to see proximity-card-assisted login on MP accounts and computers. You walk away, you're out. Make the card part of their ID card so they are less likely to 'loan' it," said Rik Ferguson of Trend Micro.

The Information Commissioner's Office (ICO) commented on Twitter: "We're aware of reports that MPs share logins and passwords and are making enquiries of the relevant parliamentary authorities. We would remind MPs and others of their obligations under the Data Protection Act to keep personal data secure." ®

Article source: http://go.theregister.com/feed/www.theregister.co.uk/2017/12/04/dorries_i_give_my_staff_my_login_details/