New BankBot Version Avoids Detection in Google Play — Again

BankBot’s newest version ducked detection in Google Play by downloading its payload from an external source, according to a report by the researchers at Avast, SfyLabs, and ESET who made the discovery.

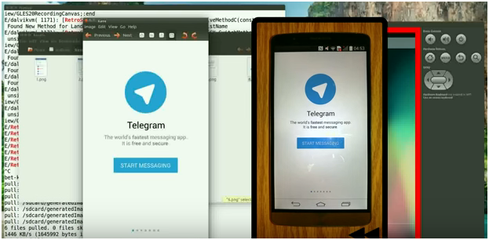

Once installed, BankBot waits for a user to launch a legitimate banking app on his or her device and then overlays a copycat version of the app. It not only steals users’ bank credentials as they log in to the fake app, but also intercepts victims’ text messages, including mobile transaction authentication numbers (TANs), says Lukas Stefanko, a malware researcher at ESET.

“It will allow them to carry out bank transfers on a user’s behalf,” warns Stefanko. Banks often rely on text messages as a form of two-factor authentication.

Attack Path

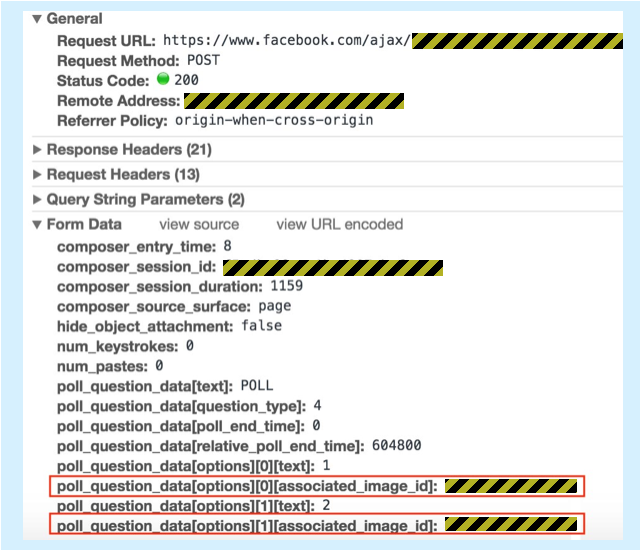

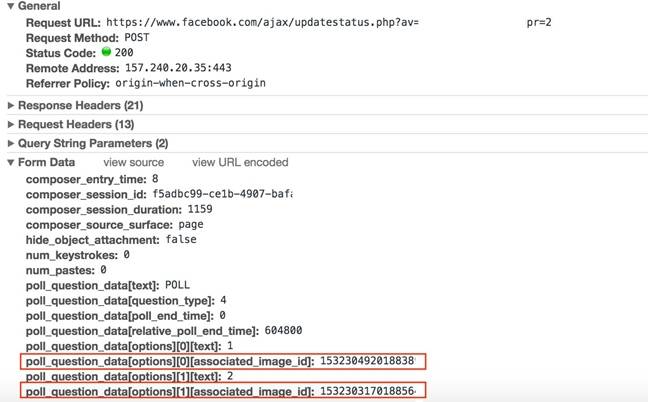

The authors of the latest BankBot version managed to get past Google Play’s security vetting process by submitting a bogus app without the actual payload packed within the app, Stefanko explains.

The victim downloads a Trojanized flashlight app, which does include working flashlight functionality, and the malicious payload is then downloaded from a malicious link in the background.

The malicious payload waits two hours after it is dropped before asking the victim to install it, says Stefanko. Installation gives the cybercriminal administrator rights to the app.

After the user launches one of the targeted financial apps, such as Wells Fargo, Chase, or any of the other institutions on BankBot’s hit list, a fake overlay that mimics the original screen is placed on top of the legitimate app, says Nikolaos Chrysaidos, head of mobile threat intelligence and security at Avast. He adds that more advanced users may be able to detect the bogus overlay, given that it is not identical to the original banking app interface, but other users may not notice the difference.

BankBot’s flexibility in the payload it delivers makes it unique, the security researchers say.

“Using the same payload delivery mechanism, the actors could drop whatever malware, spyware, banker [Trojans] they want into the device,” Chrysaidos warns. “CISOs should at least be proactive and use an AV solution on the Android devices of their company devices.”

The security researchers suspect BankBot’s authors are based in Ukraine, Belarus, and Russia, because the malware shows no activity in those countries. They believe the actors are avoiding local targets to keep a low profile with local law enforcement authorities, the report states.

Google was notified of the latest BankBot version on Nov. 17, and the Internet giant removed it from Google Play the same day, Chrysaidos says. To date, all of the reported BankBot variants have been removed from Google Play, but the actors still appear to be active, so they are likely to try again to upload newer versions of BankBot, the researchers note.

Old vs. New

ESET initially discovered BankBot at the start of this year, and another version emerged in September, Stefanko says.

The droppers in the September version were considered far more sophisticated than those in this newest version, the report states. Their malicious payloads could use Google’s Accessibility Service to enable the installation of apps from unknown sources. But in the fall, Google restricted use of this Accessibility Service feature to apps designed to serve blind users.

“Bad actors removing this functionality could make their malware a bit more stealthy from discovery, as something that uses the Accessibility Service could be very quickly detected as suspicious,” Chrysaidos says. “On the other hand, it makes the malware less powerful.”

Dawn Kawamoto is an Associate Editor for Dark Reading, where she covers cybersecurity news and trends. She is an award-winning journalist who has written and edited technology, management, leadership, career, finance, and innovation stories for such publications as CNET’s …