Say goodbye to the entry-level security operations center (SOC) analyst as we know it.

It’s one of the least glamorous and most tedious information security gigs: sitting all day in front of a computer screen, manually clicking through the thousands of raw alerts generated by firewalls, IDS/IPS, SIEM, and endpoint protection tools, and either ignoring or escalating them. There’s also the constant, gnawing fear of mistakenly dismissing that one alert tied to an actual attack.

But the job of the so-called Tier 1 or Level 1 SOC analyst is on track for extinction. A combination of emerging technologies, alert overload, and fallout from the cybersecurity talent shortage is gradually squeezing out the entry-level SOC position.

Technology breakthroughs like security automation, analytics, and orchestration, along with a wave of SOC outsourcing service options, will ultimately transform the traditionally manual front-line role into a more automated and streamlined one.

That doesn’t mean the Tier 1 SOC analyst, who makes anywhere from $40,000 to $70,000 a year and whose job responsibilities can in some cases include running vulnerability scans and configuring security monitoring tools, will become obsolete. Rather, the job description as we know it today will.

“The [existing] role is going away,” says Forrester principal analyst Jeff Pollard, of the SOC Tier 1 analyst job. “It will exist in a different form.”

Gone will be the mostly manual and mechanical process of the Tier 1 SOC analyst, an inefficient and error-prone method to triage increasingly massive volumes of alerts and threats flooding organizations today. Waiting for and clicking on alerts, using a scripted process, and then forwarding possible threats to a Tier 2 analyst to confirm them and gather further data just isn’t a sustainable model, experts say.

“I’ve never been a fan of the term ‘Tier 1 SOC analyst.’ The term itself is a symptom of a larger problem,” says Justin Bajko, co-founder and a vice president of new SOC-as-a-service startup Expel and the former head of Mandiant’s CERT. “There’s a lot of manual crank-turning, and I’m [the analyst] awash in a sea of alerts. My ability to do real analysis and add value to the business with clickthrough work … is pretty minimal.

“That’s where we are right now” with the Tier 1 SOC analyst, he says.

Bajko believes this manual role has actually contributed to the cybersecurity talent gap. “It’s not a great use of talent that’s out there,” he notes.

Not surprisingly, the Tier 1 SOC job has a relatively high burnout and turnover rate. Once analysts get enough in-the-trenches experience, they often leave for higher-paying positions elsewhere. Some quit out of boredom and opt for more lucrative and interesting developer opportunities.

Meanwhile, large organizations are scrambling to keep their SOC seats filled even as they begin rolling out technologies such as orchestration and automation to streamline operations.

“The majority of a Tier 1 SOC analyst’s job is just getting through the noise as best you can looking for a signal,” says Bajko. “It starts feeling like a losing battle with a bunch of raw and uncurated alerts” to go through, and sometimes multiple consoles that aren’t integrated, he says.

With the use of analytics, orchestration, and automation technologies as well as new SOC services that perform much of the triaging of alerts before they reach the analyst’s screen, the Tier 1 analyst can become more of an actual analyst, according to Bajko. “Instead of a sea of alerts, they can spend time being thoughtful about things they are looking at and make better decisions and apply more context.”

Greg Martin, founder of startup JASK, which offers an artificial intelligence-based SOC platform, says Tier 1 analysts are basically the data-entry job of cybersecurity. “We created it out of necessity because we had no other way to do it,” he says. But he envisions them ultimately taking on more specialized tasks, such as assisting in investigations using intel they gather from an incident.

The Tier 1 SOC analyst will become more like the Tier 2 analyst, who actually analyzes an alert flagged by a Tier 1 and decides whether it should get escalated to the highly skilled Tier 3 SOC analyst for a deeper inspection and possible incident response or forensics investigation. Tier 2 analysts, who often kick off the official incident response process, also would get more responsibility in that scenario, and Tier 3 could spend more time on proactive and advanced tasks such as threat hunting, or rooting out potential threats.

“So Tier 1 would be able to figure out if [an alert is] real and Tier 2 would make decisions like we should isolate that machine,” for example, Forrester’s Pollard says. “Tier 1 won’t go away; it must move up to more advanced tasks.”

Today’s Tier 1 analyst drowning in alerts is at risk of alert fatigue. That could result in a real security incident getting missed altogether if it’s misidentified as a false positive (think Target’s mega-breach). “My big worry in the SOC is a Tier 1 analyst is under pressure to get through as many alerts as they can, and they make some bad decisions,” says Expel’s Bajko, who has built and managed several SOCs during his career. “I’m much more worried about calling a thing a false positive” when it’s not, he says.

Aggies in the SOC

Some SOC managers are already re-architecting their teams and incorporating new technologies. Take Dan Basile, executive director of Texas A&M University’s SOC, which supports the 11 universities under the A&M system as well as a half-dozen state government agencies on its network. Basile had to create a whole new level of SOC analyst to staff up: he calls it the “Tier .5” SOC analyst.

“We initially have Tier 1s, 2s, etc. But we have had a hard time even hiring full-time employees, much less hanging onto them for more than a year. We fully expect them to leave and go to industry and make three times what” a university can pay, Basile says.

So Basile got creative. The Texas A&M SOC partnered with several groups on campus to identify undergraduate students who might be a good fit for part-time SOC positions. The student Tier .5 SOC analysts work closely with Tier 1 SOC analysts, who oversee and perform back-checks on the students’ alert-vetting decisions. The students look at the alerts and then grab external information to put context around the alert. “They pivot and hand it up to a higher grade student or an official Tier 1 employee,” Basile says. “They’re doing that first false-positive removal.”

The Texas A&M Tier 1 SOC analyst then verifies the Tier .5’s work. “They send it on up if it’s okay,” he says.

Hiring undergrads helps fill open slots in more remote campus locations, for example, he says. There are some 250,000 users on the university’s massive network at any time, so there are a lot of moving parts to track. “Due to the location of some of these universities [in the A&M system], it’s just hard as heck to hire anyone in cybersecurity right now.”

Texas A&M recently added an artificial intelligence-based tool from Vectra to the SOC to help cut the time it took to vet alerts, a process that often took hours to reach the action phase. The AI technology also provides context for alerts, and triaging them now takes only 15 to 20 minutes, Basile says.

The Tier 1 SOC analysts at Texas A&M are viewing results from the AI-driven tools, next-generation endpoint, and SIEM tools, he says. “They’re doing that first rundown: Is this really bad? Do I need to escalate it? Is this garbage? Or do I need to scream at the top of my lungs because it’s that bad?”

Basile says even with newer technologies that streamline the process, you still need person power. “I don’t see people moving away because of AI,” he says. You need people to verify and dig deeper on the intel the tools are generating. “AI is just providing you more information,” he says. “You will always need someone sitting there behind the screen and saying yes or no.”

It’s not about automating the SOC itself. “I don’t think you’ll ever automate away the job of SOC analysts. You need humans to do critical thinking,” Expel’s Bajko says.

Meantime, it’s still more difficult to fill the higher-level, more skilled Level 2 and 3 SOC analyst positions. “I’ve been looking for a good forensics person for a year now. I don’t even have the job posted anymore” after being unable to fill it, Texas A&M’s Basile says. The result: the university’s Tier 3 analysts have a heavier workload, he notes.

Meanwhile, the student SOC staffers get to acquire deeper technical experience. “Now they can dig into packet capture,” for instance, he says. “This gives entry-level people the opportunity to learn, and to find more bad things.”

That’s good news for entry-level security talent. While SOC Tier 1 jobs today are relatively low-tech, the positions often call for a few years’ experience in security, including analysis of security alerts from various security tools. Such qualification requirements make it even harder for SOC managers to fill the slots since most newcomers to security just don’t have the hands-on experience.

SOCs Without Tiers

Not all SOCs operate in tiers or levels of analysts. Mischel Kwon, co-founder of MKACyber and former director of the US-CERT, says she doesn’t believe in designating SOC analysts by level. “I don’t see my SOC in tiers, and a lot of people are not looking at tiers anymore,” says Kwon, whose company offers SOC managed services and consulting.

Placing analysts in tiers (1, 2, and the most advanced, 3) only made the job tedious for lower-level analysts, she notes. “It puts the more junior people into boring and pigeonholed activity. We really find that that exacerbates the turnover problem.”

Kwon says a SOC analyst should understand all things SOC. Her firm “pools” SOC analysts into groups, she says, rather than tiers. Pooling is not new, though: “It’s been in sophisticated SOCs for at least [the past] 10 years,” she says.

MKACyber’s SOC strategy is similar to Texas A&M’s: pair up the junior analysts with more senior ones so they can learn skills from them. “No one wants to be Tier 1 and it’s hard to be Tier 3. But if you put them into pools working together, the junior [analysts] become midlevel very quickly, versus in a very stovepiped SOC,” Kwon says.

See Dan Basile, executive director of Texas A&M University’s SOC, present Maximizing the Productivity and Value of Your IT Security Team at this month’s INSecurity conference.

Kelly Jackson Higgins is Executive Editor at DarkReading.com. She is an award-winning veteran technology and business journalist with more than two decades of experience in reporting and editing for various publications, including Network Computing, Secure Enterprise …

Article source: https://www.darkreading.com/analytics/death-of-the-tier-1-soc-analyst/d/d-id/1330446?_mc=rss_x_drr_edt_aud_dr_x_x-rss-simple

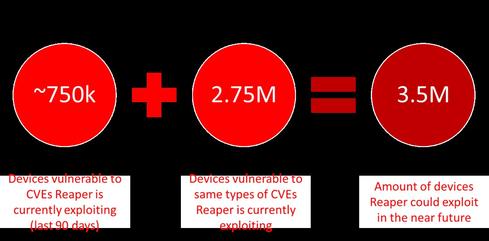

giant blinking red light in our faces every day warning us that we’d better figure out how to secure IoT.

giant blinking red light in our faces every day warning us that we’d better figure out how to secure IoT.