Shock! Hackers for medieval caliphate are terrible coders

DerbyCon: An analysis of the hacking groups allying themselves with Daesh/ISIS has shown that, about 18 months ago, the religious fanatics stopped trying to develop their own secure communications and hacking tools and instead turned to the criminal underground to find software that actually works.

Kyle Wilhoit, a senior security researcher at DomainTools, told the DerbyCon hacking conference in Kentucky that while numerous hacking groups with different aims have consolidated under the banner of the United Cyber Caliphate (UCC), their coding skills and opsec are “garbage.”

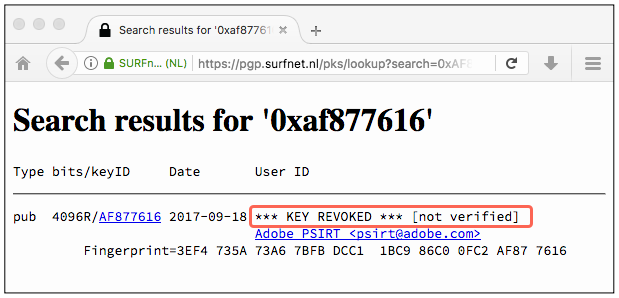

A few years ago the UCC created three apps for its followers: some script-kiddie-level malware riddled with bugs, a version of PGP called Mujahideen Secrets that the NSA just loves (for all the wrong reasons), and a DDoS tool called “Caliphate cannon” that was laughably poor.

“ISIS is really really bad at the development of encryption software and malware,” Wilhoit said. “The apps are sh*t to be honest, they have several vulnerabilities in each system that renders them useless.”

Wilhoit said the Daesh-bags have therefore started communicating via mainstream systems such as Telegram and via Russian email services popular with online criminals. Even so, their lousy security is getting members killed.

He recounted how he’d found an open server online containing photographs of active ISIS military operations in Iraq and Syria, intended for propaganda use. However, the uploaders had left all the metadata in the photographs, making those involved easy targets. Little wonder four of the groups’ IT leaders have been killed by drone strikes in the last two years.

Many become one

Wilhoit also used his DerbyCon presentation to detail the formation of at least four specialised Islamic hacking groups. One of them, the Caliphate Cyber Army, formed about four years ago and concentrated on defacing websites.

The Islamic State Hacking Division concentrates on trying to get into government databases in the US, UK and Australia so that it can compile and publish kill lists of targets. To date there is no evidence that the group has succeeded, which Wilhoit put down to its negligible technical skills.

The Islamic Cyber Army focuses on researching basic information about power grids, with a sideline in defacing websites. There’s no evidence they have actually managed to break into a power company; instead, Wilhoit said, they share basic information about such systems online.

The Sons of the Caliphate Army, another online group, caused a brief stir when they claimed to be planning to kill Mark Zuckerberg. Obviously they have yet to succeed, and they now work under the UCC banner.

One unifying theme of these groups’ work is their stunning ineptitude and lack of success. They will deface a website few people visit and claim a victory, or try to launch a DDoS attack using a couple of dozen infected PCs.

The terrorists are also fond of using social networks to recruit members. Wilhoit said Facebook takes such pages down within 12 hours and Twitter pulls accounts before the number of followers reaches triple figures.

Even attempts to use the internet for fundraising have been problematic, he said. While some of the groups mentioned above have solicited Bitcoin donations to help them buy weapons, scammers have copied the tactic, complete with Islamic State styling, diluting the share of donations that actually reaches the extremists.

“If UCC gets more savvy individuals to join then a true online terrorist incident could occur,” Wilhoit concluded. “But as it stands ISIS are not hugely operationally capable online. As it is right now we should be concerned, of course, but within reason.” ®

Article source: http://go.theregister.com/feed/www.theregister.co.uk/2017/09/25/extremist_hackers_dubious_competence/