Crypto-busters reverse nearly 320 MEELLION hashed passwords

The anonymous CynoSure Prime “cracktivists” who two years ago reversed the hashes of 11 million leaked Ashley Madison passwords have done it again, this time untangling a stunning 320 million hashes dumped by Australian researcher Troy Hunt.

CynoSure Prime’s previous work pales in comparison with what’s described in last week’s post.

Hunt, of HaveIBeenPwned fame, released the hashed passwords in the hope of persuading people who persist in re-using passwords to stop, by letting websites look up and reject common passwords. The challenge was taken up by the group of researchers who go by CynoSure Prime, along with German IT security PhD student @m33x and infoseccer Royce Williams (@tychotithonus).
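
For readers wondering what such a lookup involves, here’s a minimal Python sketch. It assumes the blocklist is a text file of uppercase SHA-1 hex digests, each optionally followed by `:count`; that format and the function names are illustrative assumptions, not HaveIBeenPwned’s actual API.

```python
import hashlib


def sha1_hex_upper(password: str) -> str:
    """Return the uppercase hex SHA-1 digest of a UTF-8 encoded password."""
    return hashlib.sha1(password.encode("utf-8")).hexdigest().upper()


def load_blocklist(lines):
    """Build a set of hashes from lines shaped like 'HASH' or 'HASH:COUNT'.

    The line format is an assumption made for this illustration.
    """
    hashes = set()
    for line in lines:
        line = line.strip()
        if not line:
            continue  # skip blank lines
        hashes.add(line.split(":", 1)[0].upper())
    return hashes


def is_pwned(password: str, blocklist: set) -> bool:
    """True if the password's SHA-1 digest appears in the blocklist."""
    return sha1_hex_upper(password) in blocklist


# "password" hashes to 5BAA61E4C9B93F3F0682250B6CF8331B7EE68FD8 under SHA-1.
blocklist = load_blocklist(["5BAA61E4C9B93F3F0682250B6CF8331B7EE68FD8:3"])
print(is_pwned("password", blocklist))  # True
```

A set keeps each check fast however long the list gets; a list of hundreds of millions of hashes would need more memory-conscious storage in practice.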

The password databases Hunt mined for his release were sourced from many different leaks, so it’s not surprising that many hashing algorithms (15 in all) turned up in it, though most of the hashes were SHA-1. That algorithm was handed its death notice some time ago, and its continued use became untenable in February this year when boffins demonstrated a practical SHA-1 collision.

The other problem is speed. Hashing is used to protect passwords because it is supposed to be irreversible: p455w0rd gets hashed to b4341ce88a4943631b9573d9e0e5b28991de945d, the hash gets stored in the database, and it’s supposed to be impossible to get the password from the hash. But SHA-1 is fast and, in lists like these, unsalted, so attackers can simply hash enormous numbers of guesses and compare the results.
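
The hash-and-compare idea is easy to see in a few lines of Python. This is a minimal sketch using the standard library’s `hashlib` and `hmac`; the function names are hypothetical, and it deliberately uses plain, unsalted SHA-1 to mirror the article, not to recommend it.

```python
import hashlib
import hmac


def sha1_hex(password: str) -> str:
    """SHA-1 maps any input to a fixed 160-bit (40 hex character) digest."""
    return hashlib.sha1(password.encode("utf-8")).hexdigest()


# What a site would store instead of the password itself.
stored = sha1_hex("p455w0rd")
print(stored, len(stored))


def check_login(attempt: str, stored_hash: str) -> bool:
    """Re-hash the login attempt and compare digests in constant time."""
    return hmac.compare_digest(sha1_hex(attempt), stored_hash)


print(check_login("p455w0rd", stored))  # True
print(check_login("P455w0rd", stored))  # False: one changed character, new digest
```

Because the same input always yields the same digest, anyone holding the hash can test guesses the same way the login check does. Real systems should use a slow, salted scheme such as bcrypt, scrypt or Argon2 instead.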

The 15 different hash types in use were identified with the MDXfind tool.
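
MDXfind’s internals aren’t covered here, but the general idea of telling hash types apart can be sketched: given a plaintext you’ve recovered, try a handful of candidate recipes and see which one reproduces the stored value. The recipe list below (including nested ones such as SHA-1 of an MD5 hex string) is a hypothetical illustration, not MDXfind’s algorithm or the 15 types CynoSure Prime found.

```python
import hashlib

# Hypothetical candidate recipes: each is a chain of hashlib algorithm names,
# applied in order, with each step's hex output fed into the next step.
CANDIDATE_CHAINS = [
    ("sha1",),
    ("md5", "sha1"),
    ("sha1", "sha1"),
    ("sha256", "sha1"),
]


def apply_chain(plaintext: str, chain) -> str:
    """Hash the plaintext through every algorithm in the chain."""
    value = plaintext
    for name in chain:
        value = hashlib.new(name, value.encode("utf-8")).hexdigest()
    return value


def identify_chain(plaintext: str, target_hex: str):
    """Return the first candidate chain that turns plaintext into target_hex."""
    target = target_hex.lower()
    for chain in CANDIDATE_CHAINS:
        if apply_chain(plaintext, chain) == target:
            return chain
    return None  # none of the candidate recipes matched


sample = apply_chain("hunter2", ("md5", "sha1"))
print(identify_chain("hunter2", sample))  # ('md5', 'sha1')
```

A real tool has to do this at scale without knowing the plaintext in advance, which is why it pairs recipe guessing with large candidate wordlists.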

Along the way, the post examines Hunt’s methodology and notes that some of the hashes cover more than just passwords (for example, email:password combinations and other personally identifiable information, which CynoSure Prime says Hunt didn’t intend to release).

Hunt told The Register the CynoSure Prime people did some “pretty neat” work, and that they’ve been cooperative.

He agreed that the leaked data carried “a bit of junk” because the original owners made parsing mistakes, so the leaked user lists include names where only passwords are expected.

While some of this landed in his release, Hunt said, those data sets are in “two files that anyone could download with a few minutes’ searching”. He’s working with CynoSure Prime’s data to purge it from the hashed lists hosted at HaveIBeenPwned.
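
Purging that kind of junk boils down to spotting cracked plaintexts that look like personal data and dropping their hashes. A rough Python sketch follows; the email regex, the `purge` helper and the data layout (a dict mapping each hash to its recovered plaintext) are all assumptions for illustration, not Hunt’s or CynoSure Prime’s actual process.

```python
import re

# Loose, illustrative pattern for an email address; real validation is harder.
EMAIL_RE = re.compile(r"[^\s@:]+@[^\s@:]+\.[A-Za-z]{2,}")


def looks_like_pii(plaintext: str) -> bool:
    """Flag plaintexts containing something shaped like an email address."""
    return EMAIL_RE.search(plaintext) is not None


def purge(hash_list, recovered):
    """Drop hashes whose recovered plaintext looks like personal data.

    hash_list: iterable of hash strings, in their published order.
    recovered: dict mapping hash -> cracked plaintext (hypothetical layout).
    """
    flagged = {h for h, p in recovered.items() if looks_like_pii(p)}
    return [h for h in hash_list if h not in flagged]


recovered = {"HASH_A": "alice@example.com:hunter2", "HASH_B": "password"}
print(purge(["HASH_A", "HASH_B", "HASH_C"], recovered))  # ['HASH_B', 'HASH_C']
```

Hashes that were never cracked (`HASH_C` here) are kept, since there is no plaintext to judge them by.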

When it comes to reversing the hashes, the post illustrates just how good the available tools have become: running MDXfind and Hashcat on a quad-core Intel Core i7-6700K system, with four GeForce GTX 1080 GPUs and 64GB of memory, the researchers “recover all but 116 of the SHA-1 hashes”.
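
Tools like these don’t run SHA-1 backwards; they hash vast numbers of guesses and look for matches (Hashcat, for instance, does this on GPUs). The toy Python sketch below shows the same guess-and-compare principle with a wordlist plus a few mangling rules; the rules and function names are hypothetical and vastly simpler than anything the researchers used.

```python
import hashlib
from typing import Dict, Iterable, Iterator

# Illustrative "leetspeak" rule: a->4, o->0, s->5.
LEET = str.maketrans("aos", "405")


def mangle(words: Iterable[str]) -> Iterator[str]:
    """Yield each word plus a few common variants (hypothetical rule set)."""
    for w in words:
        yield w
        yield w.capitalize()
        yield w + "1"
        yield w.translate(LEET)  # e.g. 'password' -> 'p455w0rd'


def crack_sha1(targets: Iterable[str], candidates: Iterable[str]) -> Dict[str, str]:
    """Map each target SHA-1 hex digest that some candidate produces to that candidate."""
    remaining = {t.lower() for t in targets}
    found: Dict[str, str] = {}
    for guess in candidates:
        digest = hashlib.sha1(guess.encode("utf-8")).hexdigest()
        if digest in remaining:
            found[digest] = guess
            remaining.discard(digest)
            if not remaining:  # stop once every target is recovered
                break
    return found


target = hashlib.sha1(b"p455w0rd").hexdigest()
print(crack_sha1([target], mangle(["letmein", "password"])))
```

The key design choice is hashing each guess once and checking it against a set of all targets: with hundreds of millions of hashes, the cost per guess stays roughly constant instead of growing with the list.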

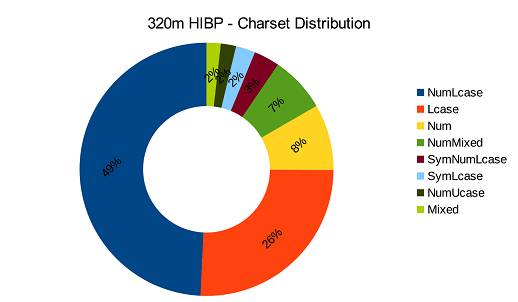

With the passwords reversed, here’s the distribution of character sets found by CynoSure Prime.
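
Character-set and length breakdowns like this are straightforward to compute once plaintexts are recovered. A minimal Python sketch, assuming the cracked passwords sit in a list of strings; the class labels (`lower`, `upper`, `digit`, `other`) are illustrative and may not match CynoSure Prime’s categories.

```python
import string
from collections import Counter
from typing import Iterable, Tuple

ALNUM = set(string.ascii_letters + string.digits)


def charset_class(pw: str) -> str:
    """Label a password by the character classes it uses, e.g. 'lower+digit'."""
    classes = []
    if any(c in string.ascii_lowercase for c in pw):
        classes.append("lower")
    if any(c in string.ascii_uppercase for c in pw):
        classes.append("upper")
    if any(c in string.digits for c in pw):
        classes.append("digit")
    if any(c not in ALNUM for c in pw):  # symbols, spaces, non-ASCII
        classes.append("other")
    return "+".join(classes) or "empty"


def summarize(passwords: Iterable[str]) -> Tuple[Counter, Counter]:
    """Count passwords per character-set class and per length."""
    pws = list(passwords)
    return Counter(charset_class(p) for p in pws), Counter(len(p) for p in pws)


def share_in_range(lengths: Counter, lo: int, hi: int) -> float:
    """Fraction of passwords whose length falls between lo and hi inclusive."""
    total = sum(lengths.values())
    in_range = sum(n for length, n in lengths.items() if lo <= length <= hi)
    return in_range / total if total else 0.0


charsets, lengths = summarize(["password", "p455w0rd", "Passw0rd!", "123456"])
print(charsets.most_common())
print(share_in_range(lengths, 7, 10))  # 0.75
```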

Most of the passwords in the HaveIBeenPwned release are between seven and 10 characters long. ®

Article source: http://go.theregister.com/feed/www.theregister.co.uk/2017/09/04/cryptobusters_reverse_nearly_320_meellion_hashed_passwords/