Advanced, Low-Cost Ransomware Tools on the Rise

Malware developers keep making it easier for even the most broke and technically inept bad guys to jump on the ransomware craze with cheap and user-friendly tools that are bound to fuel plenty more computer blackmail attacks in 2017. The latest evidence of the trend comes from a report out today of a new variant offered up by Russian cybercriminals through a software-as-a-service delivery mechanism that costs criminals only $175 to get started.

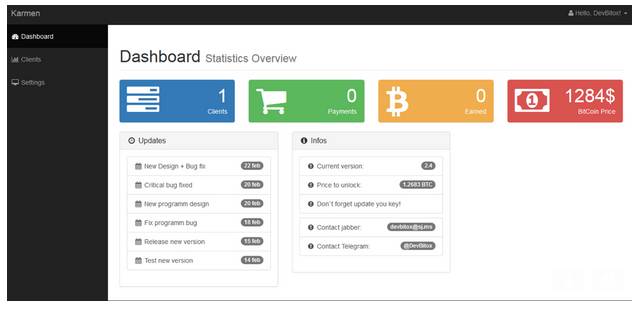

According to researchers with Recorded Future, the Karmen Cryptolocker malware variant is ransomware-as-a-service (RaaS) built on top of the open-source Hidden Tear project. It follows the standard ransomware m.o.: it takes data hostage with AES-256 encryption, accepts Bitcoin payment from victims and automatically decrypts data upon payment. In addition to the low cost of entry, Karmen gives buyers a control panel from which they can manage their infected “clients” (otherwise known as victims in the law-abiding world), tally total money stolen and update the software when updates are available.

RaaS is hardly a new phenomenon. Security researchers have been digging up similar examples for the last two years, ever since McAfee Labs researchers found the Tox malware kit in the wild. But the professional sheen of Karmen shows how ransomware tools continue to evolve as every type of criminal tries to cash in on the ransomware gold rush.

Many technically minded criminals can crank out variations like Karmen for their less geeky brethren thanks to the wide availability of open-source code, such as the Hidden Tear project behind Karmen. For example, just today, Cylance researchers reported a new variant of CrypVault ransomware, which uses the GnuPG open-source encryption tool to encrypt files.

“Unlike common ransomware, CrypVault is simply written using Windows scripting languages such as DOS batch commands, JavaScript and VBScript,” writes Rommel Ramos of Cylance. “Because of this, it is very easy to modify the code to create other variants of it. Any potential cybercriminals with average scripting knowledge should be able to create their own version of this to make money.”

For its part, the Hidden Tear project from which Karmen was derived was originally developed as ‘educational’ ransomware. But it took on a life of its own once the bad guys got their hands on it several years ago. The silver lining for security professionals is that the underlying code contains vulnerabilities, which have made it possible for ransomware researchers like Michael Gillespie to create decryptors for it. He has already taken to Twitter to offer help to anyone affected by Karmen.

Nevertheless, Karmen still shines a light on the dangerous technical evolution of ransomware with some under-the-hood tinkering that Recorded Future researchers say is meant to deter sandbox analysis.

“A notable feature of Karmen is that it automatically deletes its own decryptor if a sandbox environment or analysis software is detected on the victim’s computer,” writes Diana Granger with Recorded Future. Her colleagues told Dark Reading that the feature is meant to keep security tools and researchers from learning too much about the code.

Evasion techniques like this are typical of evolving malware and are showing up in a number of notable malware families. Last month, Trend Micro reported that new variants of Cerber are running anti-sandbox features to evade machine-learning security technology.

“This is a typical game of cat and mouse. Criminals make an innovation in their techniques, so defenders follow suit,” says Travis Smith, senior security research engineer for Tripwire. “Once the criminals’ activities are being slowed by defensive measures, they continue to change their tactics. As far as the seriousness of these evasion techniques, they pose no additional risk to the end-user when it comes to protecting themselves.”

Unfortunately, according to a recent study by SecureWorks, even though 76% of organizations see ransomware as a significant business threat, only 56% have a ransomware response plan. According to Keith Jarvis, senior security researcher at SecureWorks, organizations worried about ransomware need not only to make sure their backup and endpoint protection protocols are firmly in place but also to take a second look at email filtering and patch management.

“We see most ransomware come in through emails and browser exploit kits that rely on poorly patched environments,” he says, pointing to Adobe Flash as a common culprit. “A great first step for email defense would be to block outright the most abused file extensions used by executables and scripts. Next would be to block Word documents that contain macros. If you take these steps, you’re going to block the overwhelming majority of ransomware.”
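Jarvis’s first two email steps can be expressed as a simple mail-gateway rule. The Python sketch below is illustrative only: the extension blocklist is an assumed example, not a list published by SecureWorks, and the function name `flagged_attachments` is hypothetical. It checks top-level attachment filenames only, so it catches macro-enabled Word formats (.docm, .dotm) by extension but cannot detect macros hidden inside legacy .doc files, which would require inspecting file contents.

```python
import email
from email import policy
from email.message import EmailMessage
from pathlib import PurePosixPath

# Assumed, illustrative blocklist of extensions often abused to deliver
# executables and scripts; a real policy would be tuned per organization.
BLOCKED_EXTENSIONS = {
    ".exe", ".scr", ".com", ".pif", ".bat", ".cmd",
    ".js", ".jse", ".vbs", ".vbe", ".wsf", ".hta", ".ps1",
}

# Word formats whose extension alone marks them as macro-enabled.
# Legacy .doc files can also carry macros; this sketch does not catch those.
MACRO_WORD_EXTENSIONS = {".docm", ".dotm"}


def flagged_attachments(raw_message: bytes) -> list[str]:
    """Return filenames of top-level attachments that the rule would block."""
    msg = email.message_from_bytes(raw_message, policy=policy.default)
    flagged = []
    for part in msg.iter_attachments():
        name = part.get_filename() or ""
        # .suffix keeps only the last extension, so "invoice.pdf.exe" -> ".exe".
        suffix = PurePosixPath(name.lower()).suffix
        if suffix in BLOCKED_EXTENSIONS or suffix in MACRO_WORD_EXTENSIONS:
            flagged.append(name)
    return flagged


if __name__ == "__main__":
    demo = EmailMessage()
    demo["Subject"] = "Invoice"
    demo.set_content("Please see attached.")
    demo.add_attachment(b"MZ", maintype="application",
                        subtype="octet-stream", filename="invoice.pdf.exe")
    demo.add_attachment(b"PK", maintype="application",
                        subtype="octet-stream", filename="Report.DOCM")
    demo.add_attachment(b"%PDF", maintype="application",
                        subtype="pdf", filename="terms.pdf")
    print(flagged_attachments(demo.as_bytes()))
```

Matching on the last extension only is deliberate: a double-extension lure such as “invoice.pdf.exe” is judged by the part Windows actually acts on.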


Ericka Chickowski specializes in coverage of information technology and business innovation. She has focused on information security for the better part of a decade and regularly writes about the security industry as a contributor to Dark Reading.

Article source: http://www.darkreading.com/attacks-breaches/advanced-low-cost-ransomware-tools-on-the-rise/d/d-id/1328675?_mc=RSS_DR_EDT