Apple urged to legalize code injection: Let apps do JavaScript hot-fixes

Faced with an existential threat to its hot patching service, Rollout.io is appealing to Apple to extend its app oversight into post-publication injections of JavaScript code.

CTO and cofounder Eyal Keren has penned an open letter to Apple asking the i-obsessed device maker to develop and deploy a “Live Update Service Certificate” as a way to sign JavaScript code so it can be pushed safely to iOS apps for instant content updates.

Apple already reviews iOS apps destined for its App Store, to make sure they conform with its shifting and sometimes vague rules. In so doing, it manages mostly to limit the presence of malicious apps while also enforcing modest minimum standards for quality.

The review process, however, can take anywhere from a few days to a week or more, which turns out to be inconvenient when app developers want to make immediate changes to their code.

Code pushing (or hot patching) frameworks like Rollout and JSPatch emerged to give developers the ability to deploy code without Apple’s involvement, an arrangement Apple until recently has tolerated.

But over the past week, developers using Rollout and JSPatch in their apps have reported receiving warning notices from the company.

While app modifications of this sort can be harmless – replacing interface elements with different designs, for example – they also have the potential to alter previously approved behavior through a technique known as method swizzling, which involves swapping one method’s implementation for another at runtime.
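To make method swizzling concrete, here is a minimal sketch in Python rather than Objective-C. It is only an analogy: the `PaymentScreen` class and the `swizzle` helper are hypothetical names invented for illustration, and nothing here reflects how Rollout or JSPatch actually work internally. It shows how exchanging two implementations changes what already-written calling code does.

```python
class PaymentScreen:
    """Hypothetical class standing in for an app component that passed review."""

    def title(self) -> str:
        return "Checkout"

    def patched_title(self) -> str:
        return "Checkout (patched)"


def swizzle(cls: type, name_a: str, name_b: str) -> None:
    """Exchange the implementations bound to two method names on a class."""
    impl_a = cls.__dict__[name_a]
    impl_b = cls.__dict__[name_b]
    setattr(cls, name_a, impl_b)
    setattr(cls, name_b, impl_a)


screen = PaymentScreen()
before = screen.title()          # original implementation runs

swizzle(PaymentScreen, "title", "patched_title")
after = screen.title()           # same call site, different implementation

print(before, "->", after)
```

The call site `screen.title()` never changes, which is why a swap of this kind would be hard for a reviewer to spot from the originally submitted code alone.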

Apple’s concern appears to be specific to Rollout and JSPatch because the two frameworks can “pass arbitrary parameters to dynamic methods.”

In theory, a developer could exploit this capability to call private APIs or to activate and deactivate a malicious function without detection. Other hot patching frameworks that haven’t elicited a response from Apple, like Expo and CodePush, have more limited capabilities because they don’t have the same access to native Objective-C or Swift methods.

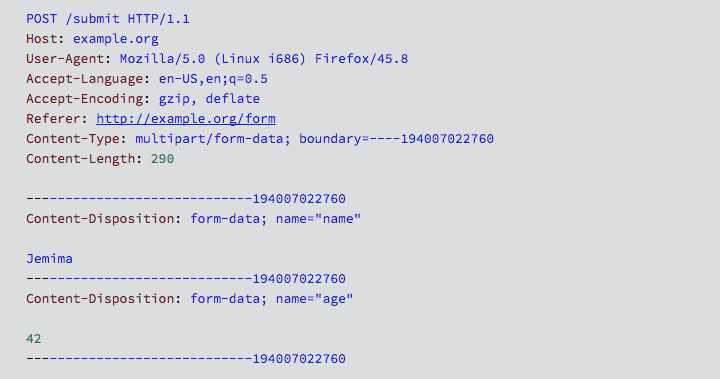

Keren, however, in a phone interview with The Register, said, “Rollout blocked private framework API calls a long time ago.” He said he believes Apple’s main concern has to do with the possibility of a man-in-the-middle attack against the patching system, by which private information could be stolen.

Keren added that he wasn’t aware of any such attack having occurred, but he acknowledged that Apple has a legitimate concern.

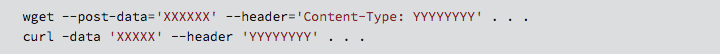

Apple’s desire to limit JavaScript injection may be reasonable, but the barrier it’s putting into place is more of an obstacle to misuse than a guarantee of safety. Any app that communicates with a remote server can be coded to pass private information or perform other actions without Apple’s knowledge or approval.

Still, there are benefits to being able to update apps without seeking permission and waiting for a green light from Apple. Keren suggests Apple offer a service to legalize what has until now been a gray market activity. He would like to see Apple develop a means to issue Live Update Service Certificates, which would be similar to other Apple signing certificates.

“Just as Apple signs .ipa files, which are pushed to the App Store and then downloaded to end user devices, we propose Apple begin to sign Javascript code, which is returned to the developer, who can then push it directly to live devices,” Keren suggested in his post. “The Apple SDK would verify the signature authenticity and only execute verified code.”
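The sign-then-verify flow Keren describes can be roughed out as follows. This is a sketch under heavy assumptions, not the proposed service. A real certificate scheme would use asymmetric signatures, with a private key held by Apple and a public key on the device. Python's standard library has no asymmetric signing, so the example substitutes HMAC-SHA256 with a shared demo key. The names `sign_patch`, `verify_patch`, and `SIGNING_KEY` are hypothetical.

```python
import hashlib
import hmac

# Hypothetical stand-in for signing material; a real scheme would use a
# private/public key pair rather than a shared secret.
SIGNING_KEY = b"demo-key-not-a-real-certificate"


def sign_patch(js_source: str, key: bytes = SIGNING_KEY) -> str:
    """Signer side: produce a signature over the JavaScript patch text."""
    return hmac.new(key, js_source.encode("utf-8"), hashlib.sha256).hexdigest()


def verify_patch(js_source: str, signature: str, key: bytes = SIGNING_KEY) -> bool:
    """Device side: accept the patch only if the signature matches its content."""
    expected = sign_patch(js_source, key)
    return hmac.compare_digest(expected, signature)


patch = "button.color = 'blue';"
signature = sign_patch(patch)

accepted = verify_patch(patch, signature)
# A patch altered in transit no longer matches the signature and is rejected.
tampered = verify_patch(patch + " sendContacts();", signature)

print("original accepted:", accepted)
print("tampered accepted:", tampered)
```

The tampered case corresponds to the man-in-the-middle scenario Keren mentions: code modified between the developer's server and the device fails verification and would not be executed.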

The Register asked Apple to comment, but the company continued its habit of silence.

Keren doesn’t expect an immediate response from Apple. But pointing to the 50 million devices using Rollout and to the popularity of other frameworks that support pushing code changes through JavaScript, he said, “I think there’s a need. Developers are struggling with the ability to continuously improve their apps.” ®

Article source: http://go.theregister.com/feed/www.theregister.co.uk/2017/03/14/apple_urged_to_legalize_code_injection/
