Thanks to Anna Szalay and Xinran Wu of SophosLabs for their behind-the-scenes work on this article.

Last week, SophosLabs showed us a new ransomware sample.

That might not sound particularly newsworthy, given the number of malware variants that show up every day, but this one is more interesting than usual…

…because it’s targeted at Mac users. (No smirking from the Windows tent, please!)

In fact, it was clearly written for the Mac on a Mac by a Mac user, rather than adapted (or ported, to use the jargon term, in the sense of “carried across”) from another operating system.

This ransomware, detected and blocked by Sophos as OSX/Filecode-K and OSX/Filecode-L, was written in the Swift programming language, a relatively recent programming environment that comes from Apple and is primarily aimed at the macOS and iOS platforms.

Swift was released as an open-source project in late 2015 and can now officially be used on Linux as well as on Apple platforms; it can also be run on Windows 10 via Microsoft’s Linux subsystem. Nevertheless, malware programmers who choose Swift for their coding almost certainly have their eyes set firmly on making Mac users into their victims.

The good news is that you aren’t likely to be troubled by the Filecode ransomware, for a number of reasons:

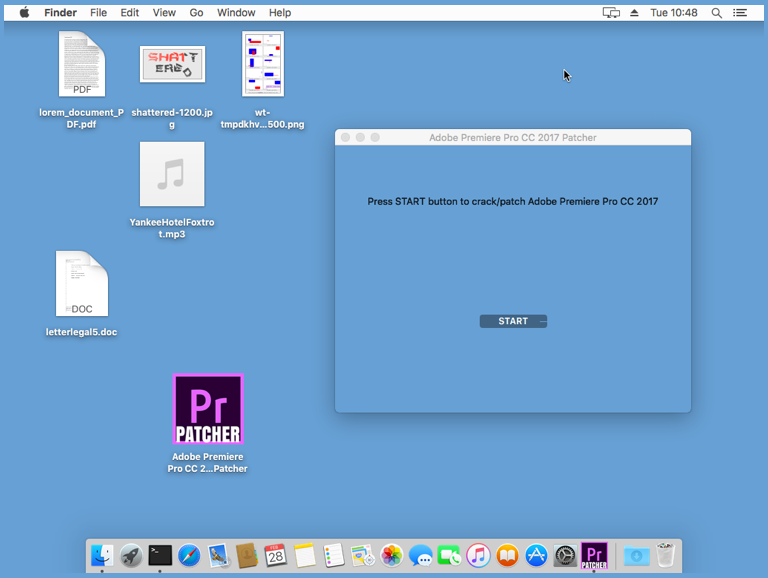

- Filecode apparently showed up because it was planted in various guises on software piracy sites, masquerading as cracking tools for mainstream commercial software products. So far, we’re not aware of Filecode attacks coming from any other quarter, so if you stay away from sites claiming to help you bypass the licensing checks built into commercial software, you should be OK.

- Filecode relies on built-in macOS tools to help it find and scramble your files, but doesn’t utilise these tools reliably. As a result, in our tests, the malware sometimes got stuck after encrypting just a few files.

- Filecode uses an encryption algorithm that can almost certainly be defeated without paying the ransom. As long as you have an original, unencrypted copy of one of the files that ended up scrambled, it’s very likely that you will be able to use one of a number of free tools to “crack” the decryption key and to recover the files for yourself.

Ironically, the fact that you can recover without paying comes as a double relief.

That’s because the crook behind this ransomware failed to keep a copy of the random encryption key chosen for each victim’s computer.

We’ve written about this sort of ransomware before, dubbing it “boneidleware”, because the crooks were so inept or lazy that they didn’t even bother to set up a payment system: they scrambled (or simply deleted) your files, threw away the key, and then asked for money anyway, in the hope that at least some victims would pay up.

CryptoLocker, back in 2013, was the first widespread ransomware. The crooks behind it set up an extortion process that could reliably supply decryption keys to victims who paid the ransom. Word got around that paying up, no matter how much it might hurt to do so, would probably save your data, and that’s what many people did, creating a “seller’s market” for ransomware demands. But recent attacks, where paying up doesn’t do any good, have started to change public opinion. In a neat irony, it looks as though the latest waves of ransomware have turned out to be the strongest anti-ransomware message of all!

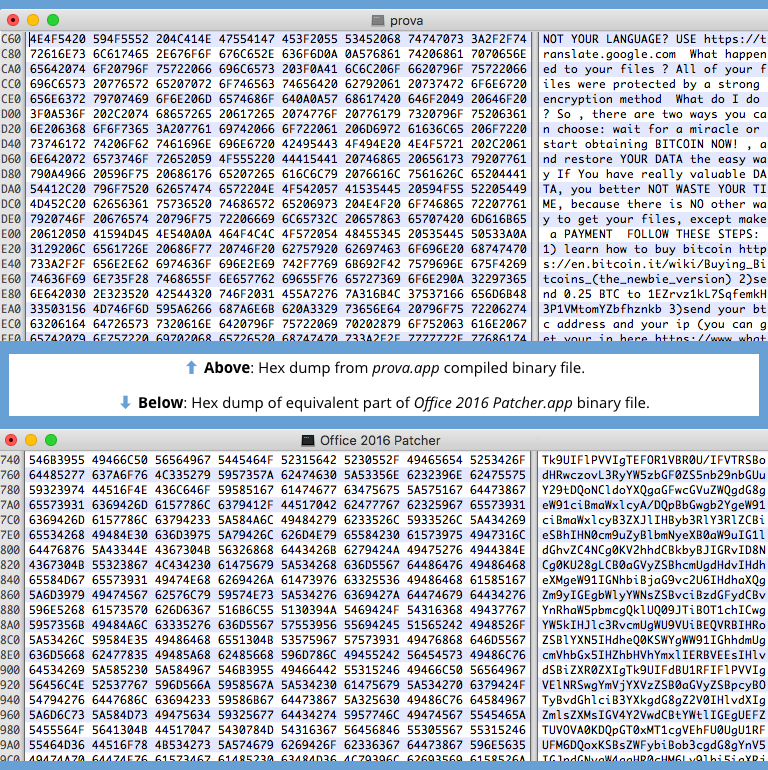

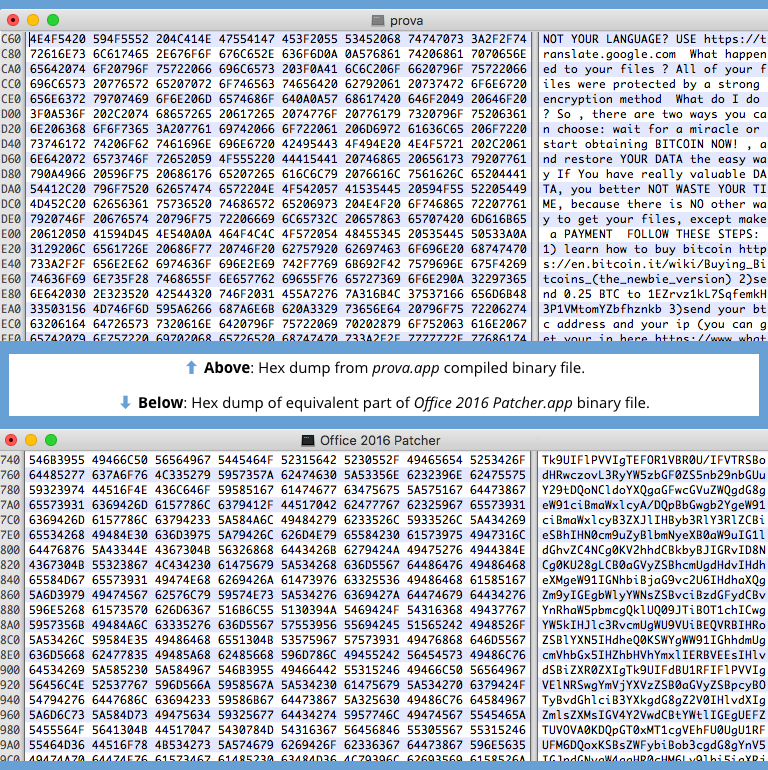

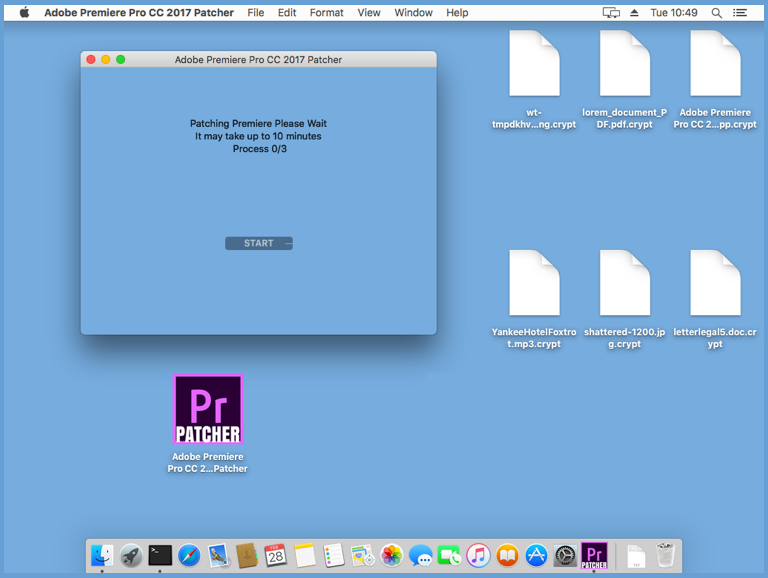

We’ve seen three versions of Filecode: one claims to crack Adobe Premiere, the second to crack Office 2016, and the third, called Prova, seems to be a version that wasn’t supposed to be released.

The word prova means “test” in Italian.

This version only encrypts files in a directory called /Desktop/test, and doesn’t make any effort to hide the giveaway text messages stored inside the program:

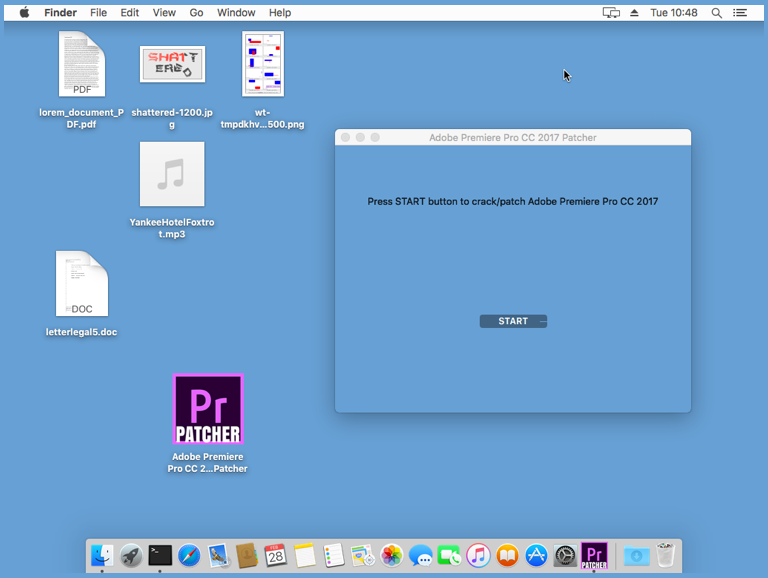

If you run one of the “Patcher” versions of this ransomware, you’ll see a popup window that makes it clear the program is about to get up to no good:

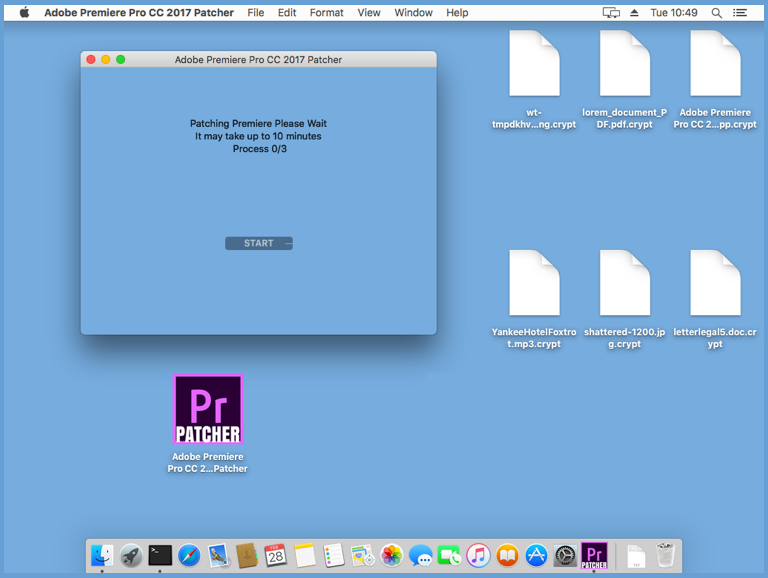

If you click [Start], the process begins under the guise of a fictitious message, which (as shown here) keeps pretending that everything is OK even after the files on the Mac desktop have been encrypted:

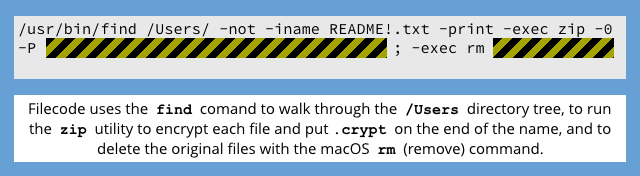

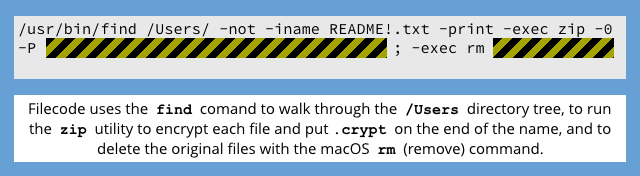

In fact, Filecode goes through all the files it can access in the /Users directory, using the built-in macOS program find to list your files, zip to encrypt them, and rm to delete them. (Files removed using the rm program don’t go into the Trash and so can’t easily be recovered.)

The ZIP password used is a randomly chosen 25-character text string, so although each infected Mac will be scrambled with a different password, all the files in one run of the malware will have the same key. (As we’ll see below, that’s a silver lining in this case.)

Note that the Filecode malware doesn’t need administrator privileges. When you run the ransomware app, you implicitly give it the right to read and write the same set of files that you could read and modify yourself using any other app, such as Word or Photos. Ransomware doesn’t need system-wide access to attack your personal files, and those are the files that are most valuable to you. Because of this, you won’t see any giveaway warnings popping up to say “This app wants to make changes – Enter your password to allow this”. Generally speaking, only apps that need to install components that can be used outside the app itself, such as kernel drivers or browser plugins, will alert you with a password popup. We regularly meet Mac users who still don’t realise this, and who therefore think that looking out for password popups is a necessary and sufficient precaution against malware attack.

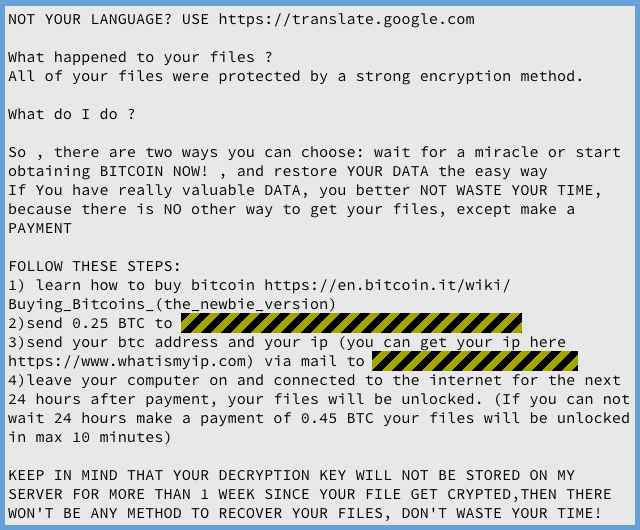

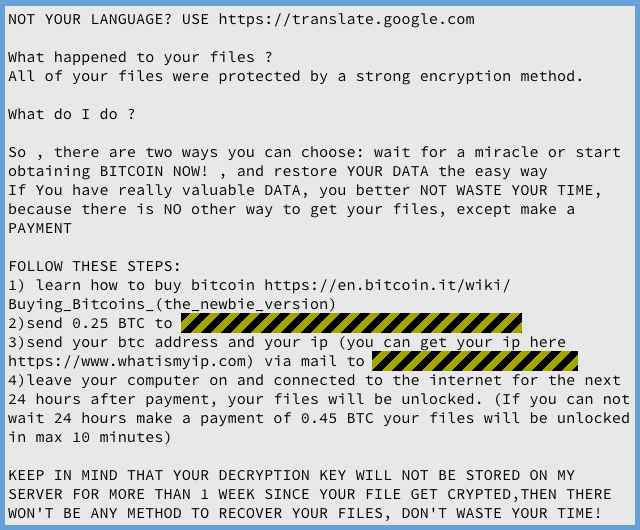

Filecode leaves behind a raft of text files that tell you how to pay 0.25 bitcoins (about $300 on the date we published this) to the crook behind the attack, giving you a Bitcoin address for the money and a temporary email address to make contact.

You’re then supposed to leave your computer connected to the internet so the criminal can access it remotely – instructions on how this part works are not supplied at this stage – and he promises to let himself in and unscramble your files within 24 hours.

Apparently, for BTC 0.45 (about $530) instead of BTC 0.25, you can buy the expedited service and he’ll unlock your files within 10 minutes.

The real problem comes if you don’t have a backup and make the stressful decision to pay up in the hope of making the best of a bad job.

We couldn’t see anywhere in the code where the crook keeps a record of the encryption key that he passes to the ZIP program, either by secretly saving the password locally or uploading it to himself.

In other words, Filecode seems to be yet another example of “boneidleware”, in which the crook either neglects, forgets or isn’t able to create a reliable back-end system to keep track of keys and payments, leaving both you and him with no straightforward recovery process.

Even if you did grant the crook access to your computer to “fix” the very problem he created in the first place, and even if he were able to connect in remotely to have a go, we think that he’d have no better approach than trying to crack the ZIP encryption from scratch.

So, in the unlikely event you are hit by this ransomware, you might as well learn how to crack the ZIP encryption yourself, and avoid having to rely on a criminal for help.

Cracking your own code

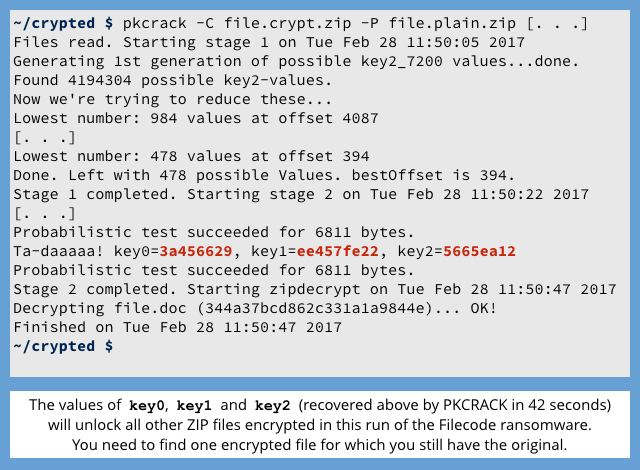

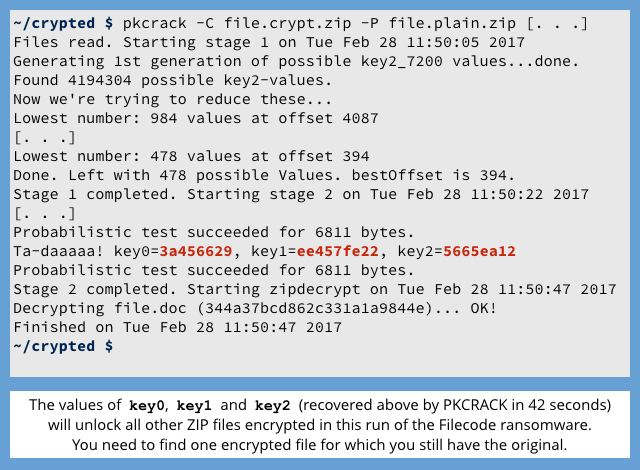

In our tests, a ZIP cracking tool called PKCRACK (it’s free to download, but you have to send a postcard to the author if you use it) was able to figure out how to recover Filecode-encrypted files in just 42 seconds.

That’s because the standard encryption algorithm used in the ZIP application was created by the late Phil Katz (the PK in the original PKZIP software), who was a programmer but not a cryptographer.

The algorithm was soon deconstructed and cracked, and software tools to automate the process quickly followed.

PKCRACK doesn’t work out the actual 25-character password used by the ransomware in the ZIP command; instead, it reverse-engineers three 32-bit (4 byte) key values that can be used to configure the internals of the decryption algorithm correctly, essentially short-circuiting the need to start with the password to generate the key material.
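For the curious, here is a minimal Python sketch of the traditional PKZIP cipher (often called ZipCrypto). It is written from the algorithm’s public description in PKWARE’s APPNOTE.TXT. It shows why the password itself hardly matters. The three 32-bit keys start from fixed constants, and the password is simply stirred into them one byte at a time. After that, those three keys are the cipher’s entire state. The names (`ZipCryptoKeys`, `crc32_step` and so on) are our own illustrative choices, and this is not PKCRACK’s code.

```python
# Sketch of PKZIP's traditional ("ZipCrypto") stream cipher, following the
# published algorithm description. Illustrative only; names are hypothetical.

def _crc_table():
    """Standard reflected CRC-32 table (polynomial 0xEDB88320)."""
    table = []
    for n in range(256):
        c = n
        for _ in range(8):
            c = (c >> 1) ^ 0xEDB88320 if c & 1 else c >> 1
        table.append(c)
    return table

CRC_TABLE = _crc_table()

def crc32_step(crc: int, byte: int) -> int:
    """One byte of CRC-32 update, without the usual pre/post inversion."""
    return (crc >> 8) ^ CRC_TABLE[(crc ^ byte) & 0xFF]

class ZipCryptoKeys:
    """The cipher's entire internal state: three 32-bit keys."""

    def __init__(self, key0=0x12345678, key1=0x23456789, key2=0x34567890):
        self.key0, self.key1, self.key2 = key0, key1, key2

    @classmethod
    def from_password(cls, password: bytes) -> "ZipCryptoKeys":
        keys = cls()                  # fixed starting constants
        for b in password:            # the password only "stirs" the keys
            keys.update(b)
        return keys

    def update(self, plain_byte: int) -> None:
        """Mix one plaintext byte into the three keys."""
        self.key0 = crc32_step(self.key0, plain_byte)
        self.key1 = (self.key1 + (self.key0 & 0xFF)) & 0xFFFFFFFF
        self.key1 = (self.key1 * 134775813 + 1) & 0xFFFFFFFF
        self.key2 = crc32_step(self.key2, self.key1 >> 24)

    def stream_byte(self) -> int:
        """Next keystream byte; only bits 2..15 of key2 affect it."""
        temp = (self.key2 | 2) & 0xFFFF
        return ((temp * (temp ^ 1)) >> 8) & 0xFF

def encrypt(keys: ZipCryptoKeys, data: bytes) -> bytes:
    """XOR each byte with the keystream, then feed the plaintext back in."""
    out = bytearray()
    for p in data:
        out.append(p ^ keys.stream_byte())
        keys.update(p)
    return bytes(out)

def decrypt(keys: ZipCryptoKeys, data: bytes) -> bytes:
    """Inverse of encrypt(): recover each plaintext byte, then update."""
    out = bytearray()
    for c in data:
        p = c ^ keys.stream_byte()
        keys.update(p)
        out.append(p)
    return bytes(out)

if __name__ == "__main__":
    k = ZipCryptoKeys.from_password(b"example-password")  # hypothetical password
    print(f"{k.key0:08x} {k.key1:08x} {k.key2:08x}")
```

Decryption needs only those three numbers. Anyone who recovers them can therefore skip the password entirely, and that is the short cut PKCRACK takes.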

If we assume a choice of 62 different characters (A-Z, a-z and 0-9), then there are a whopping $62^{25}$ alternatives to try, or about one billion billion billion billion billion.

But by focusing on the three internal 32-bit key values inside PKZIP’s encryption process, and the fact that only a small subset of combinations are possible, PKCRACK and other ZIP recovery tools can do the job almost immediately.
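The arithmetic in the last two paragraphs is easy to check. This short Python snippet compares two numbers. The first is how many 25-character passwords there are, assuming (as above) an alphabet of 62 characters. The second is the size of PKZIP’s internal key state, which is three 32-bit numbers no matter how long the password is.

```python
import math

# Assumptions taken from the article: 62 symbols (A-Z, a-z, 0-9), 25 characters.
ALPHABET_SIZE = 62
PASSWORD_LENGTH = 25

# Exact number of possible passwords, and the same figure expressed in bits.
password_space = ALPHABET_SIZE ** PASSWORD_LENGTH
password_bits = PASSWORD_LENGTH * math.log2(ALPHABET_SIZE)

# However long the password, the cipher's state is three 32-bit keys.
state_bits = 3 * 32
state_space = 2 ** state_bits

print(f"possible passwords: {password_space:.2e} (~{password_bits:.0f} bits)")
print(f"as a fraction of one billion^5 (10^45): {password_space / 10**45:.2f}")
print(f"possible key states: {state_space:.2e} ({state_bits} bits)")
```

The script reports about 149 bits of password space against just 96 bits of internal state. Even 96 bits is far too many to try one at a time. Known plaintext is what narrows the search enough for cracking tools to finish quickly.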

The only caveat is that you need to have an original copy of any one of the files that was encrypted by Filecode, because ZIP cracking tools rely on what’s called a known plaintext attack, where comparing the input and output of the encryption algorithm for a known file greatly speeds up recovery of the key.
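PKCRACK implements the known plaintext attack on the PKZIP cipher that Eli Biham and Paul Kocher published in 1994. The Python sketch below shows only the very first step of that attack. It assumes you can line up bytes of the known plaintext with the matching encrypted bytes. XORing each pair reveals a keystream byte, and that byte depends only on bits 2 to 15 of the cipher’s third key (`key2`). Each keystream byte therefore cuts those 14 bits from 16,384 possibilities down to 64 on average (2^14 / 2^8). The function names are hypothetical, and the real attack chains many such constraints together across all three keys.

```python
from collections import defaultdict

def stream_byte(key2: int) -> int:
    """ZipCrypto keystream byte; only bits 2..15 of key2 affect it."""
    temp = (key2 | 2) & 0xFFFF
    return ((temp * (temp ^ 1)) >> 8) & 0xFF

def build_inverse_table() -> dict:
    """Map each keystream byte to the 14-bit values (key2 bits 2..15) producing it."""
    table = defaultdict(list)
    for bits_2_to_15 in range(1 << 14):
        table[stream_byte(bits_2_to_15 << 2)].append(bits_2_to_15)
    return table

INVERSE = build_inverse_table()

def key2_candidates(known_plain: bytes, ciphertext: bytes) -> list:
    """XOR of plaintext and ciphertext gives the keystream byte at each
    position, which narrows bits 2..15 of key2 there to a short list."""
    return [INVERSE[p ^ c] for p, c in zip(known_plain, ciphertext)]

if __name__ == "__main__":
    avg = sum(len(v) for v in INVERSE.values()) / len(INVERSE)
    print(f"average candidates per keystream byte: {avg:.0f} of {1 << 14}")
```

A single keystream byte still leaves dozens of candidates. Consecutive bytes are linked through the key-update rules, though, so each extra byte of known plaintext prunes the list further. That chaining is the part this sketch leaves out.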

Once you’ve cracked the 32-bit key values for one file, you can use the same values to decrypt all the other files directly, so you’re home free.
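This self-contained Python sketch shows why one set of cracked keys unlocks everything. It assumes you already have the three key values as they stand just after the password has been processed. Every file in one Filecode run starts from that same state, because the same password was used throughout. Each encrypted ZIP entry also begins with a 12-byte encryption header (mostly random bytes), so the sketch decrypts those bytes and then discards them. The helper names are hypothetical. `keys_from_password()` merely stands in for whatever key values a cracking tool would hand you.

```python
import os

def _crc_table():
    """Standard reflected CRC-32 table (polynomial 0xEDB88320)."""
    table = []
    for n in range(256):
        c = n
        for _ in range(8):
            c = (c >> 1) ^ 0xEDB88320 if c & 1 else c >> 1
        table.append(c)
    return table

CRC_TABLE = _crc_table()

def _crc_step(crc, byte):
    return (crc >> 8) ^ CRC_TABLE[(crc ^ byte) & 0xFF]

def _update(k0, k1, k2, plain_byte):
    """ZipCrypto key update for one plaintext byte."""
    k0 = _crc_step(k0, plain_byte)
    k1 = ((k1 + (k0 & 0xFF)) * 134775813 + 1) & 0xFFFFFFFF
    k2 = _crc_step(k2, k1 >> 24)
    return k0, k1, k2

def _stream(k2):
    t = (k2 | 2) & 0xFFFF
    return ((t * (t ^ 1)) >> 8) & 0xFF

def keys_from_password(password: bytes) -> tuple:
    """Stand-in for cracked keys: the state right after the password step."""
    k0, k1, k2 = 0x12345678, 0x23456789, 0x34567890
    for b in password:
        k0, k1, k2 = _update(k0, k1, k2, b)
    return k0, k1, k2

def encrypt_entry(keys: tuple, header12: bytes, data: bytes) -> bytes:
    """Encrypt a 12-byte header plus data, starting from the given keys."""
    k0, k1, k2 = keys
    out = bytearray()
    for p in header12 + data:
        out.append(p ^ _stream(k2))
        k0, k1, k2 = _update(k0, k1, k2, p)
    return bytes(out)

def decrypt_entry(keys: tuple, encrypted: bytes) -> bytes:
    """Decrypt using only the three keys, then drop the 12-byte header."""
    k0, k1, k2 = keys
    out = bytearray()
    for c in encrypted:
        p = c ^ _stream(k2)
        k0, k1, k2 = _update(k0, k1, k2, p)
        out.append(p)
    return bytes(out[12:])

if __name__ == "__main__":
    recovered = keys_from_password(b"hypothetical-25-char-pass")
    files = [b"first file", b"second, different file"]
    blobs = [encrypt_entry(recovered, os.urandom(12), f) for f in files]
    print([decrypt_entry(recovered, b) for b in blobs])
```

In the demo, two files share the same hypothetical password but have different random headers. Both decrypt correctly from the one key tuple, and the password is never needed at the decryption stage.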

What to do?

Watch this space for our followup article giving a step-by-step description of how we got our own files back for free from our sacrificial test Mac!


Article source: http://feedproxy.google.com/~r/nakedsecurity/~3/qJgenobYg8M/