Many Macs don’t receive EFI security fixes even when their operating system is fully updated, putting machines at risk of targeted cyberattacks.

A systemic problem in several popular Apple Mac computer models is leaving machines vulnerable to stealthy and targeted cyberattacks.

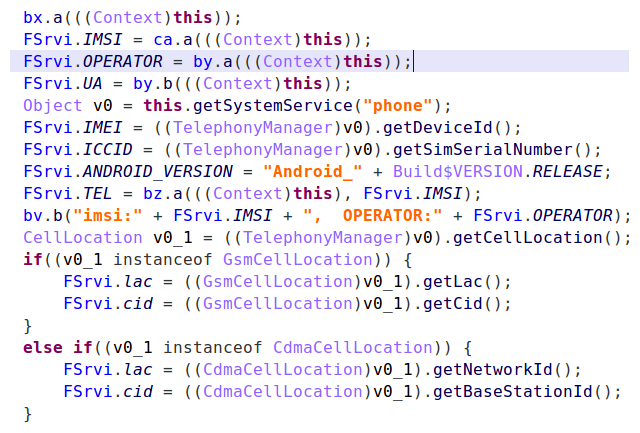

Researchers at Duo Security analyzed 73,000 real-world Mac systems from users across industries, covering three years of OS updates. They found that many don’t receive Extensible Firmware Interface (EFI) security fixes when they upgrade to the latest OS or install security updates. This exposes them to threats like Thunderstrike 2 and the firmware attacks detailed in the Vault 7 leaks.

Attacks on the EFI layer, the firmware that initializes the hardware and boots the system, are especially threatening because they give attackers a high level of privilege on target systems.

“At that layer, [attacks] can influence anything on the layers above,” says Rich Smith, director of R&D at Duo. “You can really circumvent any security controls that may be in place … it’s ultimate power in terms of raw access to what the computer has to offer.”

“For the longest time, Apple didn’t do a lot to keep [EFI firmware] up-to-date, and it was very manual,” explains R&D engineer Pepijn Bruienne. After Thunderstrike 1 was disclosed, Apple recognized the danger and, starting in 2015, simplified its update process by bundling EFI fixes with OS updates.

The problem is that a significant number of machines do not receive these EFI security updates when they upgrade their operating systems, so their software is patched while their firmware remains exposed.

What’s the damage?

Researchers found major discrepancies between the EFI versions the analyzed systems were running and the versions they should have been running.

Overall, only 4.2% of the Macs Duo analyzed were running an EFI firmware version different from the one they should have had, based on their hardware model, OS version, and the EFI update bundled with that OS. Certain models, however, fared far worse than others.

At least sixteen Mac models running a supported Apple OS have never received any EFI firmware updates. The most affected model is the 21.5″ iMac released in late 2015: researchers found that 43% of the systems of this model they analyzed were running the wrong EFI version.

Users running a version of macOS/OS X older than the latest major release (High Sierra) likely have EFI firmware that lacks the latest EFI fixes. Forty-seven Mac models capable of running OS versions 10.12, 10.11, and 10.10 did not have an EFI firmware patch for the Thunderstrike 1 vulnerability. Thirty-one models capable of running the same versions didn’t have a patch for its remote variant, Thunderstrike 2. Two recent Apple security updates (Security Update 2017-001 for Yosemite 10.10 and for El Capitan 10.11) shipped with the wrong EFI firmware.

“While we can see the discrepancies and see what is happening, we can’t necessarily see why it is happening,” says Bruienne. Researchers say there is something interfering with the way bundled EFI updates are installed, which is why some systems are running older EFI versions.

Danger to the enterprise

Firmware sits below the operating system, application code, and hypervisors. Low-level attacks targeting firmware therefore put attackers at an advantage, explains Smith.

Each EFI vulnerability is different, so details vary, but in general they are exploited by someone with physical access to a machine who plugs a specially crafted device into a port that supports direct memory access (DMA), such as a Thunderbolt or FireWire connection. These are often called “evil maid” attacks. Thunderstrike 2 is the exception: it was purely software-based.

“Attacking EFI can be considered a sophisticated attack that would be used by nation-states or industrial espionage threat actors, and not something we expect to be used indiscriminately,” says Smith.

These attacks are difficult to detect and even harder to remediate; once malware is installed in the firmware, even wiping the hard drive would not completely eliminate it, says Smith. “From an attacker’s perspective it’s very stealthy,” he notes. “It’s very difficult to remove a compromise on a system.”

While the implications of compromised EFI are “quite severe,” the people who most need to be aware of this are those working in higher-security environments. Tech companies, governments, and hacktivists, for example, are at risk of being targeted.

Fixing the problem

Smith advises businesses to check that they are running the latest version of EFI for their systems; Duo released a tool today for conducting these checks. If possible, update to the latest version of the OS (10.12.6 at the time of Duo’s analysis), which delivers the latest versions of Apple’s EFI firmware and patches known software security problems.

If you cannot update to 10.12.6 for compatibility reasons or because your hardware cannot run it, you may not be able to run up-to-date EFI firmware. Check Duo’s research for a list of hardware that hasn’t received an EFI update.
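Duo’s tool itself is not reproduced here. As a rough sketch of the kind of check Smith describes, the Python below reads a Mac’s model identifier and EFI (Boot ROM) version from the output of `system_profiler SPHardwareDataType` and compares them with a table of expected versions. Several assumptions apply: the field labels “Model Identifier” and “Boot ROM Version” are taken as printed on Intel Macs of this era, and the `EXPECTED_EFI` and `NO_EFI_UPDATES` tables, along with every model name and version string in them, are hypothetical placeholders. Real expected values depend on the exact model, OS build, and bundled EFI update, and would have to come from a trusted source such as Duo’s published data.

```python
import platform
import re
import subprocess
from typing import Dict, Optional, Set

# HYPOTHETICAL placeholder data: not real Apple model identifiers or EFI versions.
# Real values would come from a trusted source such as Duo's published research.
EXPECTED_EFI: Dict[str, str] = {
    "ExampleMac1,1": "EX11.0100.B00",
}
# HYPOTHETICAL list of models that have never received an EFI update.
NO_EFI_UPDATES: Set[str] = {"ExampleMac2,1"}


def parse_field(profiler_text: str, label: str) -> Optional[str]:
    """Return the value printed after 'label:' in system_profiler text output."""
    pattern = rf"^\s*{re.escape(label)}:\s*(.+?)\s*$"
    match = re.search(pattern, profiler_text, flags=re.MULTILINE)
    return match.group(1) if match else None


def check_efi(
    model: Optional[str],
    running: Optional[str],
    expected_table: Dict[str, str],
    never_updated: Set[str],
) -> str:
    """Classify a machine's EFI state against the expected-version table."""
    if model in never_updated:
        return "no-updates"  # hardware for which no EFI fix exists
    expected = expected_table.get(model) if model else None
    if expected is None or running is None:
        return "unknown"  # model or version not covered by the table
    # Exact match: the machine runs the EFI version it ought to be running.
    return "ok" if running == expected else "mismatch"


def read_hardware_overview() -> str:
    """Run system_profiler (macOS only) and return its text output."""
    result = subprocess.run(
        ["system_profiler", "SPHardwareDataType"],
        capture_output=True,
        text=True,
        check=True,
    )
    return result.stdout


if __name__ == "__main__" and platform.system() == "Darwin":
    text = read_hardware_overview()
    model = parse_field(text, "Model Identifier")
    running = parse_field(text, "Boot ROM Version")
    print(model, running, check_efi(model, running, EXPECTED_EFI, NO_EFI_UPDATES))
```

Exact string equality mirrors the article’s measure of a Mac running an EFI version different from the one it ought to have. A `mismatch` result corresponds to the failed bundled update the researchers observed, while `no-updates` flags hardware that, per Smith’s advice, is a candidate for replacement or for a more isolated role.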

Given that EFI attacks are mostly used by advanced actors, consider whether your threat model includes adversaries this sophisticated. If these are attacks you proactively secure against, think about how a system with compromised EFI could affect your environment, and how you could confirm the integrity of your Macs’ EFI firmware.

“In many situations, answers to those questions would be ‘badly’ and ‘we probably wouldn’t be able to,’” says Smith. In these cases, he suggests replacing Macs that cannot update their EFI firmware, or moving them into roles where they are not exposed, such as physically secure environments with controlled network access.

Duo shared its findings with Apple in June, and Smith says interactions with the company have been “very positive.” Apple has taken steps to address the problem with the release of macOS 10.13 (High Sierra).


Kelly Sheridan is Associate Editor at Dark Reading. She started her career in business tech journalism at Insurance Technology and most recently reported for InformationWeek, where she covered Microsoft and business IT. Sheridan earned her BA at Villanova University.

Article source: https://www.darkreading.com/vulnerabilities---threats/apple-mac-models-vulnerable-to-targeted-attacks/d/d-id/1330015?_mc=rss_x_drr_edt_aud_dr_x_x-rss-simple