Celebgate 3.0: Miley Cyrus among victims of photo thieves

Here we go again: it’s Celebgate 3.0, and that means a new round of stolen intimate photos of celebrities and tee-hee’ing jerks.

This time around, photos have been gang-grabbed from Miley Cyrus (pictured), Stella Maxwell, Kristen Stewart, Tiger Woods, Lindsey Vonn and Katharine McPhee.

The celebrity leak sites that posted the stolen content don’t merit whatever traffic they might get if we shared their names. Suffice it to say that one of them considers itself a “satirical website” that publishes rumors, speculation, assumptions, opinions, fiction, and what it calls facts… And, obviously, illegal stolen content.

According to Fossbytes, McPhee is taking legal action against the sites that published her content. Ditto for Woods and for Kristen Stewart and her girlfriend, Stella Maxwell, said TMZ.

Vonn, an Olympic skier and Woods’ former girlfriend, called the theft “a despicable invasion of privacy”. The photos were stolen from her cell phone a few years ago. Her spokesman told People that she’s lawyering up:

Lindsey will take all necessary and appropriate legal action to protect and enforce her rights and interests. She believes the individuals responsible for hacking her private photos as well as the websites that encourage this detestable conduct should be prosecuted to the fullest extent under the law.

Celebs suffered through this type of mugging in 2014 with Celebgate 1.0. In v1, thieves and many equally scumbaggy photo-sharers trampled over the privacy of Jennifer Lawrence, Kate Upton, Kirsten Dunst, Selena Gomez, Kim Kardashian, Vanessa Hudgens, Lea Michele, Winona Ryder, Hulk Hogan’s son and Hilary Duff, among dozens of other, mostly women, celebrities.

The photos in this latest round were still up as of Thursday evening.

We’ve seen multiple men convicted and given jail time over prying open the Gmail and iCloud accounts of Hollywood glitterati, but that sure didn’t stop Celebgate 2.0: in March, we saw the intimate photos of Emma Watson and Amanda Seyfried stolen and posted.

How to trip up the thieves

According to the FBI, the original Celebgate thefts were carried out by a ring of attackers who launched phishing and password-reset scams on celebrities’ iCloud and email accounts.

One of them, Edward Majerczyk, got to his victims by sending messages doctored to look like security notices from ISPs. Another Celebgate convict, Ryan Collins, chose to make his phishing messages look like they came from Apple or Google.

These guys’ pawing was persistent: the IP address of one of the Celebgate suspects, Emilio Herrera, was allegedly used to access 572 unique iCloud accounts. The IP address went after some of those accounts numerous times: in total, somebody using it allegedly tried to access those 572 accounts 3,263 times. Somebody at that IP address also allegedly tried to reset the passwords of 1,987 unique iCloud accounts approximately 4,980 times.
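To put those numbers in perspective, here is a quick back-of-the-envelope calculation of how many attempts each account attracted on average. The figures are the ones reported above; the variable and function names are just illustrative.

```python
# Figures as reported in the article (allegations tied to a single IP address).
accounts_accessed = 572
access_attempts = 3_263
accounts_targeted_for_reset = 1_987
reset_attempts = 4_980


def average_attempts(attempts: int, accounts: int) -> float:
    """Mean number of attempts per unique account."""
    return attempts / accounts


avg_access = average_attempts(access_attempts, accounts_accessed)
avg_reset = average_attempts(reset_attempts, accounts_targeted_for_reset)

print(f"Access attempts per account: {avg_access:.1f}")        # 5.7
print(f"Password-reset attempts per account: {avg_reset:.1f}")  # 2.5
```

In other words, each account was hit nearly six times on average, and each password-reset target was tried two or three times.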

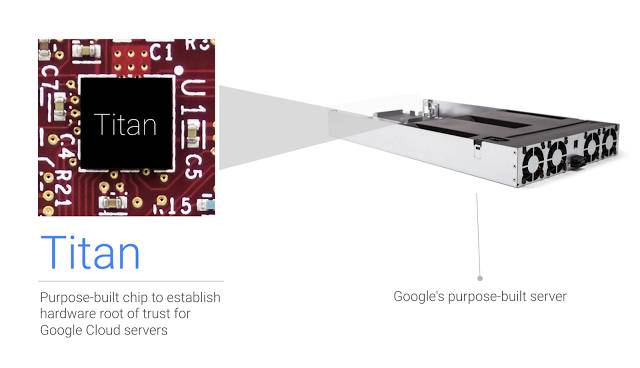

Some of the suspects used a password-breaker tool to get into the accounts: a tool that doesn’t require special tech skills to use. In fact, anybody can purchase one online and use it to download the contents of a victim’s iCloud account if they know his or her login credentials.

To get those credentials, crooks phish their targets, be it by email, text message or iMessage.

All of which points to how scams that seem as old as the hills – like phishing – are still very much a viable threat.

Anybody who owns an email account and a body they don’t want to see parading around the internet without their permission should be on the lookout, though telling the difference between legitimate and illegitimate messages can be tough.
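One check that can help is looking at where a link actually points rather than at what it claims to be. The sketch below is a minimal illustration, not a complete phishing detector: `host_matches` is a hypothetical helper, the URLs are made-up examples, and a matching domain doesn't prove a message is legitimate. It also won't catch lookalike characters in domain names.

```python
from urllib.parse import urlsplit


def host_matches(url: str, trusted_domain: str) -> bool:
    """Return True if the URL's host is trusted_domain or one of its subdomains."""
    host = (urlsplit(url).hostname or "").lower().rstrip(".")
    trusted = trusted_domain.lower()
    return host == trusted or host.endswith("." + trusted)


# A genuine subdomain of the trusted domain passes.
print(host_matches("https://appleid.apple.com/account", "apple.com"))

# The trusted name appears in the link, but the real host is something else.
print(host_matches("https://apple.com.security-check.example/login", "apple.com"))

# Text before "@" is a username, not the host: this link goes to evil.example.
print(host_matches("http://apple.com@evil.example/", "apple.com"))
```

The comparison is made on the whole hostname, so a link that merely contains a familiar name somewhere in it doesn't pass.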

Here are some ways to keep your private images from winding up in the thieves’ sweaty palms:

- Don’t click on links in email and thus get your login credentials phished away. If you really think your ISP, for example, might be trying to contact you, instead of clicking on the email link, get in touch by typing in the URL for its website and contacting it via a phone number or email you find there.

- Use strong passwords.

- Lock down privacy settings on social media (here’s how to do it on Facebook, for example).

- Don’t friend people you haven’t met on Facebook, and don’t share photos with people you don’t know and trust. For that matter, be careful of those who you consider your “friends”. One example of creeps posing as friends can be found on the creepshot sharing site Anon-IB, where users have posted images they say they took from Instagram feeds of “a friend”.

- Use multifactor authentication (MFA) whenever possible. MFA means you need a one-time login code, as well as your username and password, every time you log in. That’s one more thing the scumbags need to figure out every time they try to phish you.
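For the curious, here is a rough sketch of how one common kind of one-time login code is generated: the time-based one-time password (TOTP) scheme from RFC 6238, which many authenticator apps use. This is an assumption about the sort of code a service might send or accept; some providers, Apple included, have their own mechanisms. The secret below is the RFC's published test value, not something to use for a real account.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def totp(secret: bytes, at_time: Optional[float] = None,
         step: int = 30, digits: int = 6) -> str:
    """Compute a time-based one-time password (RFC 6238, HMAC-SHA1)."""
    now = time.time() if at_time is None else at_time
    # The code changes once per time step (30 seconds by default).
    counter = int(now // step)
    digest = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    # Dynamic truncation (RFC 4226): pick 4 bytes based on the last nibble.
    offset = digest[-1] & 0x0F
    value = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(value % 10 ** digits).zfill(digits)


# Test secret from RFC 6238, for illustration only.
shared_secret = b"12345678901234567890"

print(totp(shared_secret))                          # current 6-digit code
print(totp(shared_secret, at_time=59, digits=8))    # RFC test vector: 94287082
```

Because the code depends on both a shared secret and the current time, a phished password alone isn't enough to log in, and a stolen code stops working after a short window.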

Article source: http://feedproxy.google.com/~r/nakedsecurity/~3/9idObu-SMLA/