Uber is in the computer security news again, this time over allegations that its 2FA is no good.

ZDNet, for instance, doesn’t mince its words at all, leading with the headline, “Uber ignores security bug that makes its two-factor authentication useless.”

Two-factor authentication, or 2FA, is also known as 2SV, short for two-step verification.

It’s an increasingly common security procedure that aims to protect your online accounts against password-stealing cybercrooks.

When you log in, you have to put in your usual password, which typically doesn’t change very often, plus an additional login code, which is different every time.

These one-time login codes are typically sent to you via SMS (text message) or voicemail, or calculated by a secure app that runs on your mobile phone.

The “second factor” is therefore usually your phone; the “second step” is figuring out and confirming the one-off password that crooks can’t predict in advance.
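To make the "calculated by a secure app" case concrete, here is a minimal sketch of how a time-based one-time code can be derived, following the publicly documented HOTP (RFC 4226) and TOTP (RFC 6238) algorithms. We don't know which scheme Uber uses behind the scenes, so treat this as an illustration of the general technique only; the function names `hotp` and `totp` and the default parameters are our own choices.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """Counter-based one-time code, following RFC 4226."""
    # HMAC-SHA1 over the counter, encoded as an 8-byte big-endian integer.
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    # Dynamic truncation: the low 4 bits of the last byte choose an offset.
    offset = mac[-1] & 0x0F
    # Take 4 bytes from that offset and clear the top bit.
    value = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    # Keep the last `digits` decimal digits, left-padded with zeros.
    return str(value % 10 ** digits).zfill(digits)


def totp(secret: bytes, now: Optional[float] = None,
         step: int = 30, digits: int = 6) -> str:
    """Time-based one-time code, following RFC 6238 (SHA-1 variant)."""
    if now is None:
        now = time.time()
    # The counter is the number of whole `step`-second windows since the epoch.
    return hotp(secret, int(now // step), digits)
```

With the test secret published in the RFCs, `totp(b"12345678901234567890", now=59)` gives `287082`. The phone and the server share the secret, so both can compute the same code for the current 30-second window, while a crook who knows only your password cannot.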

It’s not a perfect solution, but it does make it much harder for a crook who has just bought stolen usernames and passwords on the Dark Web: your password alone isn’t enough to raid your account.

What’s the story?

So, why is Uber’s 2FA supposedly “useless”?

According to ZDNet, an Indian security researcher has convinced them that he can bypass Uber’s 2FA, thus reducing your security back to what it was before 2FA was introduced.

So, when would you expect to see a 2FA prompt?

We’re not Uber users, but some of our colleagues are, and as far as we can tell, Uber doesn’t have an option to force on an additional 2FA check every time you log in.

Apparently, Uber automatically activates 2FA only when it thinks the risk warrants it.

This approach works because fraudulent logins frequently stand out from regular logins: they come from a different country; a different ISP; a new browser; an unusual operating system; and so on.
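As a rough sketch of the idea, a risk-based check might compare each login attempt with what the service has already seen for that account. Everything below is hypothetical: the class names, the four signals, the `remember` helper and the threshold are our own assumptions for illustration, not a description of Uber's actual logic.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class LoginAttempt:
    country: str
    isp: str
    browser: str
    operating_system: str


@dataclass
class AccountHistory:
    # Values seen on earlier trusted logins for this account (hypothetical).
    countries: set = field(default_factory=set)
    isps: set = field(default_factory=set)
    browsers: set = field(default_factory=set)
    operating_systems: set = field(default_factory=set)


def unfamiliar_signals(attempt: LoginAttempt, history: AccountHistory) -> list:
    """Return the names of the signals that don't match any earlier login."""
    checks = [
        ("country", attempt.country, history.countries),
        ("ISP", attempt.isp, history.isps),
        ("browser", attempt.browser, history.browsers),
        ("operating system", attempt.operating_system, history.operating_systems),
    ]
    return [name for name, value, seen in checks if value not in seen]


def needs_second_factor(attempt: LoginAttempt, history: AccountHistory,
                        threshold: int = 1) -> bool:
    """Ask for a one-time code when enough signals look unfamiliar."""
    return len(unfamiliar_signals(attempt, history)) >= threshold


def remember(attempt: LoginAttempt, history: AccountHistory) -> None:
    """Record a login that passed its checks, so it counts as familiar later."""
    history.countries.add(attempt.country)
    history.isps.add(attempt.isp)
    history.browsers.add(attempt.browser)
    history.operating_systems.add(attempt.operating_system)
```

In this sketch, a first login from a new browser and operating system triggers a prompt, but the same combination is waved through once it has been remembered. A real service would presumably weigh many more signals, and weigh them less crudely.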

In a few tests here at Sophos HQ in Oxfordshire, England (ironically, Uber isn’t licensed to operate in Oxford, but that is a story for another time), we were able to provoke Uber’s 2FA prompts easily enough.

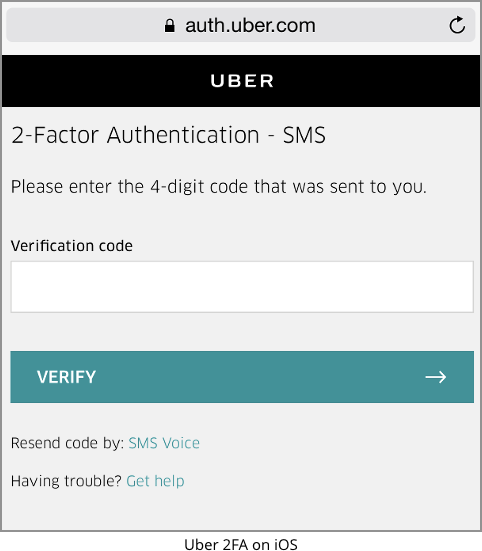

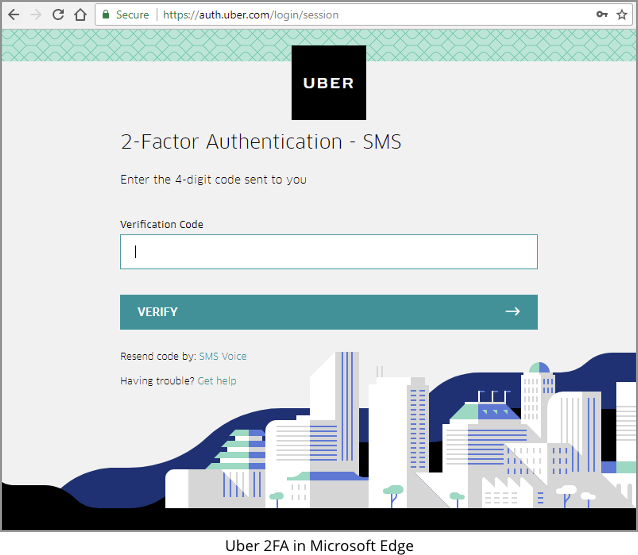

For example, we were asked for a one-time code after by forcing a password reset via the mobile app:

We also tried logging in to the mobile app and then connecting via a regular browser from a laptop, whereupon we hit the 2FA system, too:

Once Uber “knew” about the laptop, the Uber servers didn’t ask for 2FA codes again when we logged back in from the same computer.

Is 2FA worth it?

Uber’s “part-time” approach to 2FA seems rather self-defeating: if 2FA is worth doing, surely it’s worth doing all the time?

Unfortunately, in real life, 2FA is not as popular as you might expect: Google, for example, recently lamented that the 2FA takeup rate amongst Gmail users is still below 10%.

In other words, fewer than 10% of Gmail users have turned the feature on.

Reasons for spurning 2FA include: I don’t trust Google with my phone number; it’s too much hassle; I get locked out every time I leave my phone at home; no or poor mobile coverage in my area; nothing worth hiding anyway.

In short: convenience before security.

Uber’s approach therefore takes a middle ground common to many online services, such as Google’s CAPTCHA system: try to avoid any extra customer-facing security shenanigans whenever possible.

Simply put: there’s a school of thought that it’s better to have everyone using 2FA some of the time, ideally when it’s most worth it, than to have most people not using it at all because of its perceived problems.

Is it useless?

If you could figure out what triggers a “part-time” 2FA system and therefore learn how to trick it into misidentifying you as a low-risk login, and you could reliably do it every time, you might reasonably claim that the 2FA system concerned was useless.

But in this instance, ZDNet admitted that “in some cases the bug would work, and in others the bug would fail, with nothing obvious to determine why.”

In other words, even if the effectiveness of Uber’s 2FA is less than expected, it doesn’t sound as though it’s strictly useless.

You’d also like to think that Uber deliberately doesn’t keep the when-to-activate logic in its 2FA system static, in order to keep the crooks on their toes.

(Of course, Uber infamously tried to hush up a recent data breach by paying off hackers under the guise of a bug bounty, and sacked its Chief Security Officer during the fallout, so just how proactive its security practices are remains to be seen.)

For all that Uber has done plenty to attract well-merited criticism in the past, we’re not sure that calling its 2FA “useless” on the basis of a bug that can’t reliably be reproduced is entirely fair…

…though if we were Uber, we’d make some tweaks anyway, such as the one we suggest at the end of the article.

What to do?

Suppressing 2FA doesn’t give cybercrooks a free lunch: they still need to crack your regular password, so your choice of password still matters.

Having said all that, we do have a suggestion for Uber, and for any other online services with “part-time” 2FA that only kicks in from time to time:

- Offer an “always on” option for 2FA.
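In code terms, an "always on" option could be as small as a per-account setting that overrides whatever the risk check decides. The sketch below is purely illustrative: `TwoFactorSettings`, `always_on` and `should_prompt` are names we made up, and `looks_risky` stands in for the outcome of whatever part-time logic the service already has.

```python
from dataclasses import dataclass


@dataclass
class TwoFactorSettings:
    # Hypothetical per-account opt-in; off by default, so nothing changes
    # for users who prefer convenience.
    always_on: bool = False


def should_prompt(settings: TwoFactorSettings, looks_risky: bool) -> bool:
    """Prompt if the user opted in, or if the risk check flagged the login."""
    return settings.always_on or looks_risky
```

Users who leave the setting alone see exactly the behaviour they get today, while users who turn it on are asked for a code at every login.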

Here’s our message to Uber, and anyone else out there with what you might call “part-time 2FA”…

…there is an important minority of users out there who favour security over convenience, and who would be happy to turn 2FA on permanently, so don’t be afraid to let them lead the way!

Article source: http://feedproxy.google.com/~r/nakedsecurity/~3/sufdSbenWKI/