One of Bitcoin’s attractions was always anonymity, but if you used it to buy something, your parcel was always trackable. Now, some researchers have used the blockchain concept underpinning it to make deliveries anonymous, too.

Bitcoin may have made money anonymous, but the problem is that privacy collapses as soon as you touch conventional centralized institutions, like the postal service or branded delivery companies. If you don’t want someone to know what you ordered, you take a risk sending it via the mail – although with drugs so easily concealable, many in Canada have willingly taken that chance for years.

Now, academics have taken the onion routing concept popularized by Tor and married it with the blockchain to produce an anonymous parcel delivery system that is difficult, if not impossible, to track.

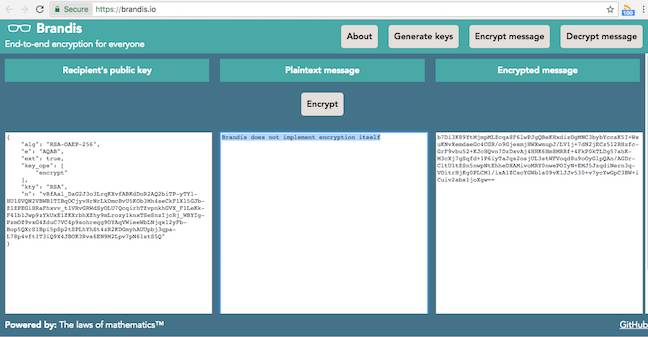

As described in this academic paper, the Lelantos delivery system uses Ethereum, one of the most promising new blockchains. While Bitcoin stores records of financial transactions in the blockchain, Ethereum runs entire programs in it. They’re called smart contracts, and are written in a programming language called Solidity. The contracts are distributed across different nodes in the blockchain and therefore not controlled by any one party. They use rules and events to trigger messages and send Ethereum’s own currency, called Ether.

How Lelantos works

Bob has ordered something privately from Alice on the dark web, and doesn’t want anyone to know that he’s the recipient. Especially Eve, who has an unhealthy interest in his affairs. He could have Alice send it to a PO box, but then the mail carrier might open his parcel and tell Eve.

Instead, Bob chooses several delivery companies that support Lelantos. He uses the Ethereum smart contract to organize them in a chain, with each relaying the package to another.

Along with his order to Alice, he sends an encrypted message with a tracking number and the address of the first delivery company in his chain. He also sends encrypted messages to the delivery companies he has selected. Each message contains the encrypted addresses of the other delivery companies.

Alice takes his Ether, and sends the incriminating item to the first company. Bob’s smart contract, which is keeping track of all this, then instructs that company to create a label using the address of the next company in the chain. Each time the parcel arrives at a new delivery company, the smart contract (which no one can link to Bob) instructs that company to send it on using the encrypted address it was given.

He can do this as many times as he likes, getting delivery companies to play pass the parcel until he feels confident enough to collect it. He then sends a message telling the current holder to stop relaying the parcel and wait for him to come and pick it up. So at no point will any party know both the source and the destination of the package, meaning that if they opened it, they wouldn’t be able to compromise Bob.
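The relay loop described above can be sketched as a small state machine. This is a toy Python simulation, not the Lelantos contract itself (which would run on Ethereum): the class name `RelayContract`, its methods, the courier names and the instruction strings are all hypothetical, and the encryption of addresses is left out entirely. It only illustrates the control flow, in which each company is told the next hop and nothing more.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class RelayContract:
    """Toy stand-in for Bob's smart contract (hypothetical, not the Lelantos code)."""

    chain: list[str]        # delivery companies, in relay order
    position: int = 0       # index of the company currently holding the parcel
    holding: bool = False   # True once Bob has asked for pickup
    log: list[tuple[str, str]] = field(default_factory=list)

    def request_pickup(self) -> None:
        """Bob signals that the next company to receive the parcel should keep it."""
        self.holding = True

    def on_arrival(self) -> str:
        """Called when the parcel reaches the current holder; returns its instruction."""
        holder = self.chain[self.position]
        if self.holding:
            instruction = "HOLD_FOR_PICKUP"
        else:
            # Wrap around so the parcel can keep circulating as long as Bob likes.
            self.position = (self.position + 1) % len(self.chain)
            instruction = f"FORWARD_TO:{self.chain[self.position]}"
        # Each log entry records only (holder, next step): never the sender or Bob.
        self.log.append((holder, instruction))
        return instruction


contract = RelayContract(chain=["CourierA", "CourierB", "CourierC"])
steps = [contract.on_arrival(), contract.on_arrival()]
contract.request_pickup()
steps.append(contract.on_arrival())
print(steps)
# ['FORWARD_TO:CourierB', 'FORWARD_TO:CourierC', 'HOLD_FOR_PICKUP']
```

In this sketch Alice only ever learns the first courier, and each courier only learns where to send the parcel next, which is the property the scheme relies on.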

Even the most nefarious villains sometimes slip up. Ross Ulbricht, the mastermind behind the Silk Road dark web site, was finally collared after ordering fake identity documents from Canada delivered to his address on 15th Street in San Francisco. The police intercepted the package and paid him a visit, as they outline in this document, which is a fascinating account of OPSEC gone wrong.

If anyone ever deploys Lelantos, we’ve no doubt that people like Ulbricht will use it to deliver goods and materials that are against the law. That’s predictable, but assuming that Bob wasn’t the illegal type, why might he want to use an anonymous delivery service as a legitimate user?

The paper raises the spectre of Eve profiling him based on his reading habits, or targeting him for burglary after seeing that he had expensive items delivered. He might buy certain legal medicines by mail, or an HIV test kit, and Eve might work for his life insurance company.

The Lelantos paper also cites another use case: simple communication. Former president Jimmy Carter apparently prefers the postal service as a means of communicating with world leaders, because he doesn’t trust the intelligence agencies not to read his emails. For true privacy, perhaps he should consider a service like Bob’s?

From anonymous parcels to fair music

This is an ingenious use of the blockchain, and follows a trend in the use of the technology which tends to cut out the middle person. As with its original application in Bitcoin, the idea with many newer implementations is to remove a single player – a bank, a large “sharing economy” broker, an auction-style e-commerce marketplace – and connect people directly to each other, giving them a way to transact while ensuring that no one can tamper with the system.

Why wouldn’t you want a central hub to control everything? It could snoop on your data, rig markets, or just decide that it doesn’t like you and freeze your accounts. This is why the blockchain carries such promise.

While Lelantos decentralizes the parcel delivery process, another application decentralizes the music business. Some music distribution sites want to reward lots of people, specifically everyone involved in producing a song or album.

Music is an industry that the blockchain could disrupt, but there’s a right and a wrong way to do it. We’ve seen artists such as RAC go it alone to distribute entire albums directly on the Ethereum blockchain in a bid to cut out the middle man. That creates a complex and confusing process for the listener, as even he admits in this Motherboard interview:

“Buying Ethereum is still something of a bottleneck, but you’re going to have to buy ether. I’d recommend going to Coinbase or something like that to buy some. The album will be on a website and will work with a lot of built-in Ethereum wallets, like MetaMask or Parity. All you need to have is the chrome browser with the ether account already attached to it.”

You can almost hear thousands of fans blinking slowly and backing away as they read that.

That’s the problem with blockchain-based tech. It is inherently complex – people I know still ask “is Bitcoin money? I don’t get it. Wasn’t there that exchange that got hacked?”

The blockchain might still be unfathomable for the average music fan, but it’s a great way to ensure that everyone working on a piece of creative content gets paid when someone streams or buys it online.

Musician Imogen Heap (pictured) is building such a system, called Mycelia, after experimenting with blockchain-based music distribution using Ethereum. The idea is to create a fair, sustainable music system that pays everyone involved.

If smart contracts can hold details about which delivery company to send a parcel to next and how much money to send them, then they can also hold details about who wrote the song for a particular track or did the sampling. They could also pay those people their cut automatically when someone uses Ether to buy the song – and that might cut down on exploitative recording contracts.
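To make the automatic payout idea concrete, here is a minimal Python sketch of the arithmetic such a contract might perform. It is an illustration only, not Mycelia’s design: the function `split_payment`, the contributor names and the share weights are hypothetical. Amounts are in wei, Ether’s smallest unit (1 ether is 10^18 wei), and the sketch uses integer maths so that no wei is created or lost in rounding.

```python
from __future__ import annotations


def split_payment(amount_wei: int, shares: dict[str, int]) -> dict[str, int]:
    """Divide a payment between contributors in proportion to integer share weights."""
    if amount_wei < 0:
        raise ValueError("amount must not be negative")
    if not shares or any(weight <= 0 for weight in shares.values()):
        raise ValueError("every contributor needs a positive share weight")

    total_weight = sum(shares.values())
    # Floor division keeps everything in whole wei.
    payouts = {
        name: amount_wei * weight // total_weight
        for name, weight in shares.items()
    }
    # Rounding down can leave a few wei over; hand them out one at a time,
    # in listing order, so the payouts always sum to the original amount.
    remainder = amount_wei - sum(payouts.values())
    for name in list(shares)[:remainder]:
        payouts[name] += 1
    return payouts


# Hypothetical split for one track bought for 1 ether.
track_shares = {"songwriter": 50, "producer": 30, "sampler": 20}
print(split_payment(10**18, track_shares))
```

Handing the leftover wei to the first-listed contributors is an arbitrary choice made for this sketch; a real contract would need an agreed rule for it.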

Heap’s isn’t the only project along these lines. Muse is another blockchain-based project seeking artists to register on its network. It offers its information to streaming platforms, giving them the option to pay artists through its network using its internal currency, called Muse Dollars. Like Heap’s project, it’s still in the early stages.

That has little to do with transactional security or anonymity as such, but it does promote a different kind of security that seems to be central to the way that blockchains work: it maintains transparency, and keeps people honest.

Article source: http://feedproxy.google.com/~r/nakedsecurity/~3/Za44K89VDm8/

Black Hat USA returns to the fabulous Mandalay Bay in Las Vegas, Nevada, July 22-27, 2017. Click for information on the
